What?

RC4 is a popular encryption algorithm. The way it works is that a “Key Scheduling Algorithm” (KSA) takes your key and generates a 256-byte array, and then a “Pseudo-Random Generation Algorithm” (PRGA) uses that byte array to output an endless stream of bytes (the “key stream”), which look like random noise unless you know what the original byte array was. To encrypt a text, this key stream is XORed with a plaintext, and decryption is done by XORing the ciphertext with the key stream again.

RC4 is broken in a variety of situations. If you just naively use it twice on two different plaintexts then that is it, it’s broken. If you try to route around this by prepending a public random value to the key with each encryption, it’s still broken. Extracting a key given its KSA-generated state should be difficult, but Biham and Carmeli showed how to do this to a 5-byte key with an 86% success rate; Akgun, Kavak and Demirci improved this success rate to 99%, and showed how to get a 3% success rate for a 16-byte key; Basu, Maitra, Paul & Talukdar used intensive, high-time-complexity algorithms to improve the rate for a 16-byte key to 14% or so.

Before Reviewer 2 pre-empts the next paragraph by noting that actually we have failed to cite the latest research that has the figure up to 23 bytes and 35%, we will pre-empt the pre-emption by insisting that this isn’t the point. The point is that if we pick a novel and encrypt it with a random 12-byte RC4 key, then ask you to recover the novel title, you’re not going to have an easy time of it. You might object that this isn’t fair, and you’re right, it isn’t. Even the stupendously broken Hill Cipher, invented in 1929, is considered stupendously broken because you can break it given a known pair of plaintext and ciphertext — but as to breaking just a ciphertext alone, people were still cracking their heads over it as late as 2009 and 2015. What we are trying to say is that cryptography has an amazing capacity to inflict trauma on people who go around looking for problems, even near “solved” terrain, and that there’s plenty we don’t understand even about RC4 and how to break it. For the more pragmatically-minded among you, what we are also trying to say is that if someone wanted to create some perfectly serviceable ransomware based entirely on RC4 encryption, there’d be nothing stopping them.

Why?

We have a love-hate relationship with the part of cryptanalysis that pummels the problem to submission using statistics and \(2^{94}\) plaintext-ciphertext pairs. By all objective measures, we’re the problem: out of the best attacks known on RC4 (cited above), exactly all of them apply probability theory to exploit statistical biases, and exactly 0 proceed with “The proof is trivial! Just biject the ciphertext to a non-degenerate residue class whose elements are clopen metric spaces”. All we can do is childishly shout “well what about 23-byte keys, when is that 35% success rate coming, huh”, and then when it inevitably does, lean against our paperback copy of Computational Complexity: A Modern Approach and weep.

One thing we’ll say for the above-mentioned known plaintext attack on the Hill Cipher is that it is beautiful. It engages with the cipher on its own linear-algebraic terms, then uses that understanding to tease out the key in one elegant motion, like untying a knot. The attack can only exist because linear algebra is as “solved” and well-understood as it is. RC4 is not built on linear algebra — it is built on transpositions and permutations. Can we somehow better understand the little mathematical corner of transposition-and-permutation-land that RC4 lives in? If that’s what we want to do, we are motivated to completely ignore the best and most well-understood attacks that use statistics to deliver the coup de grace, and instead look for much weaker attacks or even almost-attacks that explore that mathematical corner and maybe shed some light on it.

How?

Unfortunately we are emphatically not equipped to engage with RC4 proper on that level; by the time you are done reading, you will understand why. It’s been said that mathematical reasoning can be like navigating a series of dark rooms, where you fumble around for the light switch until you finally find it, turn on the light and can proceed to the next room. In terms of this analogy, the rooms we can even enter are very far removed from anything resembling the grand prize. This doesn’t mean we should just give up. As Pólya said, “If you can’t solve a problem, then there is an easier problem you can solve: find it.” That is, enter the first dark room with a sigh, and work from there.

What this means in practice, and what we do below, is that first, instead of trying to launch fancy “exploratory” attacks on the full RC4, we focus on the simpler task of retrieving the key from the KSA-generated state; and second, we turn our attention to toy versions of the cipher, where a statistical attack is trivial to launch and if you only cared about “a break no matter how” there would simply be no challenge. These toy versions are the simplest versions of RC4 that we could find where the relationship between the generated KSA state and the original key is not degenerate and doesn’t have an immediate closed-form solution — so that we actually have to do some thinking, which is the point of the exercise. Below we tackle two toy RC4 variants, which according to our internal scheme we have designated “RC-2zz” and “RC-2pz” (see addendum if you really care where we got these names).

Disclaimer

The mathematical claims below are preliminary and subject to possible modifications as errata is discovered. This is a diplomatic way of saying that we have programmatically verified that these results are not a hallucination and the described attacks do in fact apply to several test cases, but if you find out that actually in claim 7.5.1 we’ve neglected to mention that the function \(g^{\star}\) is not well-defined unless \(g^{\star}(0)\) is even or some such, please let us know.

Variant 1: RC-2zz

This is one of the simplest RC-like KSAs that do not allow a completely trivial recovery of the key from the generated state:

for i in range(256): S[i] = i i,j=(0,0) for ctr in range(256): j = k[ctr%len(k)] S[i], S[j] = S[j], S[i]

The initial state is obtained by swapping 0 with the key’s 0th byte, then 0 again with the 1st byte, then 0 again with the 2nd byte, and so on, where upon running out of key bytes we loop back again to the 0th byte. Now, a good, old-fashioned attack that doesn’t care about pretty mathematics, and simply goes for the jugular, would make short work of this KSA. To see why, consider the simple, mediocre key, key. The algorithm proceeds by swapping 0 and 107 (k), then 0 and 101 (e), then 0 and 121 (y), then 0 and 107 again, and so forth and so on until 256 swaps are performed. When the initial state is fully generated, all elements that aren’t 0, 107, 101 or 121 have not been swapped away — we will have for example \(S[50]=50\) and \(S[201]=201\). A simple scan of the final permutation will typically make it obvious which bytes other than 0 were a part of the key, and which were not. From there, we can start a brute-force search for keys composed of these three bytes and optionally 0, with possible repetition. There are infinitely many such keys, but only \(4! = 24\) possible permutations, so it won’t be long until we hit a valid key (that is, one that generates the desired permutation) by pure chance. What all this means is that using brute force and statistics, there is barely anything to discuss here.

Now, let us deliberately complicate our own lives, and engage with the KSA in terms of its mathematical structure. To do that, we must first learn the mathematical language used to describe and manipulate the basic building blocks that the KSA uses — swaps and permutations. Permutations are usually studied in Group Theory 101; Group Theory studies invertible operations, and it turns out that A. swapping stuff around is an invertible operation, and B. whenever you perform an invertible operation, you are, actually, swapping stuff around (this latter fact is called “Cayley’s Theorem“). In this context, you might see a permutation \(S\) described like so: \[(0\ 19\ 5)(7\ 4)\]

This is called putting the permutation in “cyclic form”; the example above specifies \(S[0]=19, S[19]=5, S[5]=0, S[7]=4, S[4]=7\) (and \(S[j]=j\) for all other \(j\)). Take a minute to convince yourself that any permutation on a finite amount of elements can be put in cyclic form (simply write \((0, S[0], S[S[0]], \ldots)\) until you are back to 0 again, then start with the smallest element that hasn’t yet appeared (let’s say it’s 7) and write again: \((7, S[7], S[S[7]], \ldots)\); keep adding these cycles until you are out of elements).

A simple swap of two elements \(a\) and \(b\) (a “transposition”) is also a permutation, and its cyclic form is \((a\ b)\). It’s handy to define “multiplication” for permutations, such that we can write \(p_1\ p_2\) and mean “execute \(p_2\) and then \(p_1\)” (if this seems backwards to you, just recall that in algebra \(f(g(x))\) also means “apply \(g\) first and \(f\) second”; the definition here works the exact same way, and for the same reason. If this still seems backwards to you, we apologize). Armed with this notation, we can now explicitly write the permutation state generated by the KSA above: \[(0,k[255])(0,k[254](0,k[253])\ldots(0,k[0]) = \prod_{i=0}^{255}(0,k[255-i])\]

Where, again, the transpositions are applied from right to left, and \(k[i]\) is actually shorthand for “\(k\) at the index \(i_{\text{mod} |k|}\)“. This is abuse of notation, but writing out the modulo operation every time is clunky, and in this case there is little danger of confusion.

Using the above KSA, the key soloing generates a permutation with one cycle of length 4, and another of length 2, where the element 0 appears in neither. We will write this out in more concise notation: <1||{4: 1, 2: 1}>, meaning: the element 0 appears in a cycle of length 1; of cycles of length 4, there are 1; of cycles of length 2, there are 1; all other cycles are of size 1. Cycles of size 1 are just elements that the permutation “doesn’t touch” — you could say that they stay put, or that they are fixed points of the permutation, or that they satisfy \(S[x]=x\), whichever is your favorite way to see it.

For this KSA, it is particularly useful to look not at the generated permutation itself, but instead at its “general shape” — the number of cycles of each length involved, and the position of 0 within them (we will call this sum of information the permutation’s “signature”). We can obtain a variety of different permutation signatures from different keys. The key rarer gives a permutation with <2||{2:2}>; abacus gives <1||{4:1,2:1}>; and calmly gives <3||{3:2}>. Actually, boldly also gives <3||{3:2}>, as does alibis and canine. If you squint at this “coincidence” hard enough, a general intuitive suspicion might start to form in your mind, which can be expressed either formally or clearly. Let us first express it formally:

DEFINITION 1.1 Two keys \(k_1, k_2\) are said to be 2zz-conjugate if there exists some permutation \(\psi\) such that \(\psi(0)=0\) and \(\psi(k_1)=k_2\). Permutations are applied to keys in the obvious way, e.g. applying \((krp)(ne)\) to key yields rny.

DEFINITION 1.2 Two permutations \(p_1, p_2\) are said to be 2zz-conjugate if there exists some permutation \(\psi\) such that \(\psi(0)=0\) and \(\psi^{-1}p_2\psi = p_1\).

This isn’t something we completely made up: the concept of “conjugate permutations” exists in the wide and beautiful mathematical world outside of this article, somewhere in chapter 3 or so of the aforementioned Group Theory 101 course. But that other definition is slightly different from the one we have above: \(\psi(0)=0\) is not required there. This is basically because the 2zz-KSA in some important sense “cares” more than your usual permutation whether a number is 0 or not, seeing as the number 0 is hardcoded into the 2zz-KSA, so if we try to prove anything interesting about the 2zz-KSA using plain vanilla conjugacy, we will probably fail. To wit, \((0\ 1\ 2)\) and \((1\ 2\ 3)\) are perfectly conjugate by the normal, vanilla definition for conjugacy, but the RC-2zz keys required to generate them will have a completely different underlying structure. You might want to stop and convince yourself that if two permutations are 2zz-conjugate, then they are also plain vanilla conjugate per the definition from group theory 101 (in mathematical terms this makes 2zz-conjugacy what’s called a “refinement” of vanilla conjugacy). With our concept of 2zz-conjugacy we are able to make the following claim:

CLAIM 1.1 Let \(k\) be a key that generates a permutation \(p\). Then there exist a key \(k^\star\), a permutation \(p^\star\) and a permutation \(\psi\) with the following properties:

1. \(k\), \(k^\star\) are conjugates; \(p\), \(p^\star\) are also conjugates; and further, they are conjugates via the same \(\psi\).

2. \(k^\star\) is lexicographically minimal (i.e. would come first in a dictionary) among all conjugates of \(k\).

What this means, in plain English, is that we can look at a key such as sheep and immediately disregard the specific letters s, h, e, and p being used. The 2zz-KSA will proceed in exactly the same way if the key were, let’s say, pgjjq instead, because there exists \(\psi = (spq)(hg)(ej)\) with \(\psi(sheep)=pgjjq\) — the two keys are 2zz-conjugate. We take all keys conjugate to sheep and put them in a giant pile, which we’ll name abccd after the pile member that’s first alphabetically. Then it’s enough that we compute \(\text{2zz-KSA}(abccd)=p\) once; For every key \(k\) in that same conjugate pile, we can take a shortcut and instead of computing \(\text{2zz-KSA}(k)\), compute the correct \(\psi\) with \(\psi(abccd)=k\) then immediately obtain \(\psi^{-1}p\psi = \text{2zz-KSA}(k)\). This is not a whitepaper and so we won’t include a proof here, but you might become convinced of this if you follow the two permutation constructions for the two keys abccd and sheep step-by-step, and verify for yourself that at the cyclical structure level, they proceed the same way. At a very hand-wavey level, we might say that permutation-signature-wise, the 2zz-KSA doesn’t “care” about the actual value of each byte, only whether two bytes are identical and whether any of them are 0.

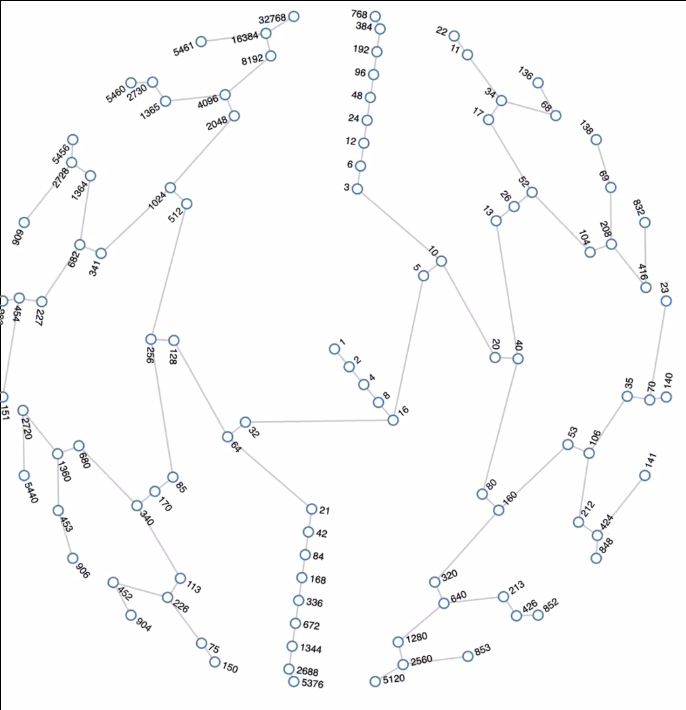

If that still doesn’t sound perfectly clear, we are happy to announce that in fact you can forget all about it and instead stare at the below pretty picture, which illustrates what happens after 4 steps of 2zz-KSA on a 4-byte key: \[\text{2zz-KSA-4-steps}({\color{green}{\bullet}}{\color{blue}{\bullet}}{\color{green}{\bullet}}{\color{red}{\bullet}})=(0{\color{red}{\bullet}})(0{\color{green}{\bullet}})(0{\color{blue}{\bullet}})(0{\color{green}{\bullet}})=(0{\color{red}{\bullet}})({\color{green}{\bullet}}{\color{blue}{\bullet}})\\ \text{2zz-KSA-4-steps}({\color{blue}{\bullet}}{\color{red}{\bullet}}{\color{blue}{\bullet}}{\color{green}{\bullet}})=(0{\color{green}{\bullet}})(0{\color{blue}{\bullet}})(0{\color{red}{\bullet}})(0{\color{blue}{\bullet}})=(0{\color{green}{\bullet}})({\color{blue}{\bullet}}{\color{red}{\bullet}})\\ \text{2zz-KSA-4-steps}({\color{red}{\bullet}}{\color{green}{\bullet}}{\color{red}{\bullet}}{\color{blue}{\bullet}})=(0{\color{blue}{\bullet}})(0{\color{red}{\bullet}})(0{\color{green}{\bullet}})(0{\color{red}{\bullet}})=(0{\color{blue}{\bullet}})({\color{red}{\bullet}}{\color{green}{\bullet}})\\ \text{2zz-KSA-4-steps}(1,2,1,3)=(03)(01)(02)(01) = (03)(12)\]

Assuming all colorful circles are nonzero and different from each other (and remember, transpositions are applied from right to left). That last row has the lexicographically minimal representative. Once you realize what is going on here and extend the result in your mind’s eye to the entire 256 iterations, you have understood everything so far, and should also not have trouble with the following convenient result:

CLAIM 1.2 Let \(k\) be a key that 2zz-generates a permutation \(p_1\), which is conjugate to \(p_2\) via \(\psi^{-1}p_2\psi = p_1\). Then \(\psi(k)\) generates \(p_2\).

What this means is that when looking for a key \(k\) that generates \(p\), it is enough to find a key that generates a permutation conjugate to \(p\). Again, a proof will not be included here, but the principle behind it is very similar to the one behind claim 1.1.

While constructing a feasible practical attack isn’t our main goal here, it’d do us well to consider it anyway so as to ground the discussion. So how can we use all the above philosophizing to our advantage when attempting to extract a key from an initial permutation state? We can construct a precomputed table that, for each permutation signature, records the shortest key that generates that permutation signature and is lexicographically minimal among its conjugates. This is best done by iterating over every possible lexicographically minimal representative key, running the KSA, noting the result and updating the value for the resulting signature, if none exists. So, for instance, we might run the KSA with the key abcdce, obtain the result <3||{3:2}> (see above with calmly, boldly, alibis) and update the table — <3||{3:2}> : abcdce, if we haven’t yet found a shorter key that generates a permutation with that signature.

Alas, we have to contend with the question of how large this table is, and how long it will take us to compute. We might try to bound the table size from above using the number of different permutation signatures — but the number of conjugacy classes of a permutation group with 256 elements is a 48-bit number. That means a database weighing Petabytes (\(10^{15}\) bytes), more on the “extremely determined nation state” side than the “bored student” side. Thankfully, we can do a lot without scratching this bound: after all, one brute-force of all \(n\)-bit minimal keys generates a database that can then extract all such keys from the initial permutation. So, we can limit ourselves to 128-bit (16-byte) minimal keys, and maybe get a better bound.

How much better? Given some natural number \(m\), how many keys are there of length \(m\) that are lexicographically minimal among their conjugates? To tackle this question we steeled our hearts, recalled all the wonderful tools of combinatorics at our disposal, and then promptly dismissed them and instead wrote a script to manually count the possibilities for \(m \leq 7\) which we then cross-referenced with the online encyclopedia of integer sequences. This search produced OEIS A024716, “\(a_n = \sum_{1 \leq j \leq i \leq n}S(i,j)\) where \(S(i,j)\) are Stirling numbers of the second kind”. The 16th entry in the sequence, corresponding to 16-byte (128-bit) keys, is a 33-bit number. That’s very feasible.

So that’s a theoretically possible attack with some forced mathematics, but the really interesting thing would be to go all the way, and find an algorithm that retrieves a short minimal key from the function signature, instead of relying on a precomputed table. But it’s not immediately clear how to go about that. In fact, we can posit a generalized “RC-2zz problem”: Given a conjugacy class \(C\) of a permutation group on \(n\) elements (possibly \(n > 256\) here) and an integer \(m\), does there exist a key \(k\) with size shorter than \(m\) that generates a permutation in \(C\) via a generalized RC-2zz KSA that runs for \(n\) iterations instead of a fixed \(256\)? If handed the correct key, it is straightforward to verify that a permutation is generated as desired, but how well can we mortals produce such a key from scratch? Is there a polynomial-time algorithm to do this, or maybe it is provably difficult?

As a point of interest, the insights above carry over even if we generalize the cipher a little to something like this:

for i in range(256):

S[i] = i

i,j=(p1,0)

for ctr in range(256):

j = p2 + k[ctr%len(k)]

S[i],S[j] = S[j],S[i]

With p1, p2 public parameters. Instead of transpositions of \((0 k_i)\) we now deal with transpositions of \((p_1 k_i+p_2)\). The above analysis remains applicable except that the “special element” being transposed with is now \(p_1\) instead of 0, and all key bytes are shifted by the constant \(p_2\).

Variant 2: RC-2pz

Now that we’re comfortable with permutations, cyclic forms and conjugacy, we can carefully move an inch up the ladder of complexity and address a slightly more challenging KSA. We call this variant RC-2pz, and the initialization goes as follows:

for i in range(256):

S[i] = i

i,j=(0,0)

for ctr in range(256):

j += k[ctr%len(k)] (mod 256)

S[i], S[j] = S[j], S[i]

The sharp-eyed among you will notice that this is almost RC-2zz. The one difference is that the assignment (=) in line 5 is now an addition assignment (+=). You might rightfully wonder how much this could complicate the analysis, but the answer is, unfortunately, “very”. For one thing, with this one innocent-seeming tweak all the convenient properties due to 2zz-conjugacy up and disappear. For example, the two keys k1 = [1, 14] and k2 = [1, 255] are 2zz-conjugate, but try to put both through the KSA and their behavior diverges very quickly — by the 2nd iteration k1 performs an actual swap \((0, 15)\) while k1 performs the “swap” \((0,0)\), a non-opeartion. Let’s do what comes naturally and define a new and more suitable notion of 2pz-conjugacy:

DEFINITION 2.2 Two keys \(K,L\) are said to be 2pz-conjugate if:

1. For all \(0<m<n\), \(\Sigma_{i=1}^m K_i = \Sigma_{i=1}^n K_i\) if and only if \(\Sigma_{i=1}^m L_i = \Sigma_{i=1}^n L_i\)

2. For all \(n>0\), \(\Sigma_{i=1}^n K_i = 0\) if and only if \(\Sigma_{i=1}^n L_i = 0\)

(1) means, plainly, that if the running sum of \(K\) is equal at indices \(m\) and \(n\), then the same is true for \(L\), and vice-versa (all sums are modulo 256 — this is the last time we’re going to explicitly write this down). A reader with an eye for analogies might suggest we could drop condition (2) for a more “vanilla flavor” 2pz-conjugacy. The result isn’t something you find in any mathematical textbook we’re aware of, but indeed it is closer to something you might find in one, hypothetically, and the further analysis below will mainly deal with this “weak” 2pz-conjugacy for the sake of simplicity. Still, if we try to extrapolate this definition to a full attack similar to the one launched on 2zz, we run into a wall quickly. First, the naive approach to check if two keys are conjugate was straightforward for 2zz, and here it has shot up in complexity (one might toy in analyzing exactly how intractable it is, but soon we will propose a less naive approach, anyway). Second, and more importantly, the number of conjugacy classes veritably explodes. Consider that [1], [2], [3], all the way to [255] are all 2zz-conjugate keys. In contrast, [1], [2] and [4] are each in a distinct 2pz-conjugacy class already!

All we did to move from 2zz to here is a tiny gradation up the ladder of complexity, replacing one = with a +=. We didn’t even introduce any feedback that makes the swaps themselves dependent on the current state (such as j += S[i]). And yet our entire approach from before is basically useless now. All we have is maybe some better intuition about how to approach the problem. Imagine attacking the actual real-life RC4 KSA, which sits at the top of a long tower of such complexity gradations and has j = j + S[i] + k[i%len(k)], with an auto-increment of i.

Now, and this is the real beauty of the thing, if we stop forcing mathematics into it and allow an attacker to “eye it” and use statistical reasoning, RC-2pz is laughably easy to break. This is because one property of swaps and permutations when put in cyclical form is that if \(x_0,x_1,x_2,x_3,\ldots,x_n\) are all different then \[ (x_0 x_n)\ldots(x_0 x_3)(x_0 x_2)(x_0 x_1) = (x_0 x_1 x_2 x_3 \ldots x_n)\]

This implies that if we look at the cycles of the final generated permutation, usually a lot of them are going to contain sequences of the form \((C,C+k_j,C+k_j+k_{j+1}, C+k_j+k_{j+1}+k_{j+2}\ldots)\), and thus if we take the sequence of differences from one term in the cycle to the next we will run into a lot of pretty key bytes sitting in a row waiting to be harvested. Allowing for a bit of trial and error, it’s not very complicated to proceed from there. Alas, we’re not here to shout “boom, broken”, we are here to handicap ourselves, disallow such vulgar methods, and hopefully enhance our mathematical understanding.

So what can we do? We’ve convinced ourselves that enumerating all values \(m,n\) where the running key sum will repeat \(\sum_{i=1}^{m}K_i = \sum_{i=1}^{n}K_i)\) isn’t a good enough approach. Perhaps we can make progress by having a better understanding of how these “repeats” behave. We can start by convincing ourselves of the following claim:

CLAIM 2.1 let \(K\) be an RC-2pz key and denote \(\sigma(m,n) := \sum_{i=m}^{n-1} K_i\). Note that this implies the first swap will be \((0,\sigma(0,1))\), the second \((0,\sigma(0,2))\), and so on. Then there exists a function \(\varphi_K: \mathbb{N}_{|k|} \rightarrow \mathbb{N} \cup \{\infty\}\) such that for all \(i > 0\), we have \(\sigma(i,i+\varphi_K(i_{\text{mod} |k|})) = 0\), and further \(\varphi_K(i_{\text{mod} |k|})\) is minimal with this property. The case \(\varphi_K(i) = \infty\) should be understood to mean that \(\sigma(i,i+j) \neq \sigma(i)\) for all \(j>0\). For convenience let us generally define a binary function also named \(\varphi\) with \(\varphi(K,i) = \varphi_K(i)\).

This is less complicated than it sounds. All it is saying is that after the \(i\)-th iteration of the KSA, it is enough to consider the value of \(i_{\mod k}\) to understand how many iterations the KSA will go through until the running sum will reach the same value again (it might help to note that \(\sigma(0,i)\) is the variable j in the initial code listing in this section). Again, we won’t do any proofs here, but as far as proofs go, this one is not so complicated. The value of \(\sigma(0,i)\) will repeat once the sum of key bytes from that point on is exactly \(0_{\text{mod} 256}\), and if you sum bytes starting from a given position in the key (and looping around once you run out of key), this determines the exact sequence of bytes participating in the sum, which in turn determines how many of them must be collected until the sum hits 0.

In fact, \(\varphi(K,i)\) is not an arbitrary number. We now take a deep breath and state

CLAIM 2.2 Let \(K\) an RC-2pz key. Fix some \(0 \leq i \leq |K|\), and write \(\sigma(0,|K|)=q2^n\) with \(q\) odd. Then there exists some \(0 \leq j \leq |K|\) such that \(2^n\) divides \(\sigma(i+j,i+|K|)\), and \(\varphi(K,i) = |K|\frac{\sigma(i+j,i+|K|)}{\sigma(0,|K|)}-(|K|-j)\), with the division performed modulo 256 taking the smallest nonzero possible quotient (so for example \(\frac{28}{27} = 27\), even though we have \(20m = 28\) not just for \(m=27\) but also for \(m=91,155,219\)). Conversely, any value of \(0 < j < |K|\) with \(\sigma(i+j,i+|K|)\) divisible by \(2^n\), when evaluated in the formula above, will result in some \(l\) with \(\sigma(i,i+l)=0\), though it is not guaranteed to be minimal.

The above merry jumble of symbols probably requires another illustration involving colorful circles, which we provide below. Suppose for example that for the key \(K=({\color{blue}{\bullet}}{\color{red}{\bullet}}{\color{green}{\bullet}})\) we have \(\sigma(i,i+l)=0\) with \(i=1,l=19\). This implies that if we sum the first 19 key elements, the result is 0: \[\text{Sum}({\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet})=0\]

By simple addition to both the right-hand side and the left-hand side, this implies: \[\text{Sum}({\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}{\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet})=\text{Sum}({\color{green} \bullet}{\color{blue} \bullet})\]

Now the left-hand side is just a multiple of the sum of all key bytes: \[7 \times \text{Sum}({\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}) = \text{Sum}({\color{green} \bullet}{\color{blue} \bullet})\]

And more generally, by the same logic, every instance of \(\sigma(i,i+l)=0\) corresponds to some equation \(m \times \text{Sum}({\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet})=\text{Sum}(\text{subsequence}({\color{red} \bullet}{\color{green} \bullet}{\color{blue} \bullet}))\) with an actual solution \(m\) (and by reasoning backwards, it is not too difficult to see that every such equation with an actual solution \(m\) produces an instance of \(\sigma(i,i+l)=0\)). Subsequences are allowed to loop around the end of the key and back to the beginning; actually that funny \(\sigma(i+j,i+|K|)\) term that appears to come out of nowhere is just some machinery to express that.

All that’s left is to apply a basic fact of number theory, which is that the equation \(ax=b\) has solutions modulo \(M\) if and only if \(\text{gcd}(a,M)\) divides \(b\). Claim 2.2 then immediately results, including a guarantee that the modulo-256 division has a valid result. That entire unwieldy-looking formula for \(\varphi(|K|,i)\) is just bean counting to figure out the exact number of pretty circles implied by the relationship above, and the decomposition of the key bytes sum into \(q2^n\) with \(q\) odd is just an artifact of computing its \(\text{gcd}\) with 256.

If you don’t find this proof by hand-waving convincing enough, we offer some additional hand-waving in the form of an honest-to-god example of Claim 2.2 at work. This is an RC-2pz key: \[(1,2,7)\]

The \(\varphi\) values for this key are \((384,154,230)\) (we have verified this manually). Here \(\sigma(0,|K|) = 1+2+7 = 10\), which decomposes to \(q2^n\) with \(q=5\) and \(n=1\). For \(i=0\), it so happens the correct value of \(j\) is \(3\). This results in \(\sigma(i+j,i+|K|)=0\), which is divisible by \(2^n=2\). So, plugging it into the formula in claim 2.2 should produce some \(l\) with \(\sigma(0,0+l)=0\). Doing so is pretty straightforward, except for the tricky term \(\frac{0}{10}\); while in usual number-land this is simply 0, the division modulo \(256\) (taking the smallest nonzero solution) gives the result \(128\). We multiply that result by the key length (3), then subtract the term \(|K|-j\) which in this case is 0, since we picked \(j=|K|\). \(3 \times 128 – 0 = 384\), the correct value for \(\varphi(K,0)\).

Similarly for \(i=1\), the solution is obtained by choosing \(j=1\). The resulting value for \(\sigma(i+j,i+|K|)\) is \(\sigma(2,4)=8\). By our definition \(\frac{8}{10} = 52\). With \(|K|=3\) and \(|K|-j=2\), using the formula above we obtain the correct value \(\varphi(K,1) = 154\) (that is, we have \(\sigma(1,1+154)=0\), and 154 is minimal with this property).

We can now proceed to

CLAIM 2.3 Two keys are weakly 2pz-conjugate if and only if the infinite extensions of their \(\varphi\) values are equal, where the infinite extension of \(a_0, a_1, \ldots, a_n\) is defined to be \((a_{i \text{mod} n)})_{i=0}^\infty\).

This is, again, not so complicated. The only reason we had to introduce this whole “infinite extension” business is that someone could have said “ah-ha! I have a counter-example: \((9,15)\) and \((9,15,9,15)\) are 2pz-conjugate, but one of them has a \(\varphi\)-value sequence of length two, and the other of length four!”. So if you are upset about claim 2.3 not just ending with the word “equal”, blame that person.

At this point we are juggling 3 different sets of properties that can encode some information about a key. At the top is the key itself which, obviously, encodes the entire information about the key. Farther down – the key’s \(\varphi\)-values. Even farther down from that — the structure of the resulting permutation after being put through the RC-2pz KSA. In this list of points A, B and C, we’ve just seen how to move from A to B, and it should be straightforward to move from A to C efficiently by simply running the RC-2pz KSA on the key. But what about moving from B to C, or backwards, from C to B or from B to A? If we could do all of these, it would constitute a full attack on RC-2pz: look at a generated permutation, fish out a sequence of \(\varphi\)-values that generates it, then investigate that sequence to recover the key.

Happily, the climb from B to A is possible (which immediately hands us B to C as well). Given that seeing is believing, recall the list of \(\varphi\)-values we just used \(\varphi(K)=(384,153,230)\) that correspond to the key \(K=(1,2,7)\) and observe the following sleight of hand: \[1\times\left(\frac{384-0}{3}+0\right)+2\times\left(\frac{384-0}{3}+0\right)+7\times\left(\frac{384-0}{3}+0\right)=0\\

1\times\left(\frac{154-1}{3}+0\right)+2\times\left(\frac{154-1}{3}+1\right)+7\times\left(\frac{154-1}{3}+0\right)=0\\

1\times\left(\frac{230-2}{3}+1\right)+2\times\left(\frac{230-2}{3}+0\right)+7\times\left(\frac{230-2}{3}+1\right)=0\]

Or, for the linear-algebraically minded among you: \[\left(\frac{1}{3}\left(\begin{pmatrix} 384 & 384 & 384 \\ 154 & 154 & 154 \\ 230 & 230 & 230 \end{pmatrix}-\begin{pmatrix} 0 & 0 & 0 \\ 1 & 1 & 1 \\ 2 & 2 & 2 \end{pmatrix}\right)+\begin{pmatrix} 0 & 0 & 0 \\ 0 & 1 & 0 \\ 1 & 0 & 1 \end{pmatrix}\right) \begin{pmatrix}1 \\ 2 \\ 7 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}\]

The reason for this is straightforward enough that we don’t need to reach for the colorful circles. The 1st \(\varphi\)-value of the key being 154 means, by definition, that \(\underbrace{k_1 + k_2 + k_0 + k_1 + k_2 + \ldots + k_0}_{\text{154 terms}} = 0\). That’s \(\frac{154-1}{3}\) times \(k_0\), \(\frac{154-1}{3}\) times \(k_1\) and \(\frac{154-1}{3}\) times \(k_2\), which gives us the first equation. Similarly for the two other ones. So, given the \(\varphi\) values, the problem of recovering possible original keys is reduced to the known-to-be-manageable problem of solving the above system of equations (equivalently, computing the kernel of the matrix in the giant parentheses on the left-hand side, which equals \(\begin{pmatrix} 128 & 128 & 128 \\ 51 & 52 & 51 \\ 77 & 76 & 77\end{pmatrix}\)).

What about moving from C to B — that is, given a permutation signature, recovering a possible \(\varphi\)-value sequence? It’s not difficult to work out some really simple cases by hand; for example, for a 1-byte key, the signature of the 2pz-KSA generated state can be determined directly from the GCD of the byte with 256:

| \(\gcd(i,256)\) | signature of 2pz-KSA([i]) |

|---|---|

| \(1\) | <256||{256: 1}> |

| \(2\) | <64||{64: 2}> |

| \(4\) | <16||{16: 4}> |

| \(8\) | <4||{4: 8}> |

| \(\geq 16\) | <1||{}> |

But as the key size grows this relationship gets very complicated, very quickly. Here are some choice values for 3 bytes of key:

| \(k\) | signature of 2pz-KSA(k) |

|---|---|

| \((13, 3, 4)\) | <9||{9: 1, 23: 1, 48: 1, 24: 2}> |

| \((11, 7, 6)\) | <59||{59: 1, 37: 1}> |

| \((10, 13, 11)\) | <23||{23: 1, 83: 1, 54: 1, 53: 1}> |

| \((9, 12, 2)\) | <8||{8: 1, 64: 1, 39: 1, 26: 2}> |

As with the analogous problem for RC-2zz, this is probably the toughest nut to emerge out of the entire approach, which we do not have a closed-form solution for and can only route around with precomputed tables and other such dishonorable kludges. Still, we were pleasantly surprised to learn that the \(\varphi\) values that determine the proper concept of 2pz-conjugacy are so well-behaved with respect to the key, and we’re more excited than frustrated to have stumbled upon this interesting problem.

Possible Future Work

Given that the angle of making an attack work with some probability on the full RC4 using whatever method is relatively well-covered by prior work, we are naturally drawn to instead linger on the combinatorial questions left to us even by the above analysis of very weak RC4 variants. “Given a permutation signature, recover a valid conjugacy class representative (for RC-2zz) / \(\varphi\)-value sequence (for RC-2pz) that will generate it via the corresponding KSA” — is this problem tractable, and by what means? Is there an even more closed form for deriving RC-2pz \(\varphi\)-values from the key, instead of trying all \(O(|K|^2)\) possible values of two indices and seeing which one produce the smallest solutions? We hope that we have managed to show some of the magic of permutation groups, and stimulate some curiosity about very weak RC4 variants. Now, excuse us, we’re off to cleanse our palate with some CTF exercises where no one cares how “properly motivated” your solution is, and getting the flag is the only thing that matters.

Addendum: Naming Scheme of RC-Variants

This is a short, informal explanation for readers who are comfortable with linear algebra and are really curious where we got “RC-2pz” from. The “2” is the easiest to explain: in RC4 itself and in all variants explored above, all manipulations on the permutation state are performed using 2 variables, i and j, but in principle one could use 3 or more; this notation is there to allow for that. p and z stand for matrix types out of the following table:

| Letter | Matrix type |

| z | Zero |

| p | At most one nonzero element, equal to 1 |

| q | At most one nonzero element |

| b | All nonzero elements equal to 1 |

| i | Identity |

| c | Scalar |

| d | Diagonal |

| f | Any |

Denote the current permutation state \(S\) and the current state vector \(v\). Each iteration of the KSA, a new state vector is computed as a sum of affine transforms – one on \(v\), one on \(S(v)\) (applying entry-wise), one on \(S^2(v)\), et cetera, though in the text above we only get far as an affine transform on \(v\) itself. This transform requires a matrix and a vector to express. For instance, in RC-2pz, if the previous state vector is \(v\), the next state vector is \(Av+w+\begin{pmatrix} 0 \ k \end{pmatrix}\) where \(A,w\) are known public cipher parameters — \(A\) has at most nonzero element, which equals 1, and \(w\) is the zero vector. This defines a family of ciphers that includes RC-2pz as exposited in the main text above, as well as some more trivial variants that we didn’t want to mention in the main text to avoid needless complication.