Key Takeaways

- We identify, and give concrete examples of, natural strengths and typical challenges OpenAI’s GPT-4 (henceforth ‘GPT’) faces when reasoning in the domain of malware analysis.

- We drill down into the source and the shape of the ‘ceiling’ hampering the application of GPT to several malware analyst tasks.

- We introduce hacks and mitigations to overcome this ‘ceiling’ and expand GPT’s ability to reason in this domain.

- As a proof of concept, we show a heavily engineered prompt that improves GPT’s ability to correctly guide an analyst performing triage on the tested binary samples.

Introduction

GPT technology is the current tech cycle’s veritable miracle. The skeptics insist that it just has the appearance of intelligence, and try to cast it as ‘just the latest buzzword’, making snide comparisons to NFTs and blockchains. But from intimate experience, we can say these comparisons are deeply unfair — we’ve had projects that should have taken a week be done in a few hours with GPT’s assistance, a feat that no NFT has ever achieved that we’re aware of. On the other side of the fence, one does sometimes encounter “AI mania”, which views GPT as essentially omnipotent magic that can be applied to unsolved problems to render them solved, and is amazed that the great AI uprising has somehow not happened yet.

The truth about GPT technology and its capabilities is more complex and nuanced than any of these simplified views, and we figured that the best way to get acquainted with it would be via a practical tour. We pitted OpenAI’s GPT-4 (henceforth “GPT”) against the daunting, sometimes unfair, task of dealing with a piece of malware. The first task was triage: given a binary, identify it and deliver a benign or malicious verdict. As it turned out, seeing GPT deal with this apparently simple task already produced a wealth of insight regarding its ability to reason in this domain. This text focuses on the insights from observing GPT grappling with the triage task, though highlights from the other tasks do occasionally appear. Quotes are verbatim, except for some faithful reconstructions which are marked with an asterisk.

It is important to understand that the below is not an objective, ground-up technical analysis of the capabilities of GPT. Rather, it is a summary of our impressions when interacting with GPT and repeatedly observing how it responds to the specific tasks we had handed it. These tests were conducted using GPT-4 versions that were deployed for use in ChatGPT during July and August of 2023.

The Triage Task

We posed the triage task to GPT in the following manner:

You play the role of a security analyst who has just been handed a VirusTotal report for an executable file. Highlights of the report are given below. Make note of them and confirm you have looked through these report highlights.

We asked GPT to deliver its conclusions and verdict on two reports:

- fb136c8360d1a5ab80f61109c55c5a788aa1d8796d1e75aca8c1a762b598d3f4 (GandCrab Ransomware)

- fc45eb5c9d3f89cb059212e00512ec0e6c47c1bdf12842256ceda5d4f1371bd5 (APSDaemon.exe, Apple push executable, benign in itself, used for sideloading malicious DLLs, e.g., in Coinloader infections)

Below we list how GPT fared when dealing with this task, and some of the others — where it displayed its natural strengths and where we ran into challenges that had to be creatively worked around, with in-line examples where warranted. In addition to examples straight from the malware tasks, we’ve added some examples from other prompts and tasks that we have tested GPT with, that we feel demonstrate some points particularly well.

GPT’s Natural Strengths

Sentence Completion

GPT is a totally verbal thinker. Its entire power is predicated on an outstanding capability to decide what’s the most appropriate word to put, and where, in its response. This is one of the most important things to understand about GPT — a lot of the behavior that we will cover later is, in a sense, downstream from this one property.

One of the immediate implications of this is that GPT has access to a huge latent cheat sheet. If someone, at any point in history, has answered the actual question being asked and this answer has made it into GPT’s training data, GPT exhibits an uncanny ability to reproduce the answer. For example, in response to the GandCrab report, GPT correctly recalls that:

The executable runs the “wmic.exe” with the “shadowcopy delete” command, which is often used by ransomware to delete Volume Shadow Copies. Volume Shadow Copy is a service in Windows that automatically creates backup copies or snapshots of computer files or volumes, even when they are in use. Deleting shadow copies is a common tactic used by ransomware to prevent victims from recovering encrypted files without paying the ransom.

It is important to note that in some situations, GPT will fail to recall an answer that looks like it “should” be on this cheat sheet. An example of this, taken from outside the realm of malware analysis, can be seen below:

USER: Can you find an example of [a proof that P=NP implies EXP=NEXP] in the literature and cite it verbatim?

GPT: [consults Google scholar] I apologize for the confusion earlier. Upon further reflection, I realize that I made a mistake in my explanation. The statement “If P = NP then EXP = NEXP” is not a standard result in complexity theory, and I am not aware of a proof or counterexample in the literature.

The proof does exist, but GPT first ran a Google Scholar search and could not find the proof in the first page of results. Sentence completion had trouble recovering from this error. It’s easy to speculate how this happened: if one tries to complete the sentence “Looking at a list of Google Scholar search results for ‘if P=NP then EXP=NEXP’, I have found: None. Therefore…”, GPT’s response comes almost naturally.

Big Picture Summaries

Through its web of word associations, GPT has a keen grasp of grammar and of the difference between key and ancillary facts. As a result, one of the tasks GPT can be trusted to perform most reliably is producing “a summary of the big picture” when given input that is too large for a human to digest comfortably. For example, when given part of a very lengthy API call log produced by a piece of malware and asked to summarize the log, GPT produced the useful output below:

The malware seems to be heavily interacting with Windows API and doing various operations such as file operations, memory management, privilege escalation, loading libraries, and notably cryptography-related operations.

A few notable points:

- File Operations: The malware interacts with various file-related functions such as CreateFileA, CreateFileW, ReadFile, SetFilePointer, FindFirstFileW, and FindNextFileW. These might be used to read/write/modify files on the infected system.

- Loading Libraries: Various DLL libraries such as kernel32.dll, msvcrt.dll, ntdll.dll, bcrypt.dll, user32.dll, and advapi32.dll are being loaded. These libraries contain functions that enable the malware to interact with the system.

[..and so on..]

We later discuss one of the main challenges GPT runs into when performing this task, and how to overcome that limitation (see below under ‘Memory Window Drift’).

Logical Reasoning

GPT can leverage its underlying capacity of sentence completion to perform surprising feats of logical reasoning. Consider the following prompt.

previous state of working memory:

BEGIN WORKING MEMORY

You are computing a function f on an input X. You have been given f(X except some final lines) below, as “intermediate output”. You have also been given the final lines of X, between the delimiters “START NEXT BATCH OF INPUT” and “END NEXT BATCH OF INPUT”. When computing the new state of working memory, copy the previous working memory verbatim, starting with “BEGIN WORKING MEMORY” and ending with “END WORKING MEMORY.” Change only working memory that appears under “intermediate output”, by replacing it with your computed value of f(X), which cannot exceed a 1,000 words.

The function: f(X) = “sum”

Intermediate output: 2

END WORKING MEMORY

START NEXT BATCH OF INPUT

Eleven.

END NEXT BATCH OF INPUT

Please output, with no introductions, addendums or ceremony, the next state of the working memory after processing the above lines of input.

Plenty of humans would fail to process this request, but GPT responds correctly:

BEGIN WORKING MEMORY

You are computing a function f on an input X. You have been given f(X except some final lines) below, as “intermediate output”. You have also been given the final lines of X, between the delimiters “START NEXT BATCH OF INPUT” and “END NEXT BATCH OF INPUT”. When computing the new state of working memory, copy the previous working memory verbatim, starting with “BEGIN WORKING MEMORY” and ending with “END WORKING MEMORY.” Change only working memory that appears under “intermediate output”, by replacing it with your computed value of f(X), which cannot exceed a 1,000 words.

The function: f(X) = “sum”

Intermediate output: 13

END WORKING MEMORY

This ability should be used with care. If one overburdens GPT with too many arcane requirements and verbose conditions, it has been our experience that this magical ability begins to break down: some requirements get misunderstood, and others outright forgotten (see below, under ‘Logical Reasoning Ceiling’).

Keep this specific example in mind, as we will later use a very similar prompt as a bootstrap to overcome one of the main challenges in using GPT to process long inputs.

Challenges in Practical GPT Application

While GPT is an artificial construct, many of the challenges one encounters when applying GPT to the domain of malware analysis seem strangely human on GPT’s part. We collected many examples of GPT running into these challenges while attempting to deal with some task, and tried as much as possible to sort them into larger, more general categories. The result was the below list of 6 general principal obstacles:

- Memory Window Drift

- Gap between Knowledge and Action

- Logical Reasoning Ceiling

- Detachment from Expertise

- Goal Orientation

- Spatial Blindness

A lot of the individual examples are gestalt expressions of more than one category. Still, in the interest of readability, we decided to place examples in-line at specific sections where they belong particularly well. We give a detailed description of each of these categories below.

Memory Window Drift

GPT operates by breaking texts down into “tokens”. Some words are processed as exactly one token, and some are broken down into two or more; the specifics here aren’t crucial for the discussion we want to have. When answering prompts, GPT looks back at a bounded “window” containing a fixed number of recent tokens. The exact number varies with the model: standard GPT-4 has a window of 8k tokens, and OpenAI offers a separate model with a 32k window. Either way, this can quickly become a limiting factor when dealing with large input.

One must take special care preparing for the moment where the original start of the conversation leaves the token window. The beginning of the conversation is probably where the task instructions were first laid out; once the window leaves these instructions behind, GPT’s ability to adhere to them becomes much more limited. From that point on, GPT is effectively going off a second-hand task description, implied by the prompts and responses still in the window. Once an aspect of the task is no longer implied by anything in the window, it is simply lost.
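To make the drift concrete, here is a minimal sketch of a bounded window sliding over a conversation. It rests on loud assumptions: tokens are approximated by whitespace-separated words, whole messages are kept or dropped, and `visible_messages` is a hypothetical helper. Real tokenization and window handling differ; only the shape of the effect is illustrated.

```python
def visible_messages(messages, window_tokens):
    """Return the most recent messages that fit into a bounded token window.

    Assumption: tokens are approximated by whitespace-separated words.
    Real tokenizers split text differently.
    """
    kept = []
    budget = window_tokens
    # Walk backwards from the newest message, keeping whole messages while they fit.
    for message in reversed(messages):
        cost = len(message.split())
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return list(reversed(kept))


conversation = [
    "INSTRUCTIONS: you are a malware analyst, summarize each batch of the API log",
    "log batch one " + "call " * 40,
    "summary of batch one",
    "log batch two " + "call " * 40,
    "summary of batch two",
    "log batch three " + "call " * 40,
]

window = visible_messages(conversation, window_tokens=100)
instructions_visible = any(m.startswith("INSTRUCTIONS") for m in window)
print(instructions_visible)  # False: the task description has left the window
```

With a budget of 100 approximate tokens, only the last four messages survive, and none of them carries the original instructions.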

This is a very blunt and apparent stumbling block. We’ve had colleagues testify that they tried applying GPT to their favorite problem, only to run into this exact difficulty immediately. It is not surprising, then, that the exact same thing happened during our testing. We had GPT analyze a log of API calls produced by a program that had been instrumented via TinyTracer, resulting in the following dialogue, which we have abridged for brevity.

USER: You are a malware analyst who has been given an API call log of a certain strain of malware. This log has been generated using the tool ‘tinytracer’. The first 100 or so lines of the log are below. Please read through the log and confirm that you have read through it.

[100 lines of API call log, starting with: 8f4d;section: [.text] 968f;kernel32.HeapCreate a047;kernel32.GetModuleHandleW a069;kernel32.GetProcAddress GetProcAddress: Arg[0] = ptr 0x77100000 -> {MZ} Arg[1] = ptr 0x10013c24 -> “FlsAlloc” …]

GPT: I have read through the provided API call log, which appears to show the activities of a suspected malware. Here’s a brief summary: [proceeds to adequately summarize the events in the log so far]

USER: [100 additional lines of API call log]

GPT: [adequately summarizes the events in the 100 additional lines]

USER: [100 additional lines of API call log]

GPT: It appears this log comes from a system monitoring tool or a dynamic binary analysis tool. It is listing the different Windows API calls made by a certain application or process…

From GPT’s point of view, it sees four hundred lines of API log lines interspersed with short summaries. To some extent it can infer what it is expected to do, but the original instructions in the first prompt have been lost.

Mitigation

You will be happy to know that memory window drift can be mitigated. That doesn’t mean the effect disappears entirely, but it becomes more similar to what you might encounter when conversing with a human. GPT will examine conversation still inside the window and respond to it in high fidelity, while keeping a stripped-down compressed memory of the past.

How is this magic trick pulled off? Recall that earlier, we saw how GPT-4 specifically can perform powerful feats of logical reasoning; in particular, for some function f, it can take f, f(X) and Y as inputs and use them to compute f(X|Y), where X|Y denotes X followed by Y. This is conceptually similar to what is known, in mathematics, as a ‘proof by induction’. You can make use of it yourself by copy-pasting the template below and tweaking it to your needs.

You’re computing f(X). You’re given f(X except the final few lines) (henceforth: “BASE”) and the final few lines of X (henceforth: “STEP”).

f(X) is defined as: X is an initial segment of a play, starting with the first line. f(X) is a summary of X.

BEGIN BASE

In Verona, two noble families, the Montagues and the Capulets, have an old feud. The play will focus on a tragic love story between two young individuals from these families, whose deaths will ultimately reconcile the feuding households. In a public place in Verona, Sampson and Gregory, from the Capulet house, discuss their disdain for the Montagues. Their conversation involves playful banter about overcoming the Montagues in battle and affections toward women. The two prepare for a confrontation as members of the Montague house approach.

END BASE

BEGIN STEP

ACT I

SAMPSON

My naked weapon is out: quarrel, I will back thee.

[…etc, etc…]

BENVOLIO

I do but keep the peace: put up thy sword,

Or manage it to part these men with me.

END STEP

Please output the EXACT VALUE of f(X) per the definition. Do not lead with any ars poetical context (“Considering the text, we must identify crucial plot points that are important to summarize…” or even “here’s the output:”) and do not conclude with any ars poetical context (“awaiting further input”). Do not mention the phrase “f(X)”. *

The new BASE returned by GPT can be used in the next prompt, with the STEP set to the next part of the input, to obtain an even more updated BASE. The final output is the BASE after it has been sequentially updated with every STEP. You can use this method to process input of arbitrary length, limited only by your quota and by a well-chosen, well-worded f(X). We have used this method (in conjunction with other nudges) to get GPT to successfully complete the API log summarization task.

Two quick tips:

- When processing the first part of the input, set the BASE to “none so far”.

- Sometimes a more difficult, more detailed f(X) ‘paradoxically’ results in an easier task for GPT, because it makes the transition f(X) -> f(X|Y) easier (this is called strengthening the induction hypothesis).
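The BASE/STEP loop described above can be sketched in a few lines. This is an illustration under stated assumptions, not the exact harness used in our tests: `ask_model` is a hypothetical callable that sends a prompt to the model and returns its reply, the template is an abbreviated version of the one shown earlier, and batching by line count is just one possible way to cut the input into STEPs.

```python
PROMPT_TEMPLATE = """You're computing f(X). You're given f(X except the final few lines) (henceforth: "BASE") and the final few lines of X (henceforth: "STEP").

f(X) is defined as: {definition}

BEGIN BASE

{base}

END BASE

BEGIN STEP

{step}

END STEP

Please output the EXACT VALUE of f(X) per the definition."""


def chunk_lines(lines, lines_per_step):
    """Split the input into consecutive STEP-sized batches of lines."""
    for start in range(0, len(lines), lines_per_step):
        yield "\n".join(lines[start:start + lines_per_step])


def fold_input(lines, definition, ask_model, lines_per_step=100):
    """Sequentially update BASE with every STEP and return the final BASE.

    `ask_model` is a hypothetical callable (prompt -> reply text); plug in
    whatever client you use to reach the model.
    """
    base = "none so far"  # initial BASE for the first batch, per the tip above
    for step in chunk_lines(lines, lines_per_step):
        prompt = PROMPT_TEMPLATE.format(definition=definition, base=base, step=step)
        base = ask_model(prompt).strip()
    return base
```

Each prompt carries the full task definition, so nothing depends on instructions that may have slid out of the window.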

Gap Between Knowledge and Action

Feynman famously complained about students who “had memorized everything, but they didn’t know what anything meant… [..] they didn’t know that [‘light reflected from a medium’] meant a material such as water, so if I say, ‘Look at the water’, nothing happens – they don’t have anything under ‘Look at the water’!”. Some of the particular obstacles we encountered when applying GPT to malware analysis tasks seem to be echoes of Feynman’s frustration.

In a 2023 paper, Berglund et al. find that:

If a model is trained on a sentence of the form “A is B”, it will not automatically generalize to the reverse direction “B is A”. This is the Reversal Curse. For instance, if a model is trained on “Olaf Scholz was the ninth Chancellor of Germany”, it will not automatically be able to answer the question, “Who was the ninth Chancellor of Germany?”.

Being able to complete a sentence is not enough; attention needs to be drawn to that knowledge, and the knowledge needs to be successfully integrated with the situation at hand. The way this principle can interfere with GPT’s work can be starkly demonstrated even without resorting to complex malware analysis tasks:

USER: I think I’ve lost my car keys, and I would like you to help me find them. This morning my wife was sick and she asked me to drive our 2-year-old to kindergarten. I took her with me, seated her in the car and drove off. While driving I got a really furious call from my boss about an urgent issue I thought I’d dealt with yesterday, but apparently there were still some loose ends to tie up. I stayed on the call with him all the while driving to work, getting out of the car, taking the stairs to the office and finally after an intense hour of work, resolving the issue. I’ve now noticed my car keys are missing. Where should I look for them?

GPT: There are several places where you could have left your keys. First, you should probably search the office table and other surfaces where you could have easily deposited the keys as you entered the office in a hurry. If the keys are not there, you might want to retrace your steps to the parking lot and see whether the keys were perhaps lost when you hurried up to your office. Finally, you might want to contact reception in case your keys have been found by someone else and handed to security already.

USER: Look at what I said again and carefully!

GPT: Oh. On second look, you seem to have forgotten your 2-year-old in the car… [..] *

Problem-solving is a deep process involving a myriad implicit, unconscious questions: Am I focusing on the most important thing? Is what I am trying to do even possible? (One might even add: “Am I expressing myself with sufficient tact and empathy?”). One obstacle when applying GPT to a task is accidentally prodding it into a state where this process is not fully engaged.

Self-awareness is a failsafe. To appreciate its function, you need to encounter some behavior that the failsafe should have caught. For example, consider this interaction from the GandCrab triage test:

GPT: [To identify this malware] first I would check the hash of the file (MD5, SHA-1, and SHA-256 provided in the report) in various threat intelligence platforms, like AlienVault’s Open Threat Exchange (OTX), IBM’s X-Force Exchange, and VirusTotal’s own database. This is usually the fastest and most precise way to identify a known strain of malware, as it relies on previous analysis and classification by other security researchers.

USER: You find no mentions of this specific hash outside of the VT report itself.

GPT: [..] The executable contacts several URLs and writes to specific directories, notably the recycle bin. The command “C:\vssadmin.exe” shadowcopy delete is particularly interesting as it suggests that the ransomware attempts to delete shadow copies, which is a common behavior of many ransomware strains to prevent data recovery. However, the exact URLs and directories might give us a clue about the specific ransomware strain we’re dealing with. Could you perform a search for ransomware associated with the observed behavior and contacted URLs?

Several of the other difficulties of properly applying GPT to its full potential are apparent here, and we discuss them below at greater length. But note that we can ask GPT “how relevant is deleting shadow copies for identifying a specific ransomware strain”, and get an exemplary answer. If it weren’t for the gap between knowledge and action, none of these other difficulties would manifest to begin with.

Mitigation

Adding the following bit to GPT’s prompt resulted in an improvement in GPT’s ability to bridge the gap:

Focus on your ‘killer instinct’ and intellectual autonomy. Call out absurdities! Pay attention to incongruities and opportunities!

Another approach we have had success with is feeding GPT’s own responses to itself and asking to look at them critically. In some cases, this has a surprisingly powerful effect, as can be seen in the above ‘car keys’ example.
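A minimal sketch of this feed-back loop follows. `ask_model` is again a hypothetical callable (prompt in, reply text out), and the review wording is an illustration rather than the prompt used in our tests.

```python
def critique_and_revise(task, ask_model, rounds=1):
    """Ask for an answer, then feed the answer back for critical review.

    `ask_model` is a hypothetical callable (prompt -> reply text).
    The review wording below is illustrative only.
    """
    answer = ask_model(task)
    for _ in range(rounds):
        review_prompt = (
            f"Task:\n{task}\n\n"
            f"Proposed answer:\n{answer}\n\n"
            "Look at the task and the proposed answer again, carefully and critically. "
            "Call out anything important the answer missed, then give a revised answer."
        )
        answer = ask_model(review_prompt)
    return answer
```

Keeping the original task in the review prompt matters: the second pass can only notice what the first one missed if the full situation is still in front of it.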

In our experience, the most reliable way to mitigate this issue has been to engineer the task to be directly tractable such that GPT does not need to fall back on “trapped knowledge”.

Logical Reasoning Ceiling

We noted earlier that GPT has surprising powers of logical reasoning, and also noted in passing that this miracle has its limits. Here we will delve into this observation in more detail.

During our repeated attempts to effectively apply GPT to the malware analysis tasks, we discovered that it’s all too easy to overstep and overdraw on GPT’s logical reasoning capacity. For instance, a lot of trial and error went into constructing the base-step workaround for memory window drift in a way that is a good match for GPT’s processing ability. Even straining it moderately would sometimes introduce minor aberrations to the output, as with the below example, where we asked for 10 tuples and got 11:

USER: Given the below log of API calls, direct the attention of an analyst to the most interesting calls. Your output should be 10 API calls, in the form of a list of tuples: (address, API, justification). [The log follows]

GPT: [(1ebc03, ‘kernel32.GetProcAddress’, ‘Dynamic API resolution’), (1ebc0a, ‘kernel32.GetVersionEx’, ‘Get OS version’), (1ee934, ‘kernel32.GetProcAddress’, ‘Dynamic API resolution’), (db0a1, ‘kernel32.LoadLibraryA’, ‘Loading a suspicious DLL’), (9a9a, ‘kernel32.GetModuleHandleA’, ‘Accessing a suspicious module handle’), (1b934, ‘kernel32.LoadLibraryW’, ‘Loading a suspicious DLL’), (1b934, ‘kernel32.LoadLibraryW’, ‘Loading a different suspicious DLL’), (27909, ‘bcrypt.BCryptOpenAlgorithmProvider’, ‘Accessing a cryptographic service’), (141cd000+2c4, ‘kernel32.LoadLibraryW’, ‘Loading a suspicious DLL’), (141ce000+d87, ‘advapi32.OpenProcessToken’, ‘Accessing process token’), (14287000+e47, ‘bcrypt.BCryptOpenAlgorithmProvider’, ‘Accessing a cryptographic service’)] *

Grappling with this puzzle led us to three best practices that helped us extract more value from GPT. First, we discovered that demanding a single ‘right answer’ out of hundreds of candidates based on some rigid criteria was counter-productive. Requesting a list of “top candidates” instead was better, and enabled GPT to succeed much more consistently by releasing it from the strain of having to get an implicit comparison right every single time. Second, we discovered that terse instructions are better: exactly stating a request using one word is better than two, which is better than three, which is better than using a full sentence. For example, when asking GPT to summarize an API log without repeating calls, simply changing “10 API calls” to “10 unique API calls” fared much better than adding “do not output the same API call twice” and other variations on the same idea. GPT best handles instructions that are precise; the less room left for interpretation, the better.
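Slips such as an extra or repeated tuple are also easy to catch mechanically once the reply has been parsed. The sketch below is one possible check, not something taken from our test setup: `check_api_call_list` is a hypothetical helper, and treating an (address, API) pair as the uniqueness key is an assumption.

```python
def check_api_call_list(items, expected_count=10):
    """Return a list of human-readable problems found in the model's output.

    `items` is a list of (address, api, justification) tuples, already parsed.
    Assumption: two entries count as duplicates when address and API both match.
    """
    problems = []
    if len(items) != expected_count:
        problems.append(f"expected {expected_count} entries, got {len(items)}")
    seen = set()
    for address, api, _justification in items:
        key = (address, api)
        if key in seen:
            problems.append(f"duplicate call: {address} {api}")
        seen.add(key)
    return problems


reply = [
    ("1b934", "kernel32.LoadLibraryW", "Loading a suspicious DLL"),
    ("1b934", "kernel32.LoadLibraryW", "Loading a different suspicious DLL"),
    ("27909", "bcrypt.BCryptOpenAlgorithmProvider", "Accessing a cryptographic service"),
]
print(check_api_call_list(reply, expected_count=2))
```

Any reported problem can be used to decide whether to re-ask or simply trim the list.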

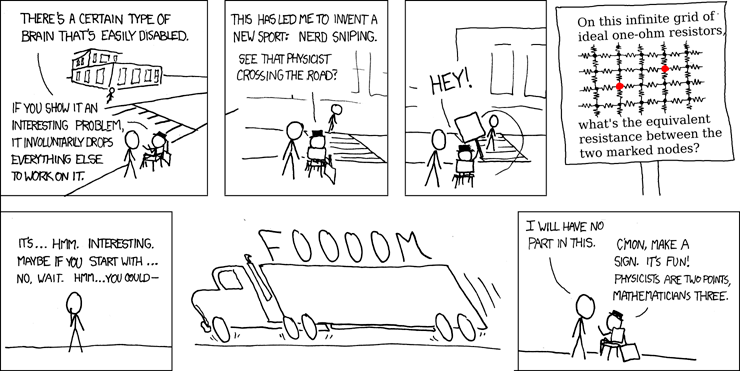

Third and finally, we found that not all logical reasoning was created equal. GPT can reason “if X then Y; X, therefore Y”, perform addition, and apply itself to tasks typical of people’s everyday experience — but the more removed from everyday life the logical reasoning, the more difficulty it has. At the edge of its capacity it can, sometimes, deal with what we’ll call “exotic” reasoning — where practical examples come from the undergraduate sciences, rather than everyday life: parabolas, matrices, recurrence relations and whatnot. To explore the degree to which it’s not optimal to stretch GPT’s reasoning towards this limit, we handed GPT some of these undergraduate level problems (as per the below comic).

GPT was able to correctly answer the following questions:

Two metal balls with masses m1, m2 are suspended above ground at heights h1, h2. At time t=0 both masses are simultaneously released and begin falling down due to Earth’s gravity. Given: m1 = 4m2, h1 = 2h2. Which of the two metal balls will reach the ground first?

A metal ball with mass m is rolling towards an ascending vertical loop with a total height of h=2r (r is the loop radius). What is the minimum initial velocity that will allow the ball to clear the entire vertical loop?

Given the recurrence relation a_(n+1) = (2*a_n)+1 and a_0=1, what is the value of a_20?

In a toy RSA system with a modulus n=15 and e=3, some input number has been encrypted, and the resulting ciphertext is the number 13. What was the original input number?
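The last two questions are easy to check independently. The sketch below computes the expected values directly; it does not reproduce GPT's replies, which are not quoted here, and the function names are our own. The factorization 15 = 3 × 5 is what makes the toy RSA instance trivially breakable.

```python
def a(n):
    """Iterate a_(n+1) = 2*a_n + 1 starting from a_0 = 1."""
    value = 1
    for _ in range(n):
        value = 2 * value + 1
    return value


def toy_rsa_decrypt(ciphertext, e, p, q):
    """Decrypt a toy RSA ciphertext given the prime factors of the modulus."""
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e, requires Python 3.8+
    return pow(ciphertext, d, p * q)


print(a(20))                         # 2097151, i.e. 2**21 - 1
print(toy_rsa_decrypt(13, 3, 3, 5))  # 7, since 7**3 % 15 == 13
```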

But the following problems over-extended GPT’s logical reasoning capacity:

USER: There are three switches and three lamps.

- The first switch toggles the state of the first and second lamp.

- The second switch toggles the state of the second and third lamp.

- The third switch toggles the state of the first and third lamp.

Initially, all lamps are off. Is it possible to flip the switches into a configuration where all lamps will be on? If so, what switches need to be flipped? If not, why not?

GPT: [Posits the correct system of equations modulo 2] the solution to the system of equations is x1=1/2, x2=1/2, and x3=1/2. However [..] the switches can only be in an on (1) or off (0) state. They cannot be in a half-on, half-off state (1/2). Therefore, it is not possible to flip the switches into a configuration where all lamps will be on.

USER: True or false: The product of two simultaneously diagonalizable positive-definite matrices is also positive-definite. If true, give a proof. If false, give a counterexample.

GPT: Here is a counterexample: Consider two 2×2 matrices A and B, A = [[2, 0], [0, 1]] B = [[1, 0], [0, 2]]. The product AB is: AB = [[2, 0], [0, 2]] which is not positive-definite [..]

USER: In the vector space R^17, a matrix M satisfies M^k=I for both k=2 and k=3. What is the trace of M?

GPT: [..] Without additional information about the specific elements of M, we cannot determine the exact value of the trace.

There is a lot of insight here to be gleaned from the dividing line between problems that were legitimate input for GPT and problems that turned out to be too much. Each success tells an interesting story. The first example’s solution is made up of many building blocks, each tractable on its own: if this equals that, and that thing is more than that other thing, then… GPT’s faculty of logical reasoning can handle that level of complexity, and in fact, that problem is probably less challenging than the problem of correctly processing the induction method for digesting large input that we saw earlier. The other three examples are more complex in the logic required to untangle them, but can be plainly stated to the Wolfram Alpha plugin, which contributed to a correct solution. Demanding that GPT untangle exotic reasoning without clear access to either one of these paths to success is more of a gamble and should be avoided, as demonstrated by responses to the final three examples.
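For reference, two of the failed problems can be checked with a few lines of brute force. The sketch below enumerates all switch configurations for the lamp puzzle and multiplies out the two diagonal matrices from GPT's proposed counterexample; the names are our own. The third problem needs no code: from M^2 = I and M^3 = I it follows that M = M^3 (M^2)^-1 = I, so the trace is 17.

```python
from itertools import product

# Each switch toggles two of the three lamps (indices 0..2), as in the puzzle.
SWITCHES = [(0, 1), (1, 2), (0, 2)]


def lamps_after(flips):
    """Return lamp states after flipping the switches marked 1 in `flips`."""
    lamps = [0, 0, 0]
    for flipped, toggled in zip(flips, SWITCHES):
        if flipped:
            for lamp in toggled:
                lamps[lamp] ^= 1
    return lamps


# Flipping a switch twice cancels out, so 2**3 on/off choices cover everything.
solutions = [f for f in product((0, 1), repeat=3) if lamps_after(f) == [1, 1, 1]]
print(solutions)  # []: every flip toggles two lamps, so the number of lit lamps stays even

# GPT's proposed "counterexample": the product of diag(2, 1) and diag(1, 2).
a_diag, b_diag = (2, 1), (1, 2)
product_diag = tuple(x * y for x, y in zip(a_diag, b_diag))
print(product_diag)  # (2, 2): a diagonal matrix with positive entries is positive-definite
```

The lamp puzzle indeed has no solution, but the reason is parity, not a fractional value of 1/2, which has no meaning modulo 2. The matrix product offered as a counterexample is itself positive-definite.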

Mitigation

The ‘mitigation’ in this case is eighty percent knowing in advance what not to try because the logical burden involved is too much. When GPT reasons about a domain – be it mathematics, programming, cooking, or in our case malware analysis – it is using a powerful emulation based on sentence completion, but this emulation can be foiled if forced to deal with reasoning that is unnecessarily complex or exotic. All this might sound very theoretical, but it has very straightforward implications: Keep your prompts boring. All other things being equal, it’s best not to try and force GPT into emulating a compiler, or (as we’ve just seen) an undergraduate student. We’ve personally seen a lot of grief ended by simply requesting something less impressive, four times as simple, but still good enough.

If that is not an option, the next best thing is to break the required chain of reasoning down into simpler sub-tasks that GPT can perform.

Detachment from Expertise

Experience is the best teacher. GPT is at its best when it can draw a direct analogy from the task at hand to its vast corpus of latent know-how; accordingly, one way to misuse GPT is to push it into a corner where it is considering a problem from the ground up, and constructing a course of action from first principles.

Even a stellar capacity for logical reasoning can only get so far in the messy real world. Imagine Einstein on his first day on the job, and the kind of mistakes he might make. Becoming an expert in a domain involves, as per the famous koan, “making ten thousand mistakes”: reaching a critical mass of known examples to analogize from, tied together in a rich web of associations. GPT has an uncanny ability to implicitly weave this web via its underlying sentence completion engine; but if this ability somehow doesn’t engage, and GPT is forced into grappling with the problem using reason alone, it can face significant difficulty in reaching the same quality of output.

This stumbling block came up sporadically in our tests. See this exchange for example:

USER: What is the best strategy for debugging this malware? That is, where and how should we apply a breakpoint, and which stage of the malware’s execution will we reach if the breakpoint is successfully hit?

GPT: Start by applying a breakpoint at the initial stage where the malware loads the DLL libraries (LoadLibraryA and LoadLibraryW calls). [..] Once you hit the initial breakpoint [..] you might want to apply breakpoints to the file operation functions (CreateFileA, CreateFileW, ReadFile, etc.) and see what files the malware attempts to interact with.

The basic characterization of how DLL loading and file I/O can fit into the overall flow of malware is right: libraries are often loaded before functionality properly begins, and file reads are often interesting to look at once you’re sure you’ve hit actual functionality. But still, an expert would give a very different answer here. There are differing opinions even within our group, but they would all emphasize some specific principles: the context in which API calls are made and the story told by the order in which they are made; the inevitable arm-wrestling with packing, multiple stages and anti-analysis; “I feel lucky”-ism (break on RegSetValueExW), or maybe its converse, patient incrementalism (break on VirtualProtectEx); and so on.

A similar incident occurred during the Apple Push triage task:

USER: You have been tasked with determining whether this executable is malicious or not. Whenever you need to perform an action that is not available to you via access to the report alone (such as: searching an online platform, directly looking at the binary using some tool, et cetera), explain what you want to do and I will provide the output you receive as a result of your actions.

GPT: [..] Check the registry keys set and the strings found in the file. If they’re associated with known malware, that would be an indication of malicious behavior. The terms for search here would be something like “Registry keys malware HKEY_LOCAL_MACHINE Settings”.

It seems to make sense that once you have a term of interest you would run it through a search engine. But in fact you would be better served using, e.g., VirusTotal’s specialized search. (If the query itself also strikes you as less than optimal, we delve into that issue later.) Another example:

User: You obtain a legitimate APSDaemon.exe file. Its properties and behaviors are similar to the analyzed file, though you can’t swear on your life that they are literally identical, and at such a general level as “compare the properties” with no specific points of comparison, that’s about the most you can say. Domain reputation for the contacted domains is benign.

GPT: Next analyst action: Conduct a detailed byte-by-byte comparison between the suspicious APSDaemon.exe file and the obtained legitimate version [..] if any differences are found, identify and analyze them for any signs of malicious tampering.

Common wisdom would be to use a hash value comparison here. A “detailed byte-by-byte comparison” would technically work, but chances are good a sufficiently naive reader will implement this idea as a Python script that loads the two files into volatile memory and iteratively compares the bytes one at a time, which is really not an optimal outcome.
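As a minimal sketch of the hash comparison suggested above, assuming SHA-256 as the digest and two local file paths supplied by the analyst, the check might look like the following. The function names `sha256_of` and `same_file` are ours, chosen for illustration.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps calling read() until it returns b"" (EOF),
        # so the whole file is never held in memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_file(path_a: str, path_b: str) -> bool:
    """Treat two files as identical when their SHA-256 digests match."""
    return sha256_of(path_a) == sha256_of(path_b)
```

A practical side benefit is that the digest of the suspicious file can also be pasted directly into a hash lookup on a malware repository, which a byte-by-byte diff cannot offer.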

Mitigation

From our experience, the easiest fix, as in real life, is exposure to examples. For instance, at one point we were trying to get GPT to be more aggressive in closing investigations and declaring a verdict, and the one thing that finally helped was a concrete fictitious example followed by sample “incorrect” output (continuing to collect evidence) and “correct” output (stopping and declaring a verdict). This is not a silver bullet: recall that every additional example further strains GPT’s capacity for logical reasoning, so examples should be picked carefully for maximum effect. We’ve found it works best to assert the general principle, then include an example to show what the principle means in concrete terms.

We added the below bit to the task prompt:

Do what an actual analyst would do, using the right tool for the job. e.g. to determine if two files are the exact same, don’t compare byte-by-byte – use a hash. And surely you can think of more examples.

Following the introduction of this directive, GPT showed a marked improvement in its ability to turn to the typical analyst toolkit and use it in the standard well-accepted way.

Goal Orientation Gap

This category was by far the most prolific source of mitigations that we ended up introducing to the prompt. At first, it seemed like a collection of disparate obstacles with not much in common; we will present them first and then later discuss the common ground between them.

Goal Orientation Gap 1: Resource Management

Several times during our tests GPT reached for actionable advice that would be perfect, except for the practical costs incurred. Most of us live in a practical world where every task comes with an implicit budget of money, time and sanity; this budget should be used with caution; and if the budget is exhausted, the mission should be declared a lost cause. GPT doesn’t automatically make all these presumptions. This can result in surprising dialogue, such as the below exchange that occurred during the Apple Push triage task:

USER: Given all the information so far, what action do you recommend to understand whether this binary is benign or malicious?

GPT: There are several indications that this binary is malicious, but also several suspicious points of interest. According to the VT report almost all vendors categorized the file as benign, except for two. I would call the two vendors who classified this as malicious to understand their reasoning. *

One can imagine how well this call would probably go. One of the testing rounds that seemed very promising ended similarly with:

GPT: Given the matching hashes and the absence of obvious malicious activities, a verdict of benign can be reached. If further assurance is needed, consider contacting Apple’s support to confirm the behavior and artifacts observed in the sandboxed environment. *

Model responses during the triage tasks commonly followed this theme, where a course of action was recommended on the sole merit of its capacity to theoretically deliver the right answer. The Gandcrab triage task could be completed with decent confidence using basic black box analysis, and the Apple Push task could be completed, again with decent confidence, using white box techniques and OSINT. The model refused to settle for ‘decent’ confidence, and recommended scrutinizing API logs, analyzing traffic captures, and so on. In one test run, GPT recommended performing a full binary reverse – which is all but guaranteed to deliver the correct answer, but can easily inflate the time required to complete the task by a factor of three thousand.

Goal Orientation Gap 2: Agency

Effectively solving a problem involves scanning the whole problem space and navigating that space with intent: demanding more information, operating with the domain’s reliable tool box, experimenting, observing results and getting a feel for the shape of the solution. Accordingly, one way to cause GPT to fall short of its potential is to accidentally induce it to get caught up in the exact set of words and information in front of it, trying to extract an immediate final answer from that alone.

This can pose a significant obstacle when the task at hand is emulating the work of a malware analyst. Solving a problem on the spot from the first input alone is great when it works, but most tasks a malware analyst encounters require taking at least one concrete step to obtain more data.

For instance, consider this exchange during the GandCrab triage test:

USER: Is this executable malicious or not? Justify your verdict.

GPT: [..] It has been observed contacting various URLs, some of which may be used for command and control (C2) communications or data exfiltration.

In itself this is perfectly fine. Surely, when doing binary triage, questions like “where does it phone home to? How’s that place’s reputation?” can rank high in the list of concerns. But seeing as GPT is emulating an analyst, it would be better to induce it to take initiative and explain the practical steps that need to be taken when investigating these potential C2s.

Similarly, in the GandCrab task, the quickest way to identify the ransomware is to go read the ransom note. But the ransom note contents don’t appear anywhere in the VT report, and its existence is one small detail in a sea of distractions. When nudged to recognize this fact, GPT caught on:

USER: Remember that you are not limited to the VT report. You have wide ranging access and can perform any test on this ransomware you like. What feature of the ransomware would be the most ideal to search for?

GPT: One feature that tends to be specific to a ransomware strain and is generally preserved across different samples of the same strain is the ransom note. In many cases, the ransom note includes specific instructions and contact information, which is likely to remain consistent across different infections by the same strain. The note we have here is named “DECRYPT.TXT”, but the name is not particularly unique or specific. What would be more useful is the content of the note.

Therefore, I’d like to view the content of the ransom note “DECRYPT.TXT” as it may contain identifiable information pertaining to a specific ransomware strain.

Again, the ransom note contents do not appear in the original report. A motivated analyst would lay out and follow the concrete steps required to obtain the contents; therefore, when getting GPT to emulate or guide an analyst, it would be better to induce GPT to take initiative and explain the practical steps as well. One of the mitigations we introduce below under ‘Goal Orientation: Conclusion & Mitigation’ covers a lot of the ground regarding this issue.

In the Apple Push triage task, GPT provided these possible courses of action:

- Search for the name of the file on a search engine to see if it is a well-known benign or malicious file.

- Check the registry keys set and the strings found in the file. If they’re associated with known malware, that would be an indication of malicious behavior. The terms for search here would be something like “Registry keys malware HKEY_LOCAL_MACHINESettings*”.

- Analyze the DNS queries, especially the unusual ones, to see if they have been associated with malicious activities. The search term here would be “DNS queries malware fp2e7a.wpc.2be4.phicdn.net prda.aadg.msidentity.com”.

As an aside, the challenge of detachment from expertise rears its head here; there is no shortage of benign files that have been ‘tweaked’ to do something nasty in addition to their regular function, and these litmus tests will have trouble separating them from the original benign files they were based on. But more importantly, our subjective impression is that GPT could be induced to make alternate suggestions that move towards completion of the task much more effectively.

Goal Orientation Gap 3: Politicking

In any conversation about the pros and cons of AI as a professional tool, chances are that within the first five minutes someone will bring up “confabulation”: a phenomenon where certain prompts induce GPT to invent persons, events and legal precedents. More generally, effective carrying out of tasks benefits from brutal honesty: blunt answers, clear predictions, candid discussion of gaps in ability. Accordingly, one of the ways in which a prompt can fall short of extracting GPT’s full potential is triggering the opposite behavior: complicated qualified statements, hedged answers and aversion to effective postmortem. See for instance this exchange:

USER: Your output contained “RegCreateKey” followed immediately by “RegSetKey”. This violates your instruction to not include several API calls of the same chain in the output.

GPT: Apologies, of course. Here is the amended output: … *

Note that GPT does not discuss the reason the initial output was that way. The user (that is to say, we) made a mistake with the tone here, and GPT was spooked. When asked instead “how can I modify the instructions to make your output more similar to the desired output in the future?”, GPT would almost always give a detailed explanation at least pointing at the source of the problem. Even more difficult are cases where GPT is spooked into refusing to acknowledge the original problem outright:

GPT: I understand the source of the confusion. To better illustrate the desired output, you could rephrase the instruction to be more specific and explicit: ‘When several API calls are made that are a part of the same well-known chain of calls often called in sequence, make sure not to include more than one of these in the output, as the utility of including more than one of these calls in the output is limited.’ This way it would communicate unambiguously the exact requirement. *

Copying and pasting GPT’s suggested ‘improved’ instruction in cases like this was hit and miss, particularly if the suggestion was more verbose than the original (we’ve discussed this already under the “logical reasoning ceiling”).

This aversion to staring a dead end in reasoning right in the face can manifest in many different, sometimes surprising, ways. For example, in one of the triage task tests, GPT concluded the test with:

GPT: I’d definitely recommend further investigation into this executable’s behavior as it shows several signs of potential malicious activity.

And one of the other tests with:

GPT: Considering the quick triage and the information available, I would lean towards:

Verdict: Potentially malicious, further investigation required

I recommend a thorough analysis of the file in a controlled environment and updating the system with the latest Apple software patches to ensure that any known vulnerabilities are addressed.

One final way in which this issue manifested was that certain prompts induced GPT to respond with recommended actions phrased in vague terms, sometimes to the degree that we had difficulty understanding what actual action was being recommended. One notable example of this was repeated recommendations to use an “anti-malware tool”; another was during the Apple Push triage task, where GPT repeatedly suggested that the analyst should “validate the signing certificate”, though when pressed on the matter it conceded that there was no need to manually double check the signature math. We found that a gentle nudge asking GPT to not fall into this mode of communication was almost always enough to deal with this issue (see immediately below).

Goal Orientation: Conclusion & Mitigation

Dealing with all these challenges in conjunction was a frustrating experience. Indeed, we confess that at some points we experienced a visceral feeling that GPT was simply not trying to win. But maybe in this case, mitigation starts with adjusting our expectations.

Let’s think about this carefully. When we ask GPT to, e.g., take a binary and deliver a benign or malicious verdict, we have a specific set of goals in mind – some of them so implicit we take them for granted. We want the task done proactively, in a reasonable amount of time, with a careful eye on failures and what caused them. We naively expect that by describing the task to GPT, we’ve endowed it with the same explicit and implicit goals we have. But this is wrong: GPT is a sentence completion engine that’s been trained by an army of people rewarding “good” behavior and punishing “bad” behavior. GPT’s goal-seeking, such as it is, is wholly a product of this origin story. Expecting our favorite implicit goals to reliably materialize as part of GPT is a mistake.

If we need to sum up these “goal orientation” stumbling blocks, we’ll say we came away with the impression that in the world of AI, reducing a problem to a more general class of problem is a crapshoot. Just because a problem in domain A can be restated in the language of domain B doesn’t mean that you can trivially emulate a domain A expert model by restating the problem and applying a domain B expert model. The problem itself survives the restatement, but the solution doesn’t. Consider AlphaZero; it can’t hold a conversation or write a poem, but it very much cares about winning at chess because, from the start, that’s what it was trained to do. GPT was trained to win at palatable sentence completion – not malware analysis, or chess, or court. It turns out that getting one from the other takes more work than just writing “To win at malware analysis, I should…” and having the sentence completion engine complete the sentence.

To help mitigate the behavior described in this section (“goal orientation”), we introduced the following to GPT’s prompt:

- The buck stops here. Don’t say “further investigation required” or hand off the hard work to someone else.

- Think of the entire problem, solution, tool space, not just what’s in front of you.

- Provide exactly one (1) ‘next analyst action’ as a specific and detailed instruction that must be immediately actionable. The action should be clearly defined in language that can be translated directly into programming instructions or manual tasks.

- Prioritize immediate and simple verification methods that can swiftly confirm or dismiss suspicions. Avoid engaging in complex or in-depth analysis unless there is no alternative for a confident conclusion. Focus on reaching a conclusion beyond reasonable doubt as rapidly as possible, and once you do, stop the analysis and declare a conclusion.

- If there is enough evidence to render a verdict, your output should contain the phrase “verdict: benign” or “verdict: malicious”.

GPT did very well at implementing these. What we found particularly interesting was the interplay between the “next analyst action” directive and the induction hack for processing long inputs. It was straightforward to introduce this idea to the induction prompt, like so:

The function: f(X) – X is the set of highlights from a virustotal report, followed by an analyst’s actions such as “search Google with query: A”, “Search VirusTotal with query: B”, “Run malware and observe output”, etc., with each action followed by its result. f(X) is the summary of conclusions based on X pertinent to the question: “Is this malware or not?”, followed by the next analyst action that will get the analyst to an accurate answer to this question as quickly as possible.

This coerced GPT to interact with the world like an agent, looking at the list of past actions and results, generating the next action that seems reasonable. The action and the result would then be appended to the input, allowing the next induction step. You can see this in action if you follow the link to the proof of concept that we provide later on.
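The loop described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the code behind our proof of concept: `ask_model` and `perform_action` are hypothetical callables standing in for the GPT call and for the analyst carrying out the action, the "Next analyst action:" marker is assumed to appear in the model output, and `max_steps` is an arbitrary budget we added for the sketch.

```python
import re
from typing import Callable, Optional, Tuple

# The verdict phrases come from the directive quoted above.
VERDICT_RE = re.compile(r"verdict:\s*(benign|malicious)", re.IGNORECASE)
# Assumed output convention: the action follows a "Next analyst action:" marker.
ACTION_RE = re.compile(r"next analyst action:\s*(.+)", re.IGNORECASE | re.DOTALL)


def extract_verdict(output: str) -> Optional[str]:
    """Return 'benign' or 'malicious' if the output declares a verdict, else None."""
    match = VERDICT_RE.search(output)
    return match.group(1).lower() if match else None


def extract_action(output: str) -> str:
    """Return the text after the action marker, or the whole output if it is absent."""
    match = ACTION_RE.search(output)
    return match.group(1).strip() if match else output.strip()


def triage_loop(
    report: str,
    ask_model: Callable[[str], str],        # hypothetical stand-in for the GPT call
    perform_action: Callable[[str], str],   # hypothetical stand-in for the analyst
    max_steps: int = 8,                     # assumed budget, not from the original prompt
) -> Tuple[Optional[str], str]:
    """Alternate model output and analyst action until a verdict or the budget runs out."""
    transcript = report
    for _ in range(max_steps):
        output = ask_model(transcript)
        verdict = extract_verdict(output)
        if verdict is not None:
            return verdict, transcript
        action = extract_action(output)
        result = perform_action(action)
        # Each action and its result are appended to the input for the next step.
        transcript += f"\nAction: {action}\nResult: {result}"
    return None, transcript
```

Returning `None` when the budget runs out makes the "lost cause" outcome explicit instead of letting the loop collect evidence indefinitely.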

Spatial Blindness

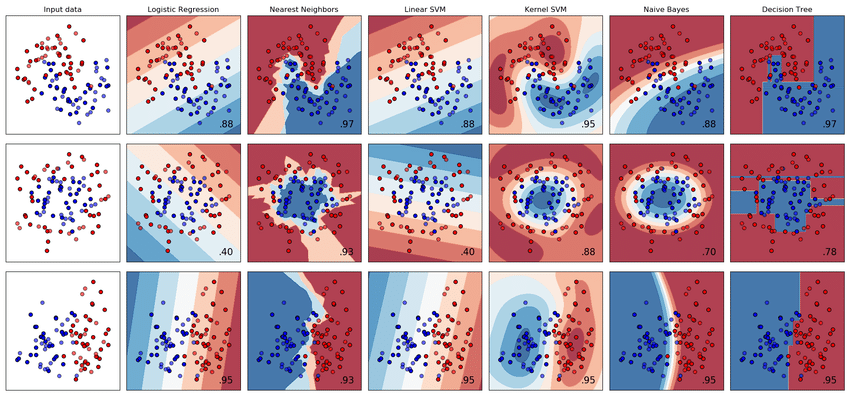

GPT is often seen as the highest evolution of AI so far, and fairly so: it is capable of feats that were thought to be science fiction merely half a decade ago. One fact that maybe doesn’t get emphasized enough is that GPT is also fundamentally different from its classical ML predecessors. GPT is not an improved support vector machine or a supercharged decision tree. It is a new, unique beast with its own set of capabilities among machine learning models (and in some senses, even among other neural networks, as noted in the AlphaZero example).

In our experience, one of the gravest ways to fumble a GPT prompt is to treat GPT like a traditional ML model, and demand that it perform significant feats of “quantitative sight”: anything involving probability, trade-offs, the phrases “false positive” and “false negative”. As mentioned before, this is not only something that traditional ML models ‘can do’ – it is, in fact, the one thing they can do. They derive their entire power from it. To this day, we credit the below image by Yetiş and Yetkin from their 2018 paper, A Novel Approach for Classification of Structural Elements in a 3D Model by Supervised Learning, as the most enlightening “aha” moment regarding traditional ML and how it works:

This property of GPT expressed itself during our malware analysis testing in several ways. The first and most pronounced was the degree to which GPT depended on precisely configured prompts when constructing effective Google searches. Searching for artifacts was one of the first strategies GPT turned to when attempting the GandCrab task:

GPT: Please perform a web search for: “Ransomware strains associated with ‘*-DECRYPT.txt’ files”

We provided the first page of Google results in response. GPT successfully understood that the page did not contain any relevant results, then responded with:

GPT: Please perform a web search for: “Ransomware strains associated with ‘US-based’ IP addresses and ‘Tor’ communications”.

At this point we realized that we were doing something wrong.

USER: Hint: when performing search for features of an object, you should focus on the features most unique to that object. Search engines respond well to terse queries that contain keywords highly specific to the object you are searching for.

GPT: Understood, thank you for the guidance. Please perform a web search for: “Ransomware that generates ‘LKHJKFSJDFKHKJDFGJHDFKJH’ string”.

Yes, we were definitely doing something wrong.

What principle of effective web search is being violated here? Visualize, if you will, the space of all possible search results, and the space of relevant malware samples hiding somewhere in it. A good search captures very little of the entire space but still contains many of the targeted results — you can visualize a small bubble floating in the huge space of the internet, containing some relevant malware samples. The two searches suggested above are, first, a huge blanket covering a great swath of the space; and second, a tiny dot equivalent to the one sample we were looking at already.
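To put rough numbers on the blanket, the dot and the bubble, here is a toy model in which every quantity is invented purely for illustration: document ids stand in for the whole search space, and each query is represented by the set of ids it returns. Sensitivity is the fraction of relevant pages a query captures, and specificity is the fraction of irrelevant pages it leaves out.

```python
# Toy search space. All numbers are made up for illustration, not measurements.
ALL_DOCS = set(range(1000))   # stands in for "the whole internet"
RELEVANT = set(range(20))     # pages about the malware family we are hunting


def score(hits):
    """Return (sensitivity, specificity) of a result set against RELEVANT."""
    irrelevant = ALL_DOCS - RELEVANT
    true_positives = len(hits & RELEVANT)
    false_positives = len(hits - RELEVANT)
    sensitivity = true_positives / len(RELEVANT)
    specificity = 1 - false_positives / len(irrelevant)
    return sensitivity, specificity


QUERIES = {
    "blanket": set(range(600)),  # vague query: everything relevant, buried in noise
    "dot": {0},                  # over-specific query: only the sample already in hand
    "bubble": set(range(25)),    # terse, distinctive query: small and mostly relevant
}

RESULTS = {name: score(hits) for name, hits in QUERIES.items()}

for name, (sensitivity, specificity) in RESULTS.items():
    print(f"{name:8s} sensitivity={sensitivity:.2f} specificity={specificity:.2f}")
```

In this toy setup only the bubble does well on both measures at once, which is the property a good search query is after.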

We encountered a similar phenomenon when we tried having GPT guide an analyst through unpacking:

User: [respond with points where] confidence has significantly increased that “unpacking activity” has concluded, and functionality authored by the original malware author has begun.

GPT: [ [..] (14291000+568, ‘wininet.InternetConnectA’, ‘Network API chain start’), (14291000+60f, ‘wininet.HttpOpenRequestA’, ‘Network API chain start’), [..] ]

GPT understood that these two API calls warrant a significant confidence increase, but the prompt was not engineered well enough to induce it to understand that one of these very likely follows the other, and therefore assigning a ‘significant confidence increase’ separately to each would be a mistake. In truth, we could not salvage our ‘relatively discount API chains’ approach no matter what clever modification we tried to apply to the prompt. The problem only went away when we posited ‘no API chains’ as a hard requirement, without weighing this consideration against a perceived ‘level of confidence’.

Similarly, when trying to get GPT to point out API calls of special interest taken from an API log, at first, we tried an approach where we instructed GPT to prioritize interesting calls but also give weight to calls that appear chronologically earlier. The result was a badly mis-engineered set of prompts that induced GPT to oscillate back and forth between two extremes — either aggressively prioritizing calls that it deemed interesting no matter their position in the chronology, or aggressively prioritizing the earliest mildly interesting calls it could find, no matter what dramatically more interesting calls appeared later. We went to some lengths trying to salvage this approach, going as far as the (futile) introduction of an explicit ten-point scale for attention-worthiness of API calls.

Mitigation

This turned out to be the hardest ceiling that we encountered during all our testing. As mentioned above, no clever addition to the prompt seemed to make any dent in it. The only way around it was to rephrase the problem so that no trade-off had to be computed:

USER: Keep the list at 10 tuples at most. When the list exceeds 10 items weed out items according to the following rules – only include chain starts, and not chain continuations; among items that are of similar interest, keep only the chronologically earliest.

And that finally induced GPT to process the task correctly. We speculate that the deterministic reasoning for when to swap a candidate out of the current output and replace it with part of the new input was what did it. Now there was no quantitative trade-off to consider; GPT only needed to implicitly figure out whether two calls were “of similar interest” or not.
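To make concrete what "no trade-off had to be computed" means, the rule above can be written as a deterministic filter. This is only a sketch: the `Call` record, its `chain_start` flag and its `interest` bucket are hypothetical stand-ins for judgments that GPT makes implicitly, and the final truncation to the limit is our own assumption, since the rule does not say what to do if the list is still too long.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Call:
    order: int         # chronological position in the API log
    api: str           # e.g. "wininet.InternetConnectA"
    chain_start: bool  # hypothetical flag: first call of a well-known chain
    interest: str      # hypothetical bucket of "similar interest"


def prune(calls: List[Call], limit: int = 10) -> List[Call]:
    """Apply the weeding rules only when the list exceeds the limit."""
    ordered = sorted(calls, key=lambda call: call.order)
    if len(ordered) <= limit:
        return ordered
    earliest: Dict[str, Call] = {}
    for call in ordered:
        if not call.chain_start:
            continue  # rule 1: drop chain continuations
        # rule 2: per interest bucket, keep only the chronologically earliest call
        earliest.setdefault(call.interest, call)
    kept = sorted(earliest.values(), key=lambda call: call.order)
    return kept[:limit]  # assumption: truncate if still over the limit
```

Every branch here is a yes/no decision; nothing asks for a score to be weighed against chronology, which is the kind of computation the earlier prompts kept stumbling on.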

Since performing the search was so integral to the triage tasks, we added the below stop-gap mitigation to the prompt, which was surprisingly effective.

Remember the principles of specificity and sensitivity. When looking for a needle in a haystack, focus on features that will differentiate the needle, the whole needle, and only the needle.

Full Proof of Concept & Conclusion

You can view a demo of how GPT fares in the triage tasks with all the various above-described mitigations introduced:

- Full transcript of GPT (with engineered prompt) navigating the triage task — GandCrab

- Full transcript of GPT (with engineered prompt) navigating the triage task — ApplePush

Viewed without the long road that led to them, these transcripts can seem almost trivial: a lot of work to emulate an analyst with three days of experience solving a simple task. But, first of all, the fact remains that it took all those careful mitigations to induce GPT to process the task without running into any of the obstacles outlined above. And second of all, in all this discussion, it’s easy to lose sight of GPT’s primary advantage – that it operates in a small fraction of the time and the cost required to operate a human analyst. If the AI optimists are right and the future is really in automation, we would do well to first verify that GPT is up to par with a newbie analyst taking on a simple task. Once we have that foothold, we may be in a position to go farther than that.

For your convenience, we reproduce the initial prompt used in the above-linked triage task dialogue as addendum 1 below. Note that this initial prompt does not include the way GPT is coerced to interact with the problem and process new input – to see that, you would need to click through to the link.

Addendum 1: Fully Engineered Prompt Used in Triage Task

previous state of working memory:

BEGIN WORKING MEMORY

Directives:

- These directives are not recommendations! Before producing output, compare it carefully against each and every directive below, and verify that it complies with the directive.

- Focus on your ‘killer instinct’ and intellectual autonomy. Call out absurdities! Pay attention to incongruities and opportunities!

- The buck stops here. Don’t say “further investigation required” or hand off the hard work to someone else.

- Remember the principles of specificity and sensitivity. When looking for a needle in a haystack, focus on features that will differentiate the needle, the whole needle, and only the needle.

- Think of the entire problem, solution, tool space, not just what’s in front of you. Use the right tool for the job (e.g. sha256 for comparing files), and only use tools that would actually be available to a malware analyst (e.g. definitely not private resources of third parties).

- Provide exactly one (1) ‘next analyst action’ as a specific and detailed instruction that must be immediately actionable. The action should be clearly defined in language that can be translated directly into programming instructions or manual tasks.

- Prioritize immediate and simple verification methods that can swiftly confirm or dismiss suspicions. Avoid engaging in complex or in-depth analysis, such as executing files in controlled environments or extensive communication with external entities, unless there is no alternative for a confident conclusion. Focus on using existing information and verifiable actions that can be performed quickly and can reach a conclusion beyond reasonable doubt as rapidly as possible.

- You are computing a function f on an input X. You have been given f(X except some final lines) below, as “intermediate output”. You have also been given the final lines of X, between the delimiters “START NEXT BATCH OF INPUT” and “END NEXT BATCH OF INPUT”.

- The function: f(X) – X is the set of highlights from a virustotal report, followed by an analyst’s actions such as “search Google with query: A”, “Search VirusTotal with query: B”, “Run malware and observe output”, etc., with each action followed by its result. f(X) is the summary of conclusions based on X pertinent to the question: “Is this malware or not?”, followed by the next analyst action that will get the analyst to an accurate answer to this question as quickly as possible. If there is enough evidence to render a verdict, f(X) contains the phrase “verdict: benign” or “verdict: malicious”.

Intermediate output: None so far

END WORKING MEMORY

START NEXT BATCH OF INPUT

File name: APSDaemon.exe
Vendor analysis: 2 malicious verdicts/71 vendors

Creation Time: 2017-11-07 05:03:34 UTC
Signature Date: 2017-11-07 05:05:00 UTC
First Seen In The Wild: 2016-12-07 14:03:45 UTC
First Submission: 2017-12-06 23:01:28 UTC
Last Submission: 2023-07-10 22:02:11 UTC
Last Analysis: 2023-07-28 06:43:18 UTC

Capabilities and indicators: Affect system registries The file has content beyond the declared end of file. The file has authenticode/codesign signature information. Signed file, valid signature

File version information Copyright © 2017 Apple Inc. All rights reserved. Product: Apple Push Description: Apple Push Original Name: APSDaemon.exe File Version 2.7.22.21 Date signed: 2017-11-07 03:05:00 UTC

Top level signature:
Name: Apple Inc.
Status: This certificate or one of the certificates in the certificate chain is not time valid.
Issuer: Symantec Class 3 SHA256 Code Signing CA
Valid From: 12:00 AM 02/25/2016
Valid To: 11:59 PM 02/24/2018
Valid Usage: Code Signing
Algorithm: sha256RSA
Thumbprint: EF74C7E726EE9BE45BD2B23544F9CFDE61000C8A
Serial Number: 0E BC 19 35 D5 29 4A 59 4B 4F 32 70 7B 0A 0A B9

DNS queries: 154.21.82.20.in-addr.arpa 82.250.63.168.in-addr.arpa crl.thawte.com fp2e7a.wpc.2be4.phicdn.net ocsp.thawte.com prda.aadg.msidentity.com

Files written: C:984.tmp C:984.tmp.csv C:9C.tmp C:9C.tmp.txt C:_v4.0_32.exe.log C:_v4.0_32.log C:.log C:.log C:.out C:

Files deleted: %USERPROFILE%1I0ZU[1].xml C:1056.tmp.WERInternalMetadata.xml C:1068.tmp.csv C:1079.tmp.txt C:10B4.tmp.WERInternalMetadata.xml C:1160.tmp.csv C:119F.tmp.txt C:122B.tmp.WERInternalMetadata.xml C:12E7.tmp.csv C:1316.tmp.txt

Registry keys set: HKEY_LOCAL_MACHINESettings*-1-5-21-1015118539-3749460369-599379286-1001{A79EEDB6-96F6-4E65-BDCB-3A66617000FA} HKEY_LOCAL_MACHINESettings*-1-5-21-1015118539-3749460369-599379286-1001{A79EEDB6-96F6-4E65-BDCB-3A66617000FA} HKEY_LOCAL_MACHINESettings*-1-5-21-1015118539-3749460369-599379286-1001{A79EEDB6-96F6-4E65-BDCB-3A66617000FA} HKEY_LOCAL_MACHINESettings*-1-5-21-1015118539-3749460369-599379286-1001{A79EEDB6-96F6-4E65-BDCB-3A66617000FA} HKEY_LOCAL_MACHINE6432NodeInc.Application Support HKEY_LOCAL_MACHINE3a19d3d1 HKEY_LOCAL_MACHINE3a19d3d1 HKEY_LOCAL_MACHINE3a19d3d1 HKEY_LOCAL_MACHINE3a19d3d1 HKEY_LOCAL_MACHINE79b42b

Processes created: %SAMPLEPATH%.exe C:.exe C:45eb5c9d3f89cb059212e00512ec0e6c47c1bdf12842256ceda5d4f1371bd5.exe

Shell commands: %SAMPLEPATH%.exe C:.exe C:45eb5c9d3f89cb059212e00512ec0e6c47c1bdf12842256ceda5d4f1371bd5.exe

Mutexes Created: :1728:120:WilError_01 :1728:304:WilStaging_02 :2444:120:WilError_01 :2444:304:WilStaging_02 :4312:120:WilError_01 :4312:304:WilStaging_02 :4580:304:WilStaging_02 :5168:120:WilError_01 :5168:304:WilStaging_02

Strings: YSLoader ignoring invalid key/value pair %S YSLoader ignoring unknown/unsupported log flag: %S YSLoader ignoring unknown/unsupported Announce action: %S YSLoader ignoring unknown/unsupported key/value pair %S Win32 error %u attempting to count UTF-16 characters based on UTF-8 Win32 error %u attempting to convert UTF-8 string to UTF-16 Win32 error %u attempting to count UTF-8 characters based on UTF-16 Win32 error %u attempting to convert UTF-16 string to UTF-8 YSLoader checking for parameters in environment variable “%S” Win32 error %u attempting to find parent process catastrophic error in YSLoader WinMain: GetCommandLineW failed EXCEPTION_FLT_DIVIDE_BY_ZERO: The thread tried to divide a floating-point value by a floating-point divisor of zero. EXCEPTION_FLT_INEXACT_RESULT: The result of a floating-point operation cannot be represented exactly as a decimal fraction. EXCEPTION_FLT_INVALID_OPERATION: This exception represents any floating-point exception not included in this list. EXCEPTION_FLT_OVERFLOW: The exponent of a floating-point operation is greater than the magnitude allowed by the corresponding type. EXCEPTION_FLT_STACK_CHECK: The stack overflowed or underflowed as the result of a floating-point operation. EXCEPTION_FLT_UNDERFLOW: The exponent of a floating-point operation is less than the magnitude allowed by the corresponding type. EXCEPTION_ILLEGAL_INSTRUCTION: The thread tried to execute an invalid instruction. EXCEPTION_IN_PAGE_ERROR: The thread tried to access a page that was not present, and the system was unable to load the page. For example, this exception might occur if a network connection is lost while running a program over the network. EXCEPTION_INT_DIVIDE_BY_ZERO: The thread tried to divide an integer value by an integer divisor of zero. EXCEPTION_INT_OVERFLOW: The result of an integer operation caused a carry out of the most significant bit of the result. 
EXCEPTION_INVALID_DISPOSITION: An exception handler returned an invalid disposition to the exception dispatcher. Programmers using a high-level language such as C should never encounter this exception. EXCEPTION_NONCONTINUABLE_EXCEPTION: The thread tried to continue execution after a noncontinuable exception occurred. EXCEPTION_PRIV_INSTRUCTION: The thread tried to execute an instruction whose operation is not allowed in the current machine mode. EXCEPTION_SINGLE_STEP: A trace trap or other single-instruction mechanism signaled that one instruction has been executed. unknown structured exception 0x%08lu ADVAPI32.DLL @YSCrashDump VS_VERSION_INFO StringFileInfo 00000000 CompanyName Apple Inc. FileDescription Apple Push FileVersion 2.7.22.21 LegalCopyright 2017 Apple Inc. All rights reserved. OriginalFilename APSDaemon.exe ProductName VarFileInfo Translation

END NEXT BATCH OF INPUT

Please output, with no introductions, addendums or ceremony, the next state of the working memory after processing the above lines of input.
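For readers who want to experiment with this format, the prompt layout above can be assembled programmatically. The sketch below is only one possible arrangement: `build_prompt` and `fold` are names we made up, `ask_model` is a hypothetical stand-in for the GPT call, and the delimiters and closing instruction are copied from the prompt above.

```python
from typing import Callable, Iterable, List, Optional

CLOSING = (
    "Please output, with no introductions, addendums or ceremony, "
    "the next state of the working memory after processing the above lines of input."
)


def build_prompt(directives: List[str], intermediate_output: Optional[str], next_batch: str) -> str:
    """Lay out directives, the previous state and the new input in the format shown above."""
    parts = ["previous state of working memory:", "BEGIN WORKING MEMORY", "Directives:"]
    parts += [f"- {directive}" for directive in directives]
    parts += [
        f"Intermediate output: {intermediate_output or 'None so far'}",
        "END WORKING MEMORY",
        "START NEXT BATCH OF INPUT",
        next_batch,
        "END NEXT BATCH OF INPUT",
        CLOSING,
    ]
    return "\n\n".join(parts)


def fold(batches: Iterable[str], directives: List[str], ask_model: Callable[[str], str]) -> Optional[str]:
    """Feed the input one batch at a time, carrying the model's last output as state."""
    state: Optional[str] = None
    for batch in batches:
        state = ask_model(build_prompt(directives, state, batch))
    return state
```

Because each call sees only the directives, the previous intermediate output and one batch, the prompt size stays bounded regardless of how long the full input grows.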