Problem Statement

You attempt to analyze a binary file compiled in the Rust programming language. You open the file in your favorite disassembler. Twenty minutes later you wish you had never been born.

You’ve trained yourself to think like g++ and msvc: Here’s a loop, there’s a vtable, that’s a global variable, a library function, an exception. Now you need to think like the Rust compiler. Maybe you’ve heard about “sum types” and “generics” and “iterators”, maybe you haven’t, and in both cases you are going to have an exceptionally bad time.

Scope and Audience

The goal of this publication is for you to become familiar with the assembly code idioms the Rust compiler uses to implement the language’s core features (as they appear in Klabnik’s and Nichols’ “The Rust Programming Language”), and more generally, the frame of mind required for reverse-engineering such programs. To this end, the publication includes several sample programs that showcase core Rust features; the programs are compiled using the Rust compiler rustc, and are followed by a guided reverse-engineering of the resulting assembly.

This document is not an end-to-end how-to guide for doing a blind RE of a stripped Rust binary. It strictly tackles the problem as per the problem statement: hopefully once you’ve read this, you can think like the Rust compiler, and you are at peace again with having been born, at least to some degree. In practice, this means analyzed programs are compiled targeting Windows OS in the default “debug” mode, with their symbols intact. Still, there is a dedicated section analyzing an optimized “release” build and presenting some commentary on the similarities and differences between the two resulting output binaries.

We assume fluency in at least one programming language and familiarity with the ins-and-outs of reverse engineering C/C++ programs. Fluency in Rust is not necessary and Rust features will be briefly explained as we go. All sample programs are available in this document’s accompanying Github Repository and should be compiled with a simple cargo build. All compilations generating the pictured assembly were performed using rustc 1.60.0 (7737e0b5c 2022-04-04) targeting 64-bit Windows, and analyzed using IDA 8.2.230124.

Basic Programming Language Concepts

This part covers features of Rust that are universally common among programming languages: variables, data types, structs, enums, modules, references, functions, comments, loops, if/then/else, match statements and constants. None of these should trip you up per se, but this is a good opportunity to become familiar with basic rustc output and its idiosyncrasies. Sample program 0, used for this section, is available here. Below is an excerpt from the program’s main function.

let alice = Person {

name: String::from("Alice"),

age: constants::ALICE_AGE,

favorite_beatle: Beatle::George

};

let bob = Person {

name: String::from("Bob"),

age: 71,

favorite_beatle: Beatle::Ringo

};

let carol = Person {

name: String::from("Carol"),

age: 45,

favorite_beatle: Beatle::Paul

};

for i in 0..10 {

if i>5 {

println!(

"In {} years, Alice will be {}", i, age_in_future(&alice,i)

);

}

}

Most of the program should be self-explanatory. Below we list Rust quirks of note, and include snippets of the full program where the feature is not visible in the program section above.

- Declaring variables is done using the

letkeyword. - Variable types are declared after variables, following a colon. Some built-in types are

i64,i32(signed integer) andu64,u32(unsigned integer). Sometimes the compiler can tell the correct type on its own (“type inference”) — e.g. above instead of writinglet Alice: Person = Person { ..., writinglet Alice = Person { ...is enough. - Function return types are declared after function parameters, following the sigil

->. e.g.fn age_in_future(p: &Person, years: i64) -> i64 - Instead of

return x;it is possible to simply writexwithout the semicolon. This can be seen in the functionage_in_future:

fn age_in_future(p: &Person, years: i64) -> i64 {

p.age + years

}

- There are two built-in types for handling strings –

String(full data structure with bells and whistles) and&str(a bounded pointer, “slice”). String literals such as “Alice” have type&str, which necessitates the callString::from("Alice"). println!(...)is a macro, not a function call, hence the!sigil.matchis roughly analogous to C’sswitchand can be used as an expression:

let song = match carol.favorite_beatle {

Beatle::John => "Imagine",

Beatle::Paul => "Yesterday",

Beatle::George => "Here Comes The Sun",

Beatle::Ringo => "Don't Pass Me By"

}; // should evaluate to "Yesterday"

- Modules (

mod a_useful_module { … }) are roughly analogous to namespaces.

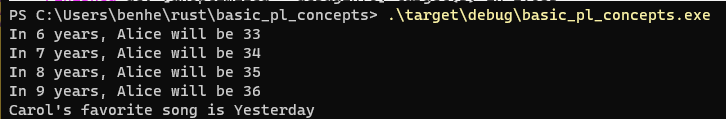

The program output:

Since this is the first sample program, naturally the moment we look at the compiler output we run into every one of its basic idiosyncrasies. Below we list several of these that we found to be noteworthy.

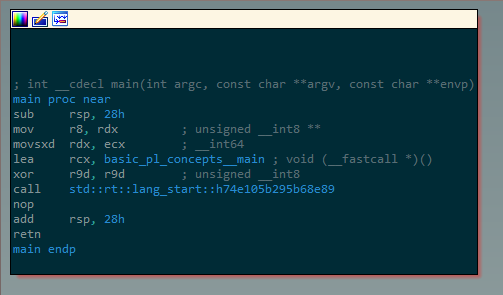

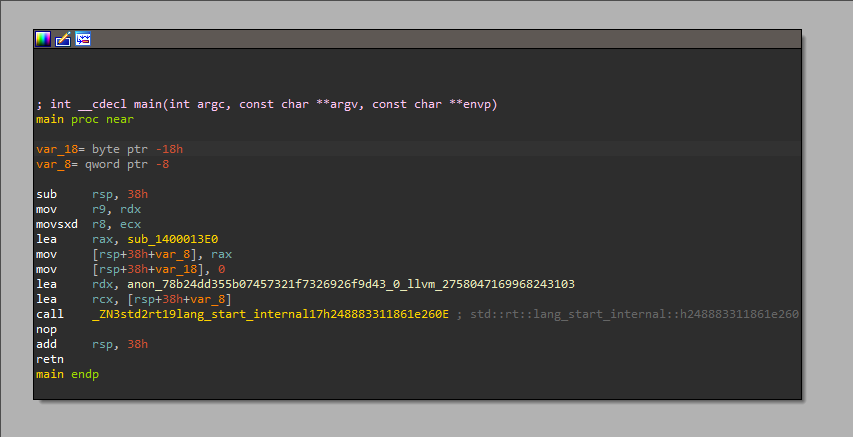

Indirect Main Function Call

The program entry point is at a short basic block that does not call the program’s actual main function directly. Instead, it provides the main function as a parameter to another function, called lang_start. This is not astronomically different from a C program first calling crt_main which only then invokes the program’s actual main function. The function itself has the full name basic_pl_concepts__main because basic_pl_concepts is the name of the crate, which is Rust lingo roughly meaning “project”. As per the official documentation: “A crate is the smallest amount of code that the Rust compiler considers at a time. Even if you [..] pass a single source code file [..] the compiler considers that file to be a crate.”

Broken Disassembly

While this document does not focus on the disassembly process itself, we would be in dereliction of our duty not to mention that once you click through to the main function, some earlier versions of IDA may choke on it and fail to parse the function properly:

When this sort of thing happens due to a program’s malicious intent it is called “anti-disassembly” and is dealt with using fancy Python scripts and manual patching of code flow cross-references. Here, it is an accidental hiccup due to a benign IDA interacting with a benign program, and it is solved by sighing, navigating to each of the un-parsed bytes, pressing c (convert to code), then once done retreating all the way back to the function start and pressing p (convert to function). If IDA is stubborn about this, watch the console output (e.g. maybe you’ve missed a rogue byte, and IDA will explicitly complain about it, including its exact address).

Mangled Names

We have reached our first Painful Conversation.

Rust internal function names assigned by the compiler are a disaster. We’ll get to the “why” later, but right now the bottom line is that compiler-generated function name symbols, which are normally an incredible boon for a curious reverse engineer, look like this in Rust:

This doesn’t mean the symbol is useless. In fact, this is a function that a clinically sane programmer would simply call by the name next, which in fact does appear somewhere in there (so why all the noise? Good question! Patience). Maybe you’re savvy enough to try Options -> Demangled Names... -> Show Demangled C++ names as Names, but sadly this barely makes a dent.

We’re trying to be careful not to deep-dive into the root of the issue right this second, but familiarity with Rust commonly used types and their available methods can short-circuit a lot of grief here. If you know to expect a call to next at that location, or even some call to an Iterator method, you are well-positioned to parse that name correctly and move on.

The same core issue that induces these verbose names, which we’ll revisit later, is also at the source of another point of interest: if your favorite function similarity engine assigns a name to a function in a Rust binary, be mentally ready even more than usual to disregard the match with prejudice. Again, we will revisit this point in more detail later.

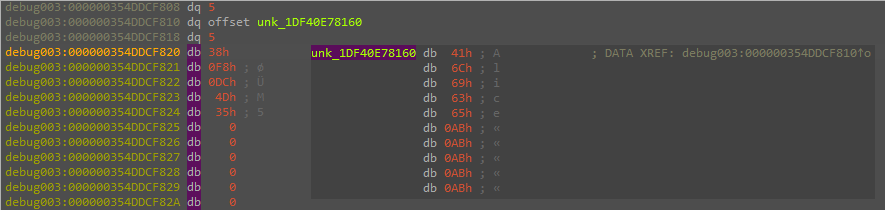

Contiguous Strings

This is the proverbial “welcome to REing modern compiled languages” gotcha; this quirk also appears in, for example, Golang binaries, and will greet you the moment you naturally start your analysis with a string search hoping to get lucky. Strings data is kept as a contiguous blob without null terminators. The bounded pointers (“slices”) we mentioned earlier are responsible for keeping track of where the relevant data ends (so, under the hood "Alice" is a struct containing a pointer to the A and the number 5). In-memory (address 0x1400204A0) you can see an example of this, where an ascii blob goes attempt to add with overflowAliceBobCarilIn years, Alice will be, a concatenation of several strings which the program uses for separate purposes.

IDA naturally fails to correctly partition the blob’s constituent strings. To manually remedy that, hover over the beginning of the relevant strings, press a as usual, then press * and enter the new correct length of string in the “array length” field. Also you might want to edit the autogenerated name IDA will assign to the string the moment you press a. IDA will get characters from the entire blob and not stop when the actual string is exhausted (e.g. the “Alice” string location will be auto-named aAliceBobSyntaxError etc etc until the name length limit is hit).

rsp-Only Stack Frame

The generated code uses rsp only to manage the stack frame. Function prologues are a simple sub rsp, <size_of_frame>, with a matching epilogue of add rsp, <size_of_frame>.

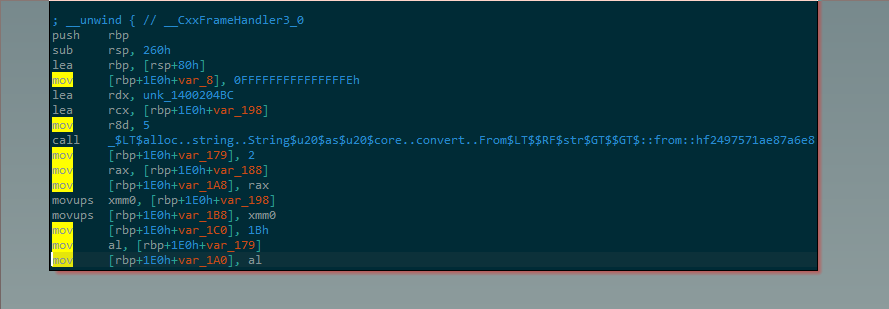

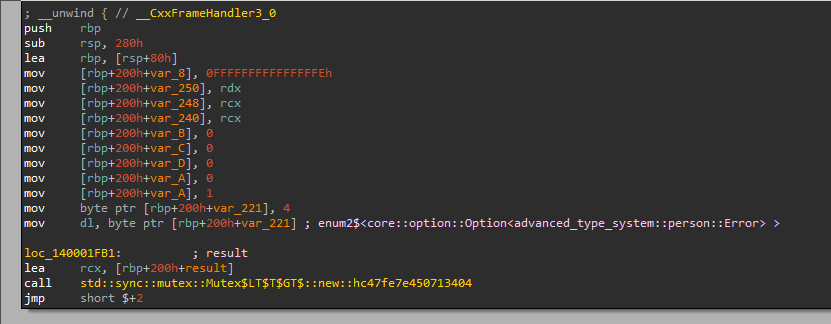

The one exception is the main function. Its prologue does assign a value to rbp:

push rbp sub rsp, 0x260 lea rbp, [rsp+0x80] (function body here) add rsp, 0x260 pop rbp

When the main function assembly references an argument or a variable, it does so relatively to rbp (IDA aligns its stack view relatively to the old value of rbp, which is rbp+0x1E0).

Happily this complicated calling convention is limited to the main function.

Structs on a Stack

Like C and C++, Rust is a system programming language, which among other things implies it will only dynamically allocate memory if you tell it to. In idiomatic C or C++, though, dynamically allocating memory is the most natural thing in the world; once you’ve defined SomeStruct and want to use it, your fingers will already move by muscle memory to type: SomeStruct* some_struct = malloc(sizeof(SomeStruct)) (C) or SomeStruct* some_struct = new SomeStruct (C++). By contrast, idiomatic Rust is not so happy-go-lucky about heap allocations. There is no malloc; a sort-of equivalent does technically exist (let some_struct : Box<SomeStruct> = new(default()); using Box::new and Default::default) but peppering your code with these for no reason will get you tasered by angry people on Stack Overflow. By default, a Rust programmer will simply write let some_struct = … , and only resort to explicit heap allocations using Box or another container when strictly necessary. rustc in turn will implement the let statement by reserving memory for the struct on the stack, unless it can’t (typically because the object size is not known at compile time).

This results in Rust programs where objects live on the stack that in a typical C or C++ program would have very definitely lived on the heap. For example, in sample program 0, every single Person lives in a contiguous piece of memory on the stack. This doesn’t sound so bad until you consider what interactive disassembly of these variables is like. There is no standard helpful sign explaining where a SomeStruct on the stack begins or ends — that information is lost during compilation. Furthermore, a “Debug” compilation will produce many copies of the same variable data; first the correct data is created, then copied to its proper place as a variable, and sometimes even a third time. The end result of all the above is a flurry of reads, writes and function calls using stack offsets without any immediately apparent rhyme or reason. Also, what’s up with that xmmword?

When starting out reverse-engineering C binaries, one will inevitably run across a very similar experience dealing with their first serious struct. The flow of data does not seem to make sense, then after some web searching and deep thought there’s the lightbulb moment. 344 iterations of this later, it’s become second nature: you see mov rcx, [rdx+0x12] and say “oh ok, here we go again”, and go look for the allocation size and reach for the “Structs” tab and so on. Rust’s eagerness to put variables on the stack takes away this easy alarm bell and the easy fix. So, first you have to fall back on that primordial understanding that if the program is writing data to the stack only to apparently never use it again, or conversely reading data from the stack that apparently had not been written to before, then you are probably dealing with a struct. Then you need to somehow figure out the struct’s address and size, both of which strictly speaking are not guaranteed to appear anywhere.

This is a task best approached with flexibility and caution. Maybe the struct’s address will be used as a parameter for a function call that evidently expects a struct pointer (again, [rdx+0x12] and all that), or a convenient destruct / dealloc. This is a clue, not a guarantee. Maybe the pattern of initialization can help; if several close stack offsets are being modified in sequence without having been modified before, the highest up the stack among them (low addresses higher) is a candidate for the struct address. If some address is clearly part of the struct but is interacted with once and never used again, it is probably not the struct address. Probably the most practical approach is trial and error – define a speculative defined struct and size and see how well the pieces fit.

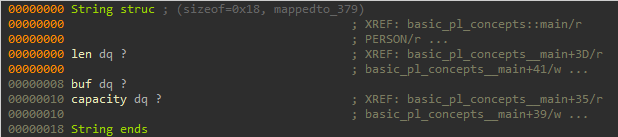

Do not underestimate how some things are immediately obvious from one look at runtime memory during a debugging session. For example using the debugger it can be seen that the String struct is implemented as 3 qwords: “string length”, buffer pointer and, uh, “string length 2”:

Some further research reveals that “string length 2” is a “capacity” field. This in-memory visual sweep is a technique that is equally applicable to binaries spawned by any programming language, but the lack of clarity in the static assembly makes it extra useful here.

The String struct and the fully IDA-ed construction of Alice’s Person object are given below.

Your For Loop is in Another Castle

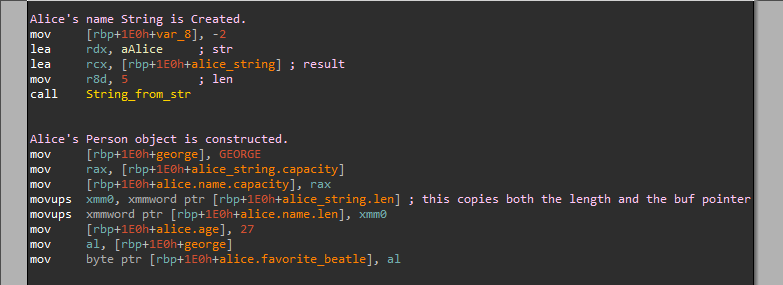

The above program contains a for loop because that’s a basic programming language feature. Unfortunately, under the hood, Rust’s for loop is an alien wearing human skin. for i in 0..10 may appear syntactically similar to for (i=0; i<10; i++) but actually here 0..10 is an expression signifying something called a “Range Object”, which implements – no, look, the entire point of having a “basic programming language features” section was to get a foothold without getting into that.

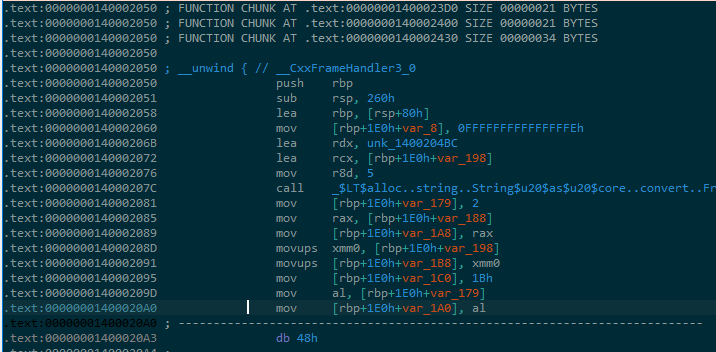

So let us say that rustc builds a for loop by creating this struct called a Range. Under the hood the Range is a very simple creature: it has two fields, start and end. There’s a function called next which you can call on a Range and this function returns two values, “value” in rdx and a boolean “got_something” in rax. If start < end then next returns value=start, got_something=1 and increments start. Otherwise next returns an undefined value and got_something=0.

To implement the above for loop first a Range with start=0, end=10 is constructed. Then next is called on it again and again, with the resulting value being used as i for the loop body every time, until next finally returns got_something=0. A moment’s consideration will show that indeed the loop body will run with i=0 then 1, 2, 3, ... 9, with the next call that yielded value=9, got_something=1 also incrementing start to 10 and advancing the Range to its final resting state of start=10, end=10. When next is called again it checks if 10<10, concludes the answer is no, and therefore returns got_something=0, terminating the loop.

Maybe this will be clearer with an image:

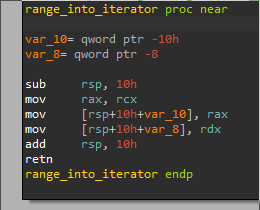

You can see here the industrious copying of local variables that doesn’t strictly need to happen that we talked about earlier. We manually assigned the names range__next and range_into_iterator (you already saw earlier the less intuitive names auto-provided as symbols). What does range_into_iterator do? Well:

It doesn’t do anything. There is a rich and amazing story here that really does not belong in the current section, so hold tight and we will get to it later.

Panics

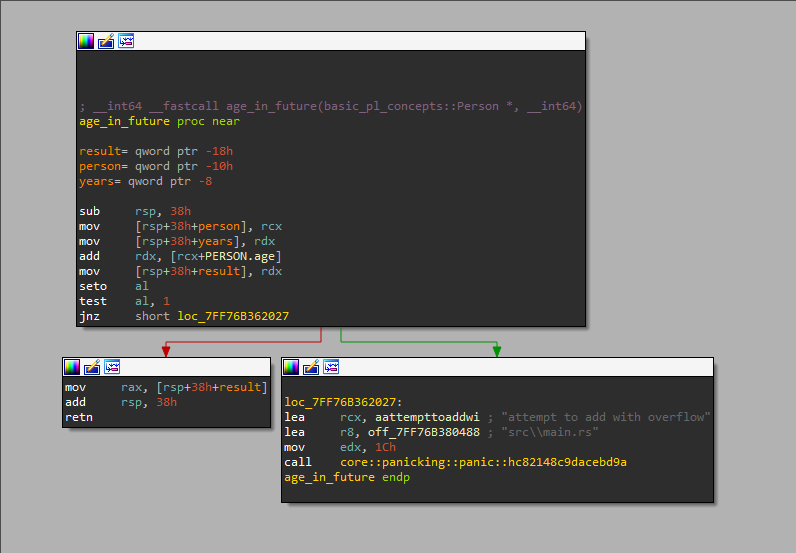

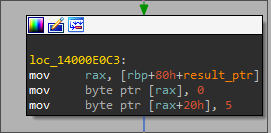

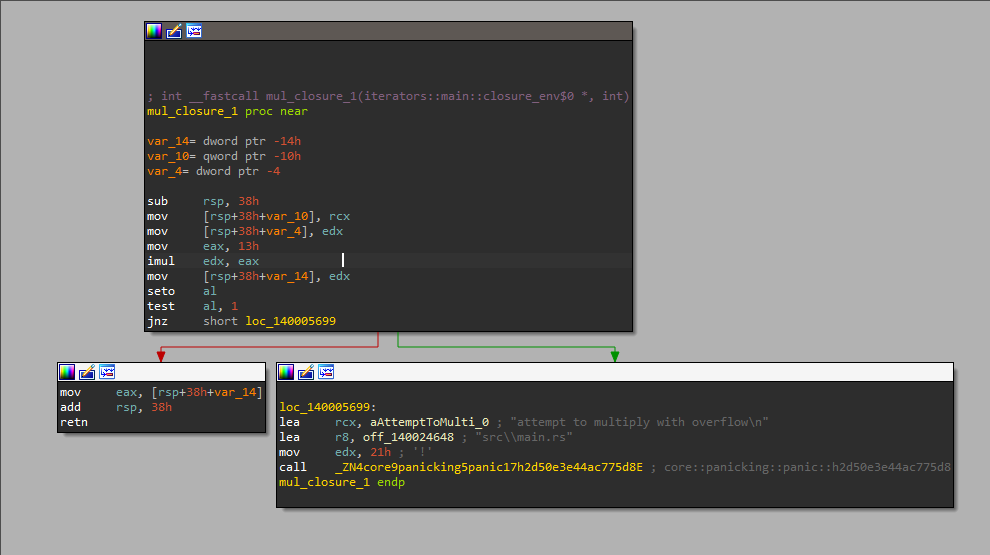

The relatively simple function age_in_years, when compiled, showcases a Rust feature called “panic”:

From looking at the strings involved it’s easy to understand more or less what’s going on, even without symbols. The code attempts to perform the addition as specified in the source, but if overflow occurs (checked using the seto instruction) execution flow is immediately diverted to a panic function call. A panic is similar to an exception, but catching panics is not idiomatic and generally frowned upon (error handling is done using a different mechanism, which we will encounter later).

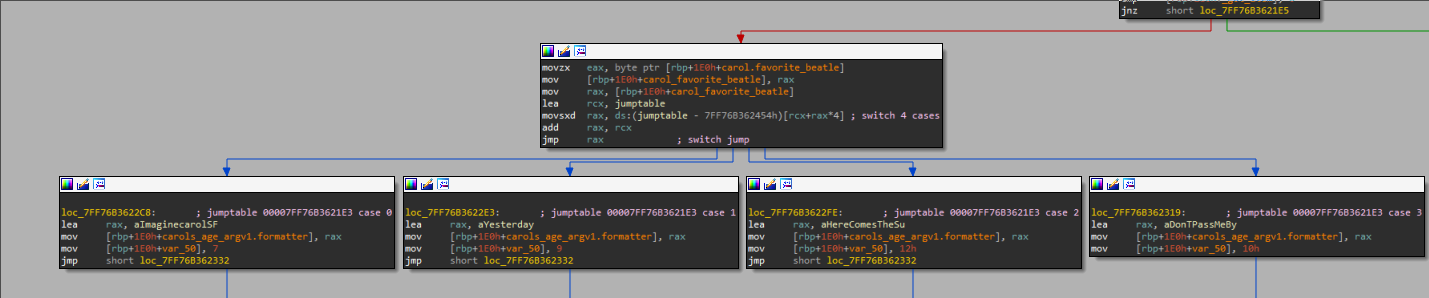

Match Statement

If Rust’s for loop is familiar at the source level and alien in its implementation, the match statement is the other way around: at first sight it appears strange, like possibly a close relative to C’s switch – and some of its more advanced features (not used in this program) are just outside the scope of C’s switch at all – but under the hood the two basically share the same implementation. The code that uses a match to infer Carol’s favorite song is compiled to the assembly given below.

Lost in Compilation

Note that the original code contained a constant ALICE_AGE declared inside a module but this was optimized away by the compiler. Some constants in the later programs are complex enough that the compiler dutifully includes them as hardcoded data, but as for modules, what happens here is a faithful representation of what happens usually. The compiler enforces module boundaries during compilation, but once that’s done, modules simply do not exist in binary-land. Later we will see several more examples of language features that don’t exist past the stage of compilation, or exist in a diminished capacity. We hope you’re not wondering where in the binary you can find the comments.

The Type System

This part covers Sum Types, Error Handling with Option and Result, Traits, impls and derives, and Collections (Vec, HashMap, …). The sample program used for this section (sample program 1) is available in full here.

Sum Types (aka enums, revisited)

A Sum Type is a type T that can be either one of a finite list of types T1, T2, T3, ... Tn. Technically something like this feature exists in C and is called “Unions”, but it is not very common in idiomatic C and was not designed to implement load-bearing logic; you can define a union MonetaryResource{char donor[50]; int account;} but there’s no out-of-the-box way to even check if a given MonetaryResource instance is a donor or an account. In Rust the situation is very different: a significant part of idiomatic language use – as well as the internal implementation of several language features, including error handling – hinges on Sum Types and their built-in features.

Rust supports sum types by allowing enum variants to have an associated object type. So, for example, you could write:

enum SampleSumType {

Number(i32),

Name(String)

}

let sample_name = SampleSumType::Name("Aaron");

let sample_number = SampleSumType::Number(997);

sample_name and sample_number both have the same type, which makes it possible for the same function to potentially return either.

It is tempting to now dive directly into the several instances of sum types in sample program 1 and analyze the assembly used to implement them. Trust us, though: it is better to first fully understand how Rust implements sum types in general, and only then go back to sample program 1.

For this purpose of illustrating how Rust implements sum types in general, we have constructed three programs: sum_types (here), more_sum_types (here) and truly_pathological_sum_types (here).

Let us, naturally, begin with the sum_types program. It starts off innocuously enough:

enum SumType0 {

JustOneOption(i64)

}

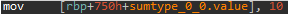

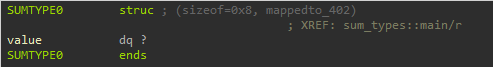

This is a degenerate sum type – there is only one possible variant of it (JustOneOption) which is a signed integer. This variant is instantiated in the main function with the line let sumtype_0_0 = SumType0::JustOneOption(10);. This source is compiled to the following assembly:

Later when the program checks which variant sumtype_0_0 is, the check is compiled away to nothingness – the compiler recognizes that there is only one possible answer, and just proceeds to the corresponding execution path directly. Straightforward enough, right? OK, clearly this can’t be done with every possible sum type, but we can’t blame the compiler for not keeping track of “which variant is this” when there is only one possible answer.

Let us proceed to the next sum type, which is defined as:

enum SumType1 {

OneOption(i64),

AnotherOption(i64)

}

This type can be either an integer, or an integer (what can possibly be the difference between an integer and an integer? NASA can tell you about that). Each variant is instantiated once:

let sumtype_1_0 = SumType1::OneOption(11); let sumtype_1_1 = SumType1::AnotherOption(12);

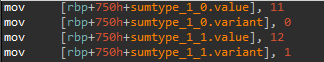

And this source is compiled to the following assembly:

So far so good. This is probably close to what you expected: a field keeping track of the instance’s variant, followed by the data itself. In some contexts, sum types are simply called “tagged unions” because of this natural way to implement them; the “variant” field is called the “tag”. When the program later checks which variant an instance of this sum type is, it simply checks if the tag is 0 (OneOption) or 1 (AnotherOption).

Confident in our grasp of how Sum Types are implemented, we proceed to the next one:

enum SumType2 {

OneOption(i64),

AnotherOption(String)

}

Again, in the main function, each variant is instantiated once:

let sumtype_2_0 = SumType2::OneOption(13);

let sumtype_2_1 = SumType2::AnotherOption(String::from("Fourteen"));

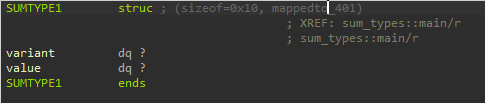

This source is, of course, compiled to the following assembly:

So now that we’ve got that finally sorted out, we can – wait, what?

Based on what we saw with SumType1, we’d expect the struct for SumType2 to include a tag – let’s say, 0 for OneOption and 1 for AnotherOption seems fine – followed by a String struct if it’s AnotherOption and a single qword for the integer of it’s OneOption. Maybe the OneOption variant will need to have some unused padding, to get it to be the same size as the AnotherOption variant.

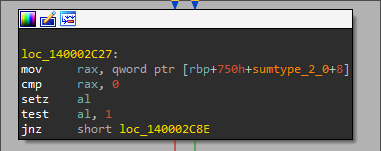

Except this isn’t what happens at all. There’s no tag anywhere, and it’s not clear how the program should be able to tell what variant it’s looking at. We turn to the part of the program which does just that:

match sumtype_2_0 {

SumType2::OneOption(x) => println!("2_0: OneOption, {}", x),

SumType2::AnotherOption(x) => println!("2_0: AnotherOption, {}", x),

}

This is compiled to the following assembly:

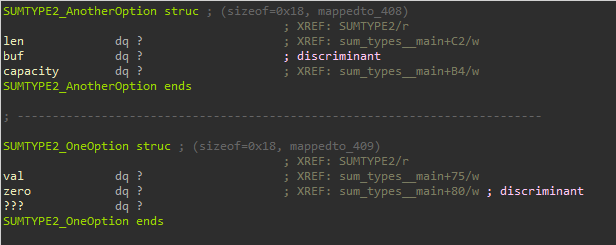

What’s happened here is that the compiler, in its quest for memory efficiency, has decided that it’s not going to do with 4 fields what it can do with 3. Instead of keeping a separate tag field, the compiler figured that it can piggyback off the String structure’s buf pointer. In case of AnotherOption, this pointer can’t be 0 (Rust doesn’t support that “feature”), so the possible value 0 is instead appropriated to signify”this is a OneOption instance”. Essentially a SumType2 is represented by these two possible structs:

This echoes what we saw with SumType0 in that it’s completely reasonable, and also apparently impossible to generalize. It happened that AnotherOption contained a field that had one certain impossible value, and the compiler capitalized on that. But what if that wasn’t possible? What would the compiler do?

Analyzing SumType3 unfortunately doesn’t help much with that specific question, but is still informative for other reasons, so we will dutifully go over it as well. It is defined like so:

enum SumType3 {

JustANumber(i64),

Happy(HappyStruct)

}

where:

struct HappyStruct {

a: String,

b: i64,

c: String

}

By now we know enough to suspect the compiler will piggyback off the buf pointer of the a string, and use a possible value of 0 to denote a JustANumber variant, and that’s indeed what happens. A point of interest is that the fields of the HappyStruct are shuffled during compilation, and it is kept in memory with b (the i64) first and the two Strings later. A C programmer would balk at this – what’s a struct worth if you can’t do pointer arithmetic on it? – but in Rust you’re Not Supposed to Do That(tm).

With some giddiness we reach the final type of the first sum_type program:

enum SumType4 {

Happy(HappyStruct),

Sad(SadStruct),

JustANumber(i64)

}

Where:

struct SadStruct {

a: i64,

b: i64

}

The main function instantiates the three possible variants like so:

let sumtype_4_0 = SumType4::Happy(HappyStruct {a: String::from("Eighteen"), b: 19, c: String::from("Twenty")});

let sumtype_4_1 = SumType4::Sad(SadStruct {a: 21, b: 22});

let sumtype_4_2 = SumType4::JustANumber(23);

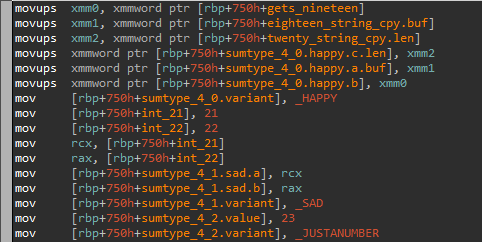

This compiles to the following assembly:

Look at all the xmmword moves! Fortunately we’ve already seen it’s just something rustc would rather do than move two consecutive qwords.

More importantly, the variant tag is back. The compiler must have reasoned approximately like so: “the first two fields can be anything, because in case of a Sad variant they can be an unsigned integer. So this leaves the c member of the Happy struct to piggyback off of. I only have one value that’s impossible in a Happy variant and that’s 0. Which variant will it signify? I can’t signify both Sad and JustANumber, so… all right, a variant tag it is”.

Through analyzing these examples, we’ve reached an understanding: there is no one single implementation of a sum type, exactly. The compiler reasons about where and how it can piggyback off a struct field’s otherwise impossible values. This field can then be used to discriminate between the different possible variants, and for this reason it is called the sum type’s “discriminator”.

Two particular sum types that are of special interest to us are Option and Result, which are part of the Rust standard library. Right now we will deliberately ignore their actual idiomatic use and view them simply as two more examples just like the many sum types we’ve already seen, because fundamentally that’s what they are. Consider the following simple sum type:

enum SumType6 {

OneOption,

AnotherOption(i64)

}

Well, the standard library’s Option is exactly this, other than the name labels.

enum Option_i64 {

None,

Some(i64)

}

There’s also an Option_String, an Option_Happy and an Option_(Your Favorite Type Here). In actual code you’re not going to literally see Option_i64 and instead it’s going to be Option<i64>, and Option<String> and Option<Happy>. You are free to not worry about the exact mechanism at play here, but we explain it later anyway.

Result is very similar to Option. Consider:

enum SumType7 {

OneOption(i64),

AnotherOption(String)

}

Other than the name labels, a Result is exactly the same thing:

enum Result_i64_String {

Ok(i64),

Err(String)

}

Again as with Option, there’s a Result type for whatever two types you want to pick other than i64 and String, and in the source you’re going to see the above type as Result<i64,String>.

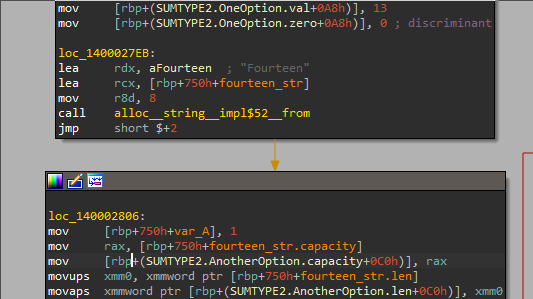

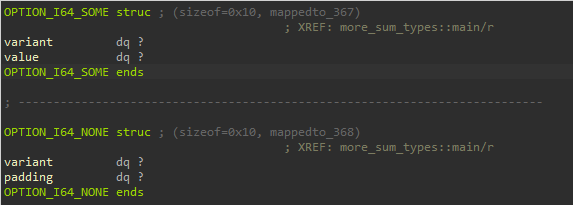

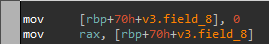

Armed with our intuition about how Sum Types are implemented in assembly, we can proceed to the program more_sum_types where several Options and Results are defined. For example, two Option<i64> instances:

let v1 : Option<i64> = None; let v2 : Option<i64> = Some(31);

The compiler is forced to allocate a variant tag for Option<i64>, because there is no “impossible value” that an i64 cannot legally take. This tag is 0 for a None variant and 1 for a Some variant, like so:

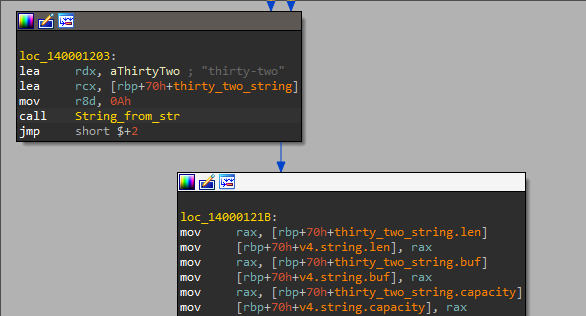

This is followed by instantiating two Option<String> variables:

let v3 : Option<String> = None;

let v4 : Option<String> = Some(String::from("thirty-two"));

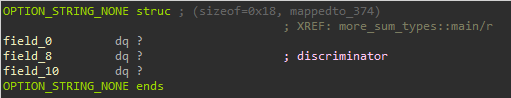

By now we know enough to guess what the compiler is going to do:

The struct of an Option<String> is given below.

If you’re interested in how aggressive the compiler can get in optimizing sum type sizes, you’re welcome to take a look at the program truly_pathological_sum_types (here) and its compilation output. Among other things, a sum type with only one member and no data is optimized into literal nothingness, and a sum type with 3 variants none of which contain any data is implemented as a single-field integer.

We’re going to see some more surprising examples of sum type construction right in the next section, but now we’re mentally ready to deal with sum types when they appear in assembly. In particular, if some struct field is suddenly compared to a small integer and this is followed by a branch, consider that you might be dealing with a sum type and react accordingly, starting by creating the variant structs as seen above.

Error Handling with Option and Result

Consider the following code:

fn age_in_future(&self, years: &impl quackslike::_i64) -> Result<i64, Error> {

if *years < 0 {

Err(Error::NegativeYear)

} else {

Ok(*years + self.age)

}

Forget about that impl quackslike argument type, we’ll get to that soon. Just pretend for the time being it says years: &i64 instead. For now focus on the function return type, which is Result<i64,Error>; as we just saw in the previous section, this means the function returns either an Ok(i64) or an Err(Error) (and both can be seen to happen in the function body). Here Error is a simple enum that lists things that can go wrong in functions from the person module:

pub enum Error {

EmptyName,

NegativeYear

}

This is how sum types are used for handling errors, or – more subtly – situations where sometimes a value is there, and sometimes it… isn’t. A classic experience with dynamic languages is writing e.g. res = get_num() + 5 in Python and getting hit with a run-time TypeError because get_num() returned None. In Rust, get_num would have a return type of Option<i32> and the compiler would complain at compile time that you are trying to add an Option<i32> and a plain i32. (If you find yourself saying “wow! I actually want that feature in Python”, you might want to check out mypy). Similarly, a function that can fail in an interesting way – such as the above age_in_future – should idiomatically return a Result.

How is Result<i64, Error> implemented in Assembly? We already know the answer. Ok(i64) can take any numerical value, and so this sum type can’t fit in one field because the discriminator would have nowhere to go. The compiler is forced to use 2 fields: a tag field signifying the variant followed by either an i64 or an integer value from the Error enum, depending on the variant.

Option and Result appear several more times in sample program 1. One particularly interesting place an Option shows up is in the definition of the _Person struct:

pub struct _Person {

name: String,

age: i64,

favorite_beatle: Option<Beatle>

}

A Person can have a favorite Beatle, or lack a favorite Beatle. That’s straightforward, but how is it going to look in assembly? Take note that rustc constructs Option<Beatle> as a sum type, not _Person. So it’s not going to piggyback off the buf pointer of the String in the name field: the algorithm for deciding assembly representation of a struct works bottom-up, and it’s first going to ask itself “how do I implement an Option<Beatle>” and only once that’s decided it’s going to move on to the question of how to represent an entire _Person. So, given that we already know how String and i64 look when compiled, what we’re really asking about here is an implementation of an Option<Beatle>.

You might groan “stop, I get the point already” but actually this triggers compiler behavior that we haven’t seen in any of the earlier examples. A convenient function to showcase this behavior is implied_favorite_song, which behaves conditionally on a Person having or not having a favorite Beatle:

pub fn implied_favorite_song(beatle: Option<impl quackslike::Beatle>) -> String {

if beatle.is_none() {

return String::from(song_name::MACARENA);

} else {

// (...more conditions here...)

}

}

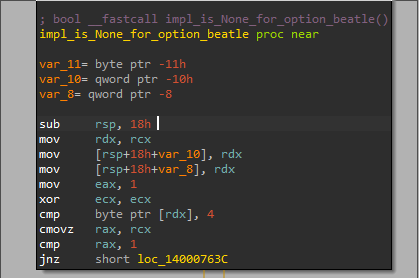

The is_none() function used above is comprised of the following assembly:

Option<Beatle> is still a single field just like Beatle, but now it supports another value: 4. 4 is None! Having seen everything we’ve seen, and knowing how the compiler approaches the construction of sum types, in retrospect this makes perfect sense: a Beatle can only take the values 0, 1, 2 or 3, so when tasked with representing an Option<Beatle> of course the compiler would piggyback off of the myriad other impossible values and simply encode “No Beatle” as a phantom fifth Beatle.

The true cherry on top is the function person::new which returns a Result<_Person, Error>. The compiler already has as given fact its own implementation of _Person that we had just discussed, and now must construct an assembly representation for the sum type Result<_Person, Error>. So what does it do?

It posits the sixth phantom Beatle. If she’s your favorite then you’re an Error, not a Person. Note that to do this the compiler needs to have kept track of the possible values that an Option<Beatle> can obtain.

Having walked this entire road, we can look nonchalantly at the above image and see nothing unusual about it. Of course that in order to understand whether a Person has been successfully constructed or not, the program is going to ask that person whether they happen to really like the Phantom Sixth Beatle. But for someone trying to cold RE a Rust binary using only C reversing experience, this is maybe not super intuitive, which is why we insisted on the long scenic route of seeing a lot of sum type examples first, and only then analyzing their application in sample program 1.

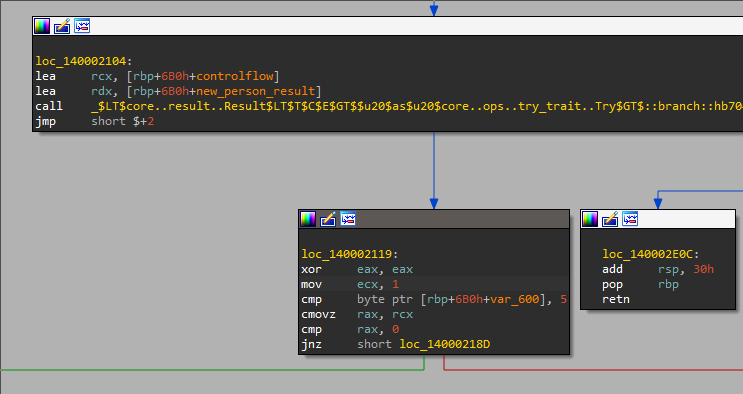

The Try Operator (?)

It is possible to perform fairly idiomatic error handling in Rust using just the machinery described so far. But checking for errors after every line can quickly get cumbersome, especially in functions that perform many operations in sequence that can each fail (see for instance this typical lament of a Golang programmer and the proposed solution).

For this reason Rust supports the “try operator”, written as the sigil ?. This operator can be appended to a Result, or an Option, or any type with “possible-fail-nature” (we’ll later explain what exactly we mean by that). For a Result the try operator instructs the program to check if it’s an Err variant. If so, function execution stops and the Err value is returned immediately. Otherwise, the Ok(v)? evaluates to simply v. Similarly in the earlier example where res = get_num() + 5 wouldn’t compile because get_num() returns an Option<i32>, we could instead write res = get_num()? + 5 and this would be fine, as long as the function we’re in also returns an Option<i32>.

The try operator appears in several more places in sample program 1; for example the main function, which returns Result<(),Error>, initializes the Person object alice like so:

let alice = person::new(&String::from("Alice"), &_const::age::ALICE, Some(&Beatle::George))?;

Here person::new produces a Result<_Person,Error>. If the person::new call succeeds and returns an Ok(Person) variant the ? simply unwraps the Result and assigns the Person value to the variable alice. The main function then continues execution. But if person::new fails and returns an Err(something_or_other), the ? operator causes the main function to return immediately, with that Err(something_or_other) as the return value.

Understanding the way this is implemented in assembly is just understanding sum type construction over again. Under the hood, to implement ? the compiler calls the function branch on the Result object returned by person::new. This function takes a Result<_Person,Error> and returns the below ControlFlow sum type:

enum ControlFlow_Person_Error {

Residual(Result<Infallible,Error>),

Output(Person)

}

Where Infallible is basically a placeholder variant that never gets instantiated. So at the assembly level Result<Infallible,Error> is going to be identical to an Error, and so the construction of this sum type is subject to exactly the same constraints as a Result<_Person, Error>. This leads to the presence of our friend, the Phantom Sixth Beatle. The compiler checks against the value 5 to ascertain whether it is looking at a Residual variant (stop execution and return) or an Output variant (continue execution), in exactly the same way it performed the check for Result<_Person,Error>, and for the same reason.

Traits, inheritance, impls

This is a meaty subject which influences compiler behavior in important, indirect ways. It is also a building block of many basic Rust features; a lot of explanations in the sections before this one are simplified to gloss over the pivotal role that traits play.

A trait is a basically set of function declarations. It is a lot like an interface from your favorite OOP language like C++ or Java. Here is a trait from sample program 1:

pub trait Person : Sized {

fn name(&self) -> String;

fn age_in_future(

&self,

years: &impl quackslike::_i64

) -> Result<i64, Error>;

fn favorite_beatle(&self) -> Option<Beatle>;

}

Again, forget about &impl quackslike::_i64 for a moment and pretend it just says i64, we’re almost at the part where we explain that. The above means that in order to satisfy the Person trait, a type needs to implement these three functions: name, age_in_future, and favorite_beatle, with the given signatures. Person : Sized is inheritance syntax – to implement Person a type must first also implement Sized. This is the only kind of inheritance that Rust supports. No inheritance of classes / structs, no public, private, protected.

Sample program 1 defines a struct _Person and then implements the Person trait for it:

pub struct _Person {

name: String,

age: i64,

favorite_beatle: Option<Beatle>

}

impl Person for _Person {

fn name(&self) -> String {

self.name.clone()

}

fn age_in_future(&self, years: &impl quackslike::_i64) -> Result<i64, Error> {

if *years < 0 {

Err(Error::NegativeYear)

} else {

Ok(*years + self.age)

}

}

fn favorite_beatle(&self) -> Option<Beatle> {

self.favorite_beatle

}

}

Traits support a convenient “and” operator using the sigil +. For example, one of the traits defined in sample program 1, inside the quackslike module, is given below.

pub trait _String : PartialEq<String>+Into<String>+Clone+Display {}

This means that to implement quackslike::_String a type has to implement PartialEq<String> (can compare with String), Into<String> (can be converted to a String), Clone (can be deep copied) and Display (can be pretty-printed). The trait body is empty {} which means that quackslike::_String is defined as the conjunction of all these other traits, and nothing more.

How does this influence the assembly? As we said, not by much directly. Traits are mainly used to enforce type safety and other sorts of boundaries. Once the compiler verifies that nothing is out of place and all the types implement the traits they should, in assembly-land all the traits outright vanish, and all the impl items are just functions. There are no vtables (ok, you can get them if you really want to, and we’ll later see how, but forget about that for a moment).

With that said, we can think of at least two good reasons that an understanding of traits is useful for reverse engineering Rust binaries.

The first reason is that traits are everywhere in Rust. Every single thing we’ve discussed so far, we’ve had to bend over backwards to talk around how the thing is built out of traits. The for loop that operates on a Range is possible because Range implements the IntoIterator trait, allowing it to be converted to something implementing Iterator so that next can be called on it. That function, branch , which was called on a Result to implement the ? operator, could only be put there because the Result type implements the Try trait. All the println!s can only work because the names, numbers and strings implement the Display trait. As a consequence, many (most) functions in a Rust program are going to be there in their capacity as an impl of some trait for some type. Sure, at the assembly level they’re just functions, and you can technically go forth and analyze them as usual; but as a general plan, it’s lunacy.

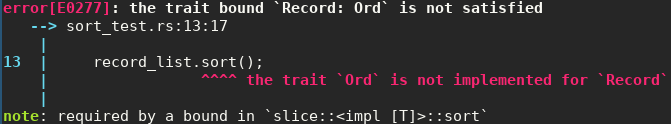

The second, and more material reason, is that the trait system and the way it is implemented completely breaks the typical analyst’s preconceived notions of boundaries between what’s thought of as “library code” vs “user code”. Let’s look at a simple example: suppose Ella is writing a Rust program involving a list of birth records including name, year and place of birth. At some point she wants to sort the list and calls record_list.sort(). She then attempts to compile the program and gets this error:

Ella doesn’t have to be an expert on the Rust type system to understand she needs a working implementation of impl Ord for Record. Soon, she has the implementation working, and the program compiles successfully. Now, three months later, you’re trying to analyze the resulting binary and specifically understand the logic by which the Records are sorted. In another programming language the de-facto idiom would be for Ella to just write her own sort from scratch, or at least for the comparison method to be called via dynamic dispatch, and be accessible directly via some kind of vtable for Record. Instead, the Rust standard library has generated an impl sort for Record which calls presort_0, sort_shape, dijkstras_super_optimized_secret_subroutine, each of which calls 6 more subfunctions, repeatedly for several levels until finally one of these sub-sub-sub-sub-… functions calls impl Ord for Record, spliced directly into the call tree as a plain function address, to decide which of two Records should go first. This is the one exact function that contains the answer to your original question, buried under 6 function call levels of standard library code. Good luck!

Because of this, reverse engineering a Rust binary can require a shift in perspective. Identifying library code is still essential, but there’s no longer the convenient guarantee that user code is always going to meet library code neatly by calling it and providing user variables as arguments. Instead one must deal with inconvenient questions: What is this library code doing? Is it going to call a user-defined impl? Which? Where?

It is also possible to directly implement functions for a given type, without involving a trait, inside an impl SomeType {...} block, or to ask the compiler to automatically implement a trait for a specific structure by invoking #[derive(SomeTrait)]. The discussion in the next section will help shed some light on how the resulting assembly behavior compares to that created by implementing a trait in the usual way.

impl trait

Designing software around traits has many advantages, probably too many to give a full exposition here. First it has all the traditional advantages of vanilla OOP (modularity, inheritance, encapsulation, …); then the advantages of interface-based design over vanilla OOP (specifying contract without behavior, allowing otherwise unrelated objects to share code, …), then finally the advantages that arise from traits being “tags” that freely apply to types, and are not themselves part of a type hierarchy (here is a good explanation on this subject).

So, it is only natural that we should be able to create a function that takes an argument not of some concrete type, but “some type that implements SampleTrait”; and even to create a function that returns such a type. Rust has two mechanisms for this, but one of them is much more syntactically simple than the other so we’ll talk about it first. This is called impl trait and it works exactly like you’d figure: Instead of writing an actual type, you can (in some situations) instead write impl SampleTrait.

Finding an example of this in sample program 1 is not too difficult. Sample program 1 is spammed to the brim with impl traits. Probably the most egregious example is this function from the state_verbose module:

pub fn future_age(p: &impl Person, years: &impl quackslike::_i64) -> Result<impl Display, Error> {

Ok(format!("In {} years, {} will be {}", years, p.name(), p.age_in_future(years)?))

}

This function takes and returns impl traits. The quackslike module was specifically created to allow use of impl trait here instead of a concrete type. For example, the quackslike::_i64 trait is defined in the following way:

pub trait _i64 : Add<i64,Output=i64>+PartialOrd<i64>+Into<i64>+Copy+Display {}

In English, this says that something “quacks like” an i64 if you can add it to an i64 and get an i64 back out; and can compare it to an i64 to see which one is larger; and can convert it to an i64; and it has a well-defined deep copy operation (which i64 itself trivially does), and can be printed to the terminal.

This is what we earlier meant when we said “just pretend it says i64”, because what other type is really going to satisfy all those requirements? Well, that’s neither here nor there; the important thing is that we get to see how the compiler implements impl trait, and the interesting thing is that there are two answers, because impl trait as an argument and impl trait as a return value behave differently.

Let’s start with impl trait as an argument. Some piece of code calls state_vebose::future_age with a list of parameters, and so the compiler duly checks that each of the given types indeed impls the correct trait: the type of the p argument (let’s call it TP) implements Person and the type for years (let’s call it TY) implements quackslike::_i64.

The compiler then generates a function from scratch: state_verbose::future_age_TP_TY(p: &TP, years: &TY) -> Result<impl Display, Error>. Where p.name() is called, the compiler inserts a call to the function impl name for TP (this is an abuse of notation: we mean the function name as it appears in impl Person for TP, but this is the last time we will mention this pedantry). The same goes for the subroutine age_in_future. Its declared signature is

fn age_in_future( &self, years: &impl quackslike::_i64) -> Result<i64, Error>;

The compiler generates a age_in_future_TY(&TY) -> Result<i64, Error>, and this is what state_verbose::future_age_TP_TY calls internally. Every other call to state_verbose::future_age using some sequence of types other than TP and TY would result in another function at the assembly level state_verbose::future_age_SomeType_SomeOtherType to accommodate the call, including all the required subfunctions and sub-sub-functions. This is called monomorphization, and its importance for understanding the structure of Rust code cannot be overstated. First of all, it means that the execution path of calling some function on a given list of arguments is determined at compile time, in contrast to “dynamic dispatch” where a run-time pointer can influence which function will be called. Second of all, it means that when looking at a Rust function the cardinal question is “is this an impl? Of what function? For what type?”.

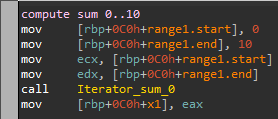

Also consider that different monomorphs of the same function will tend to be structurally similar, but this is not generally true for different impls. For example, state_verbose::future_age is going to look more or less the same no matter what types it was monomorphized for; the call targets for name and age_in_future will vary, but otherwise the function structure will stay the same (barring inlining and all of that, but let’s not try to swallow every possible problem at once). Below is a bird’s eye view of future_age monomorphized for i64 and _Person, with the calls to name and age_in_future highlighted in red.

If this function is monomorphized for another sequence of arguments types, these two calls will be to the appropriate impls of those two functions, but otherwise the function structure will remain the same. In contrast, every type implementing Person is free to implement name however it sees fit (_Person just accesses a private field, but maybe _RemotePerson accesses a remote DB?).

The same “arbitrary structure” phenomenon applies to direct impls that are just defining methods for some structure, without involving a trait; and to the output of #[derive(SomeTrait)] which is so struct-specific it had to be handed directly to the compiler. (If this sounds too theoretical, think of a function that prints an integer vs a function that prints a list of integers; they are obviously different, and there is no “ah, let us just call the print function” moment – that is what the compiler is trying to implement in the first place!)

As we alluded to before, this has important implications for your favorite function similarity engine, and how much you can trust its output. If your similarity engine slaps the label impl some_func for SomeType on a function and you actually suspect this verdict is not just a hallucination and the engine is on to something, consider whether this impl is a monomorph; if it is, then take the impl some_func part seriously, but the for SomeType part not so much. In itself this is not such a great setback: when dealing with impls, identifying what the impl does is much more critical than identifying what type it is doing it to. The second question can be answered from the context, or from any 9 other impls being invoked on the same object, and so on. The first question is just a black hole of ignorance until dealt with directly.

One way or another, once all the required functions are monomorphized and assembled, the compiler doesn’t really have much left to do. The required concrete types already exist, and so do the functions.

impl trait as a return value type is a different story. The source doesn’t specify a concrete type at all. The developer can call future_age, get its return value which is an impl Display, then print it, without knowing or caring what the type actually is, or what it’s called.

In this case, under the hood the compiler creates a new type: “the impl Display type returned by future_age”, which we will name TidTrbf for the sake of convenience. Assembly-wise TidTrbf is going to be identical to the String returned by format!, because TidTrbf needs to implement Display somehow; and String’s impl functions will be used every time some function from Display is called with a TidTrbf as an argument. Within the source code, there is no way to define a new variable of type TidTrbf, or even refer to the type at all; it cannot be named (developers formally refer to such a construction as a Voldemort Type).

Containers (Vector, HashMap, HashSet, …)

The Rust standard library provides several container types (the full list is here). They each support a truly enormous list of functions (e.g. see here the methods supported by Vec) – some implemented directly, others via an implementation of a trait. For almost anything you might want to do with one of these, the implementation will depend on the element type (a vector of what? Strings? Persons?) and so is going to be a monomorph. The good news is that this implies function auto-recognition has good theoretical potential to tell you a vector is being manipulated, and what’s being done to it. The bad news is the possibility of things like the vector.sort() scenario we had discussed earlier, where one of these is going to call a piece of user logic. But at least now you know about that, and knowing is half the battle.

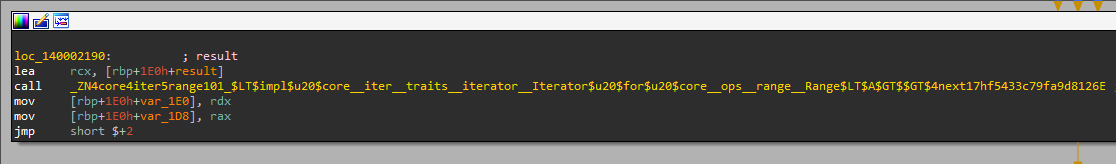

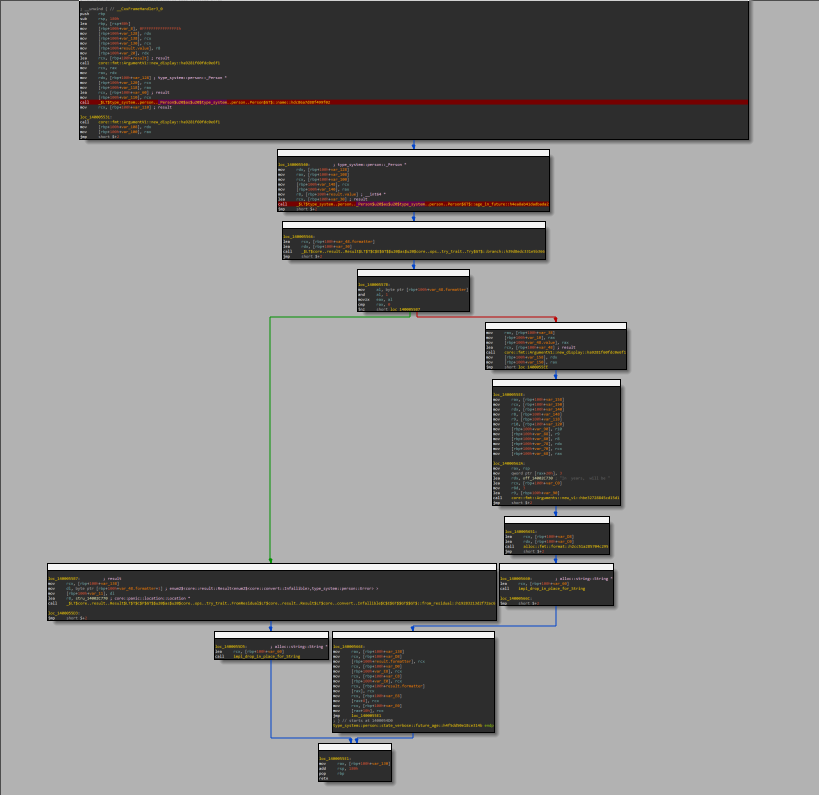

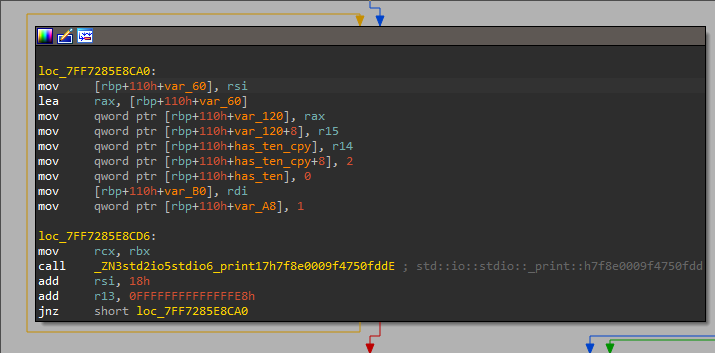

This code from sample program 1 constructs a vector and then iterates over the Vector using a for loop in order to construct a HashMap:

for person in vec![&alice, &bob, &carol, &david] {

age_by_name.insert(

person.name(),

person.age_in_future(&0)

?);

}

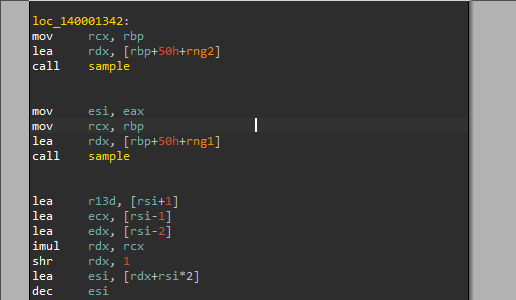

We have already discussed every single thing you need to know to understand how this will look in the assembly. Let’s look how it bears out in reality. The below image illustrated the basic call structure and where the relevant function calls are situated (that is: no, you’re not supposed to try and read the assembly).

Highlighted in red, from top to bottom:

IntoIter<type=&_Person>object, which is the result of callinginto_iteron theVec<&Person>created by thevec![...]macro, moved to local variable.- Call to

impl next for IntoIter<type=&_Person>(IntoIter<type=&_Person>implementsIterator<type=&_Person>) - Call to

impl name for _Person(_PersonimplementsPerson) - Call to

impl age_in_future for _Person(_PersonimplementsPerson) - Call to

impl branch for Result<i64, Error>(Result<i64, Error>implementsTry<Output=i64,Residual=Result<Infallible,Error>>) - Call to

impl insert for HashMap<String,i64>(HashMap<String,i64>implementsinsertdirectly)

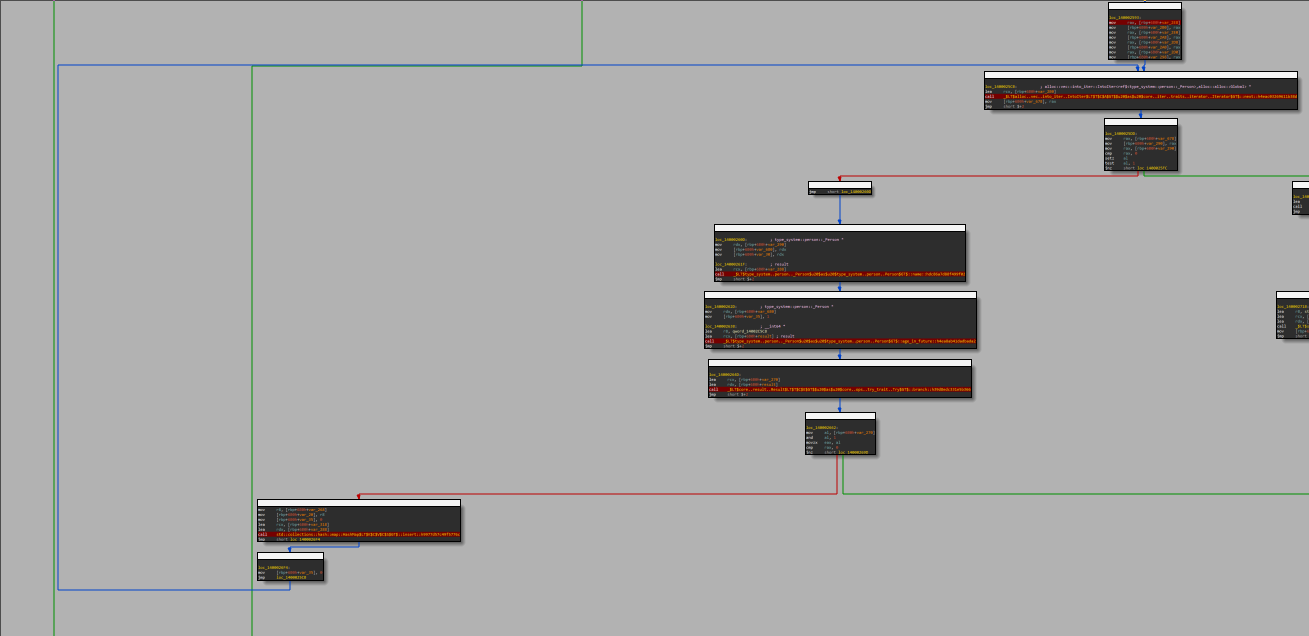

The inner workings of these container types can get fairly complicated (e.g. HashMap includes a random seed and an implementation of SipHash 1-3), but Vec, at least, is pretty straightforward, and is identical to the in-memory layout of String that we saw earlier. Below is the in-memory evaluated vec![&alice, &bob, &carol, &david].

Advanced Features

This part covers generics, trait bounds, the borrow checker, iterators, closures, Box, trait objects, as well as a footnote about the parallelism library Rayon and a brief exploration of release builds. A lot of these features have been effectively discussed already in one of the earlier sections, or have surprisingly little influence at the assembly level, or are just simple and straightforward in their implementation. The major exception is iterators and closures. Yes, we’ve already talked about iterators, but we haven’t really talked about iterators.

The sample program used for this section (sample program 2) is available in full here.

Generics

Actually we’ve talked about generics an awful lot up until now. Instead of saying the word “generics” we just implicitly assumed they were there, because without them very little of everything we’ve discussed makes proper sense. Everywhere you see these angular brackets <>, that’s generics at work. We’ve talked about types like Option<i64> and Result<Person,Error> and just assumed that these implement various traits and have various properties, but this is too much clever logic for the compiler to handle from the ground up. Instead, the Rust standard library has definitions and implementations for an Option<T>. The compiler can take that and understand how to deal with an Option<i64> when it needs one.

Generics are a powerful and flexible system, but actually we’ve already explored most of what makes them interesting when doing RE work – mostly when we earlier discussed impl trait. Specifically, impl trait in argument position is just a convenient shorthand that Rust supports for a common idiom when using generics. To illustrate this, the following from sample program 1:

pub fn future_age(p: &impl Person, years: &impl quackslike::_i64) -> Result<impl Display, Error>

is rewritten equivalently in sample program 2:

pub fn future_age<T1,T2>(p: &T1, years: &T2) -> Result<impl Display, Error>

where

T1: Person,

T2: QuacksLike<i64>+Algebra<i64>+Copy

These lines in the where clause are called trait bounds. It is also possible to write more succinctly future_age<T1: Person, T2: QuacksLike<i64>+...> and so on without a where clause. Note the introduction of the generic traits QuacksLike<T> and Algebra<T> which replace the awkward quackslike::_i64. Their definitions are below:

pub trait QuacksLike<S> :

PartialEq<S>

+Into<S>

+Clone

+Display {

// no addditional requirements

}

and

pub trait Algebra<S>:

Add<S,Output=S>

+Sub<S,Output=S>

+Mul<S,Output=S>

+Div<S,Output=S>

+PartialOrd<S> {

// no additional requirements

}

Notably, trait bounds in themselves don’t support impl trait in return position (if you try, the compiler complains you’re trying to return some type R that implements e.g. Display, but it still doesn’t understand what type you mean). impl trait in return position is a separate feature internally, and is not a synonym for any trait bound syntax. At any rate, we’ve already discussed at length the influence generics have on assembly output while exploring the construction of sum types and later the implications of monomorphization.

Ownership, Borrowing and Lifetimes

This is, very probably, the most infamous part of Rust language. Rust has memory safety without a garbage collector. This is achieved by enforcing a rigid set of rules that sometimes feels like the compiler hates you on a personal level, and has decided that the best way to prevent run-time errors is just to make sure that your code never, ever successfully compiles. It starts with jolly surprises like

let v1 = Coordinate{x:3, y:4};

let v2 = v1; //Ok

let v3 = v1; //ERROR!!!

And gets even better from there.

Happily, the borrow checker does not survive the trip to assembly-land. Unlike the trait system, where there are no traits in memory but you can still say “the program is constructed this way because of the trait system”, with the borrow checker you can’t even say that. The borrow checker runs during compilation, and once it’s satisfied, all the structs are just structs, and all the references are just pointers like in your first C program. Maybe “you probably won’t find a null pointer dereference even if you look really hard” counts as a way the program is constructed because of the borrow checker? One way or the other, it seems like after all these years, we’ve finally found one front on which the developer writing the code suffers more than the reverse engineer who has to understand the assembly later.

This is the last we’re going to talk about borrowing and lifetimes in this document. For example, if two code snippets from now you’re having the nagging thought “Wait, move? What is that there for? What does it do?” then simply stop having that thought.

Iterators and Closures

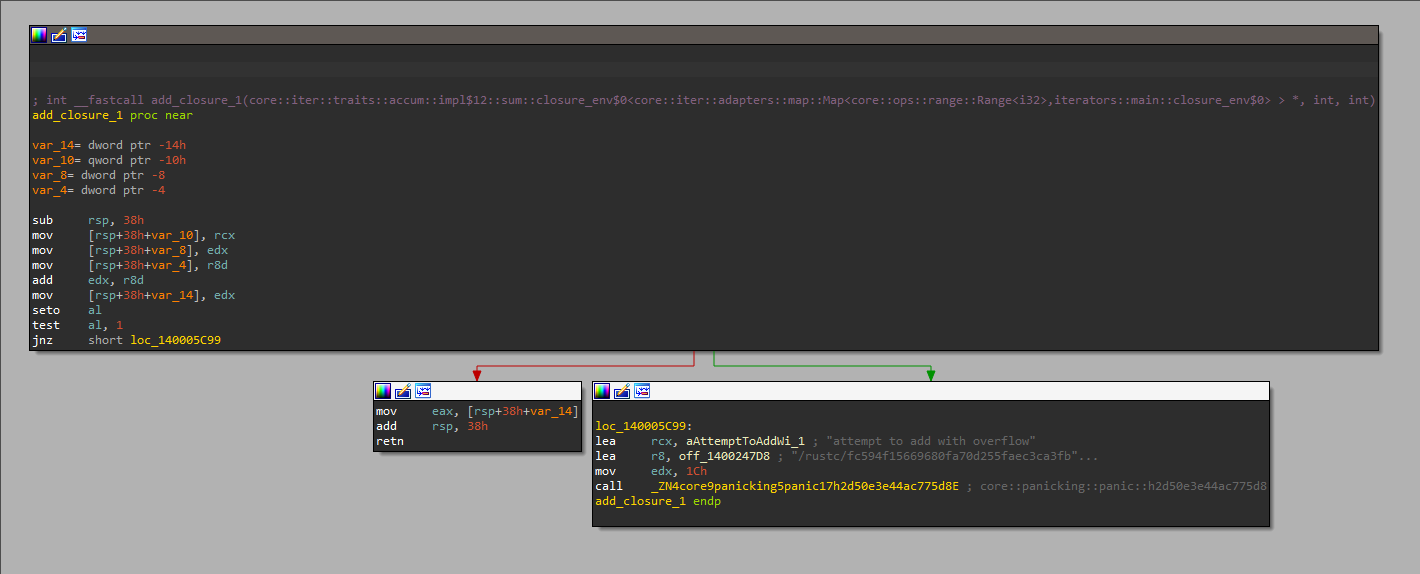

Recall how, when introducing sum types, we opted to first explore auxiliary programs containing the simplest possible examples, and understand the way the features are implemented that way; we’re going to do that again. In case you can’t see that for the warning sign that it is, and you’re thinking “what, you mean iterators like the one used in that for loop?”, we further include the below excerpt from sample program 4’s main function:

//state everyone's future ages, starting 6 years in future

people

.values()

.cartesian_product(0..10)

.filter(|(_,y)| far_enough_in_future(y))

.map(|(p,y)| state_verbose::future_age(p, &y))

.collect::<Result<Vec<_>,Error>>()?

.iter()

.for_each(|s| println!("{}",s));

This is the world we are entering now; an imposing world full of filters, maps, Cartesian products and ::<<<_>,>>()?s. Some people testify that this is their favorite feature of Rust, and when writing Rust they try to use it as much as possible. So, whether we like it or not, any analysis of Rust binaries should deal with iterators at this level.

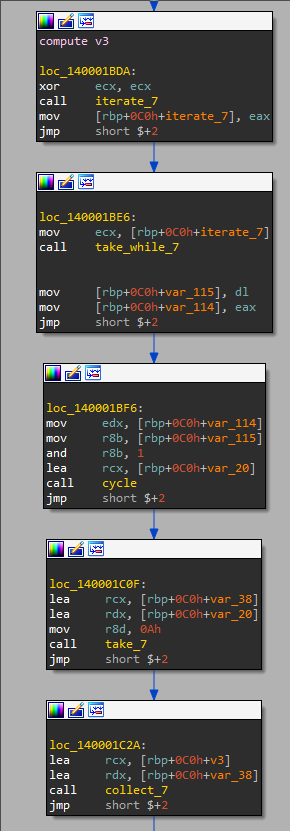

Having seen that, let’s unsee it, and talk in brief about closures. A closure is a shorthand for declaring an anonymous function. In some programming languages there’s a similar concept called “lambdas”, e.g. in Python you can write lambda x,y: 2*x+3*y, and in Rust you would instead write |x,y| 2*x+3*y. Closures are especially useful when a function needs another, typically simple, function as input. An important feature of closures is their ability to capture environment variables, like so:

fn get_closure() -> impl Fn(i32,i32) -> i32 {

let z = 10;

move |x,y| x+y+z

}

fn main() {

assert!(get_closure()(30,2)==42)

}

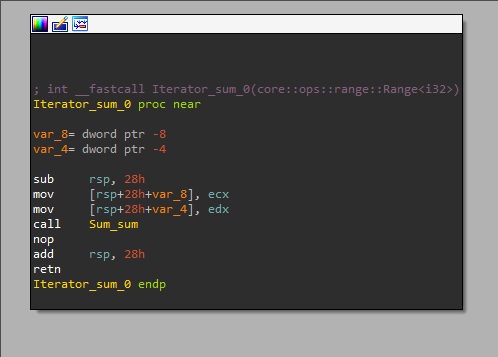

With that explained, we are ready to properly approach the subject of iterators. Just like with sum types, we’re going to look at some basic iterator manipulations, explain what they do, then see what the resulting assembly looks like. All the examples below can be found in the iterators sample program, here. We begin with

let x1 =

(0..10)

.sum::<i32>();

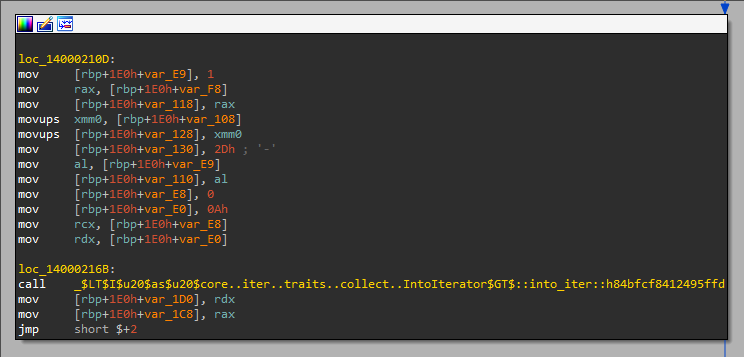

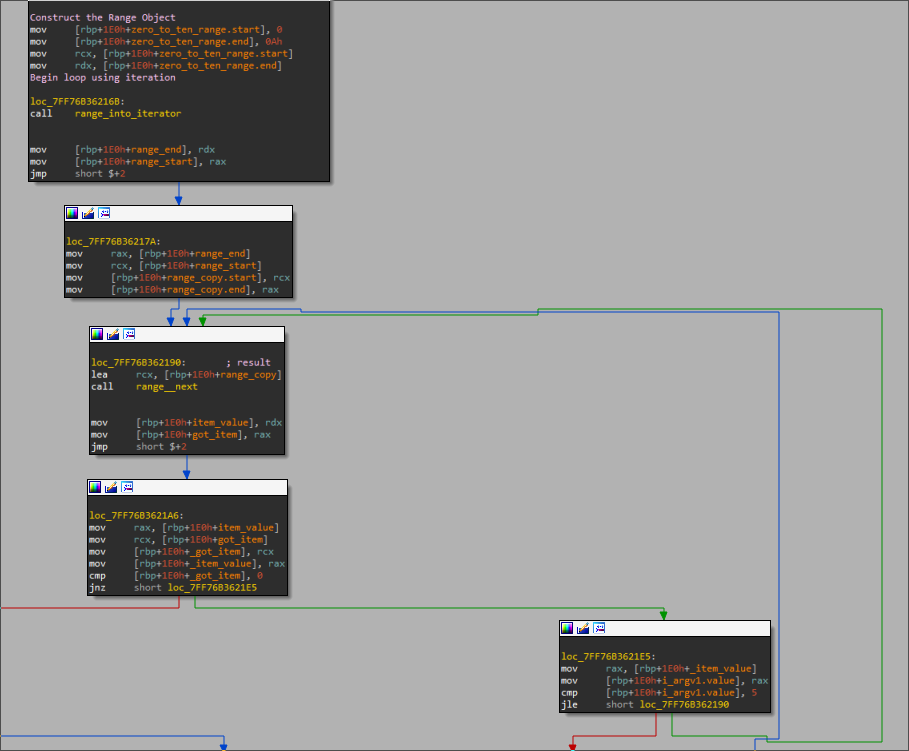

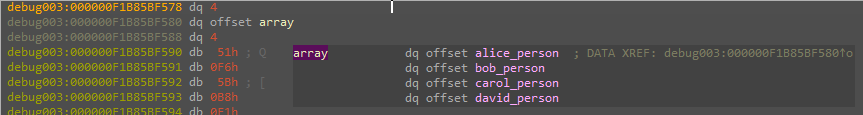

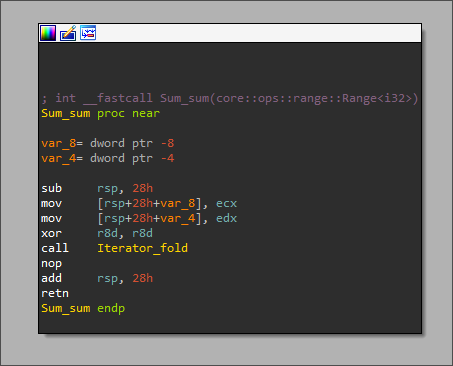

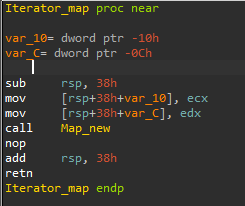

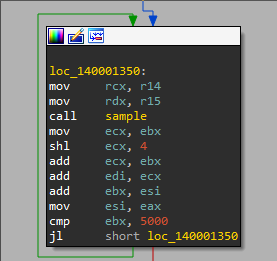

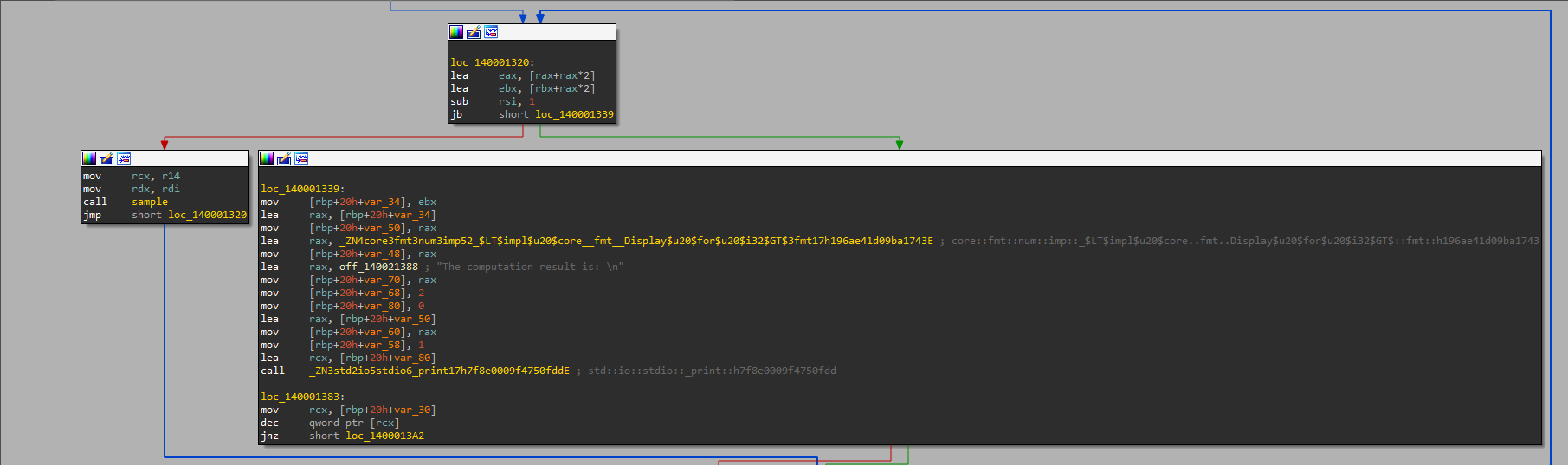

0..10 is a Range<i32>, which implements Iterator<type=i32>. sum is an iterator consumer that takes an iterator and produces an object, in this case an i32. As a result, x1 is assigned the sum of all numbers in the range 0..10. This simple ask, when compiled into assembly, generates the following code:

That doesn’t look so bad. Unfortunately, if we stop here without investigating what happens down the call chain to sum, we’re going to have a bad time later. So let’s take a look.

Iterator::sum calls Sum::sum:

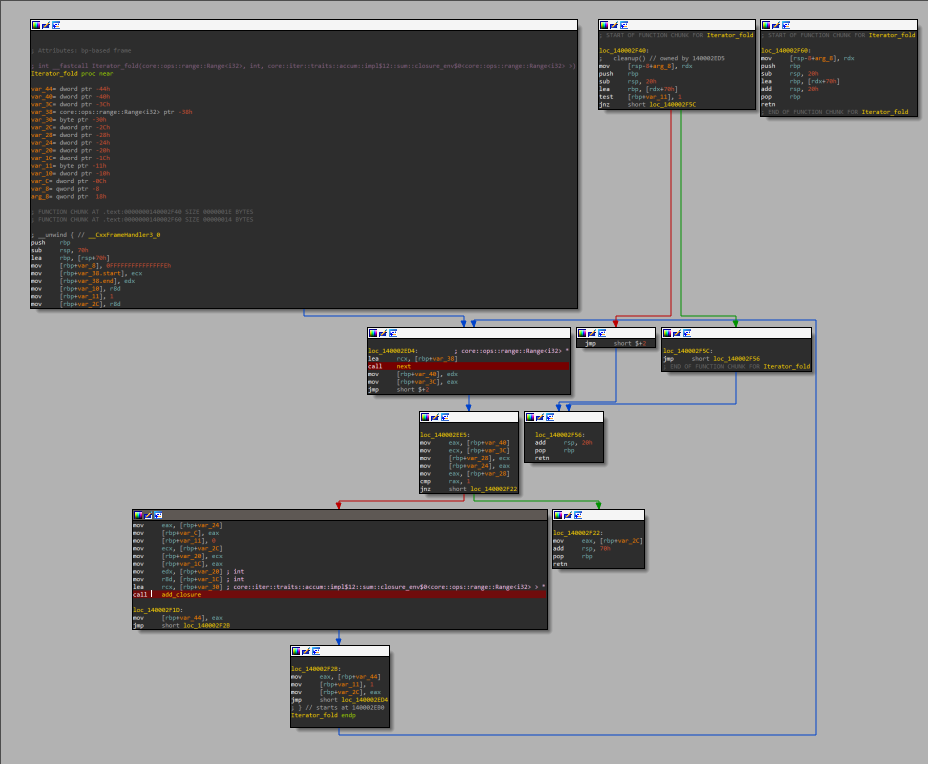

Sum::sum calls Iterator::fold:

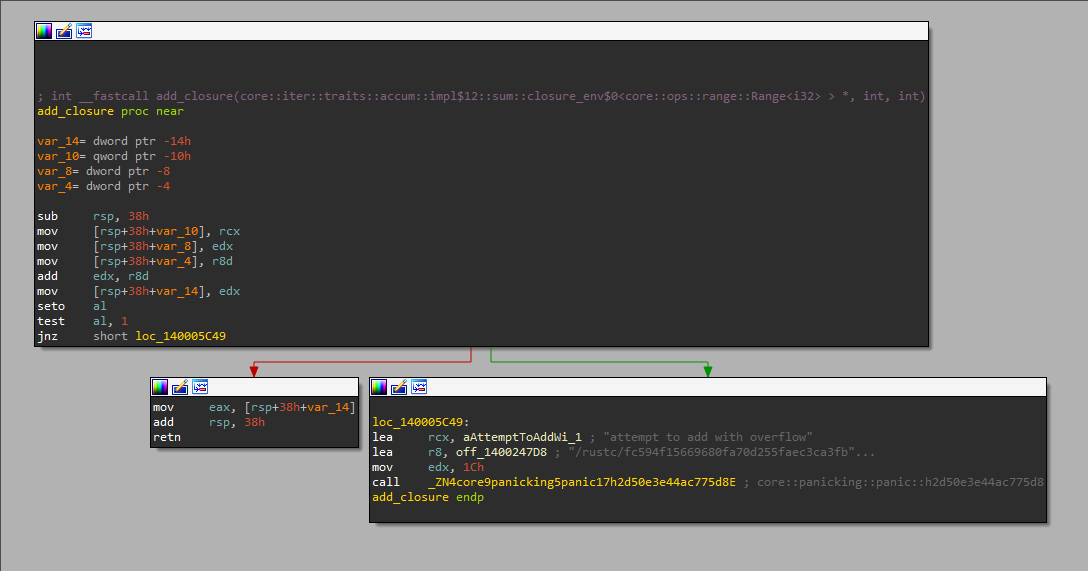

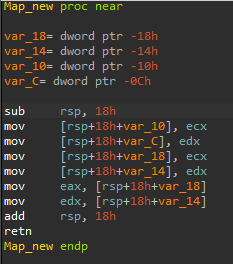

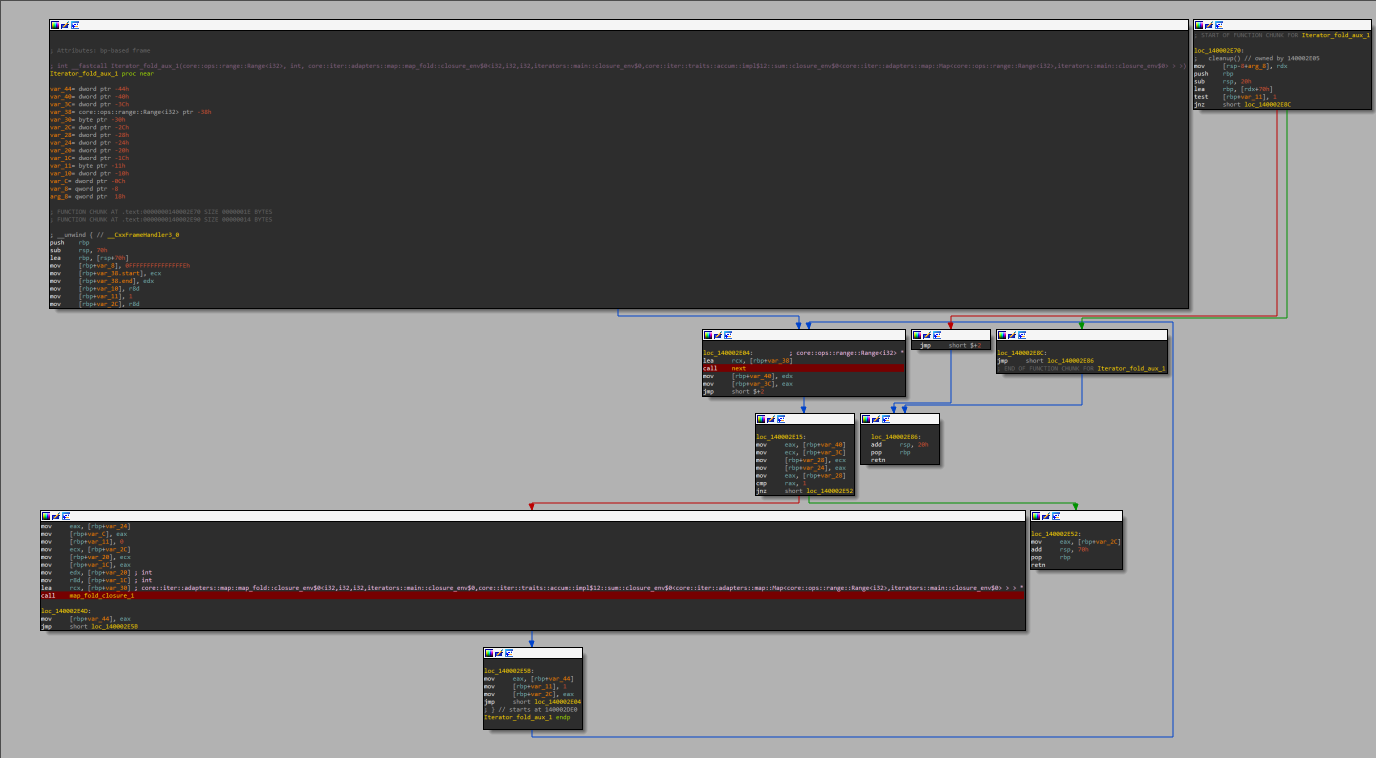

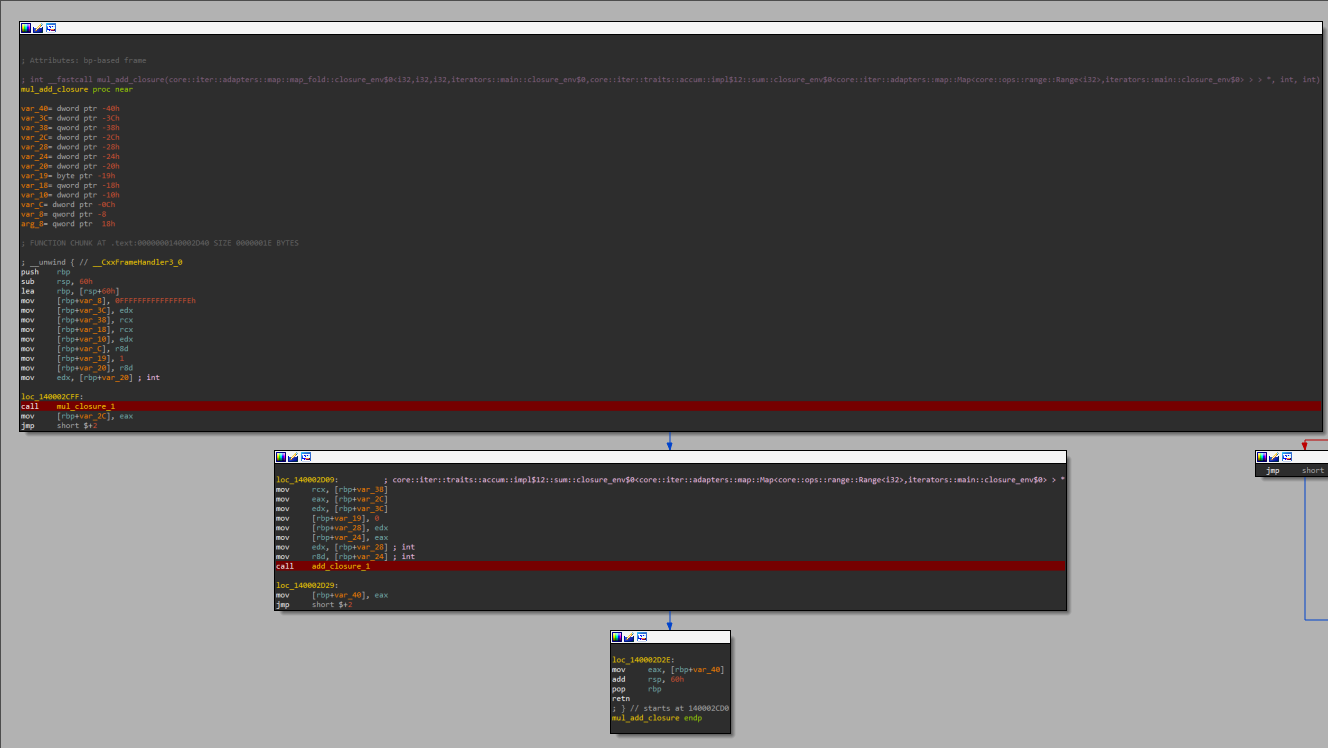

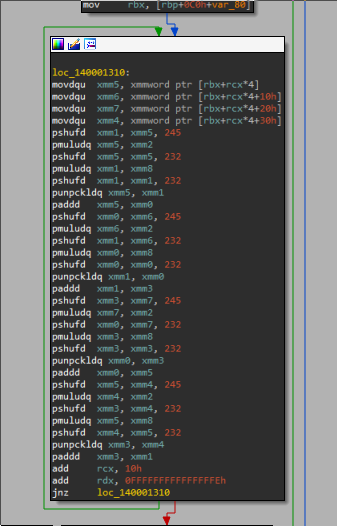

Iterator::fold loops with Iterator::next, calling an ad-hoc internally generated addition closure each time on the running sum and the next element (the calls to next and the closure are highlighted in red):

The addition closure |x,y| x+y performs its simple function, adding the given element to the running sum when it’s called:

Almost everything that makes iterator-generated assembly vexing is already visible in this example. Two points are of special interest.

First: the multiple layers that mostly function as glue, and most of which lack any actual functionality. Rust Debug build call graphs can be a needlessly exhausting lasagna; going through them, one must be in the correct mental state to successfully consume this lasagna. Function similarity engines can maybe help a bit, but no engine is going to give you the courage to take a deep breath and go “ok: So out of those 14 functions, this one doesn’t do anything, and that one just calls those other two, combining the return values…”

Second: it’s easy to miss in this simple example, but there is something weird going on here. The iterator consumer fold is not a fancy synonym for sum. It is a more general tool that can be called with any starting value and any closure, not just a starting value of 0 and that ad-hoc addition closure. Sure, you can sum iterator values with some_iterator.fold(0, |x,y| x+y) as happens above. But you can also compute a product instead by writing some_iterator.fold(1, |x,y| x*y).

This is why we carefully avoided saying “Sum::sum calls Iterator::fold”. Iterator::fold has a hole in it where a compiler will put a call to whatever closure it sees fit. The result is behavior similar in effect to monomorphization: Figuratively you can think of a fold<add>, a fold<multiply>, and a fold<take_your_pick> generated by the compiler.

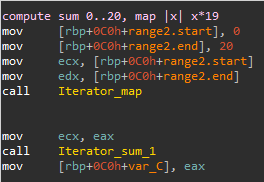

Let’s move on to the next iterator:

let x2 =

(0..20)

.map(|x| x*19)

.sum::<i32>();

This is not very different from the previous iterator, except for an addition call to map. map is an iterator adapter that transforms an iterator into another iterator by applying a function to each element of the original iterator. So this call to map results in a new iterator that yields (0*19, 1*19, 2*19, ... 19*19). The sum of all these numbers is now computed, like in the previous example.

Now, how do you imagine that is implemented in assembly? Let’s take a look:

Makes sense, right? map should return a Map type that implements Iterator<type=i32>, and under the hood this Map object would keep some machinery, like a pointer to that |x| x*19 closure or something… Let’s take a look at this call to map:

Ok, it’s just some glue that calls Map::new, which will surely contain the functionality we’re expecting. Another level down we go:

So now that’s sorted out, we can – wait, what? Where’s the Map object? Where’s the closure? Where’s anything at all? This function doesn’t do anything! It just moves its input into some local variables and back out! What is this cruel joke? At this point, years of conditioning tempt you to stare at the screen until the assembly begins to make sense (more on this in a moment), but actually the right play is to forget you saw anything and instead look at the version of the Iterator::sum function called for x2, which is not the same function as the Iterator::sum called for x1, but has identical structure. This function calls its own personal Sum::sum, which calls another intermediary glue function that doesn’t appear in x1’s version, which then finally calls its own personal Iterator::fold:

This is exactly the same picture as x1’s fold. Well, almost: next is the very same function (the same address), but the closure is different. The compiler internally calls it a “map-fold closure”, and it calls two sub-closures. The first performs the map and the other performs the fold. The two calls are highlighted in red.

The map closure multiplies elements by 19:

And the fold closure is functionally identical to the addition closure we saw earlier during the computation of x1:

We’ve seen this principle several times up to now, but here is where it well and truly smacks us in the face. Analyzing enough C or C++ binaries, you learn an unconscious habit of examining every API contact point and asking yourself, “does the ontology check out?”. A function returns the nth element of a linked list: It’s going to need n and the list. A function multiplies each element in a list by 19, then another function sums the result; the first function is going to need the original list, and the second function is going to need the list with its elements already multiplied. You get used to binary constructions that are self-contained, reusable, agnostic about what code will call them and what for, and have exactly as much hardcoded knowledge as they need to fulfill a contract. If the ontology doesn’t check out, it’s a top priority alarm bell. Stop, you’ve missed something big, you’ve misunderstood something fundamental. This alarm bell is an invaluable tool while performing binary analysis. Let’s call it the “principle of binary encapsulation”.

Instead what you get in Rust binaries is stuff like, for example, the Repeat object. This object is what the repeat function returns; you can write repeat(5) and you get a Repeat object which implements Iterator. Specifically, this iterator just yields Some(5), again and again, forever. Now you’re imagining an object containing some returning-subroutine, and the hard-coded value 5, but no, actually, the Repeat object in memory is just the number 5. That’s all. How can the number 5 return Some(5) repeatedly? It’s not a function. It doesn’t know about Option. It doesn’t have an API or a vtable. It’s a dumb number. But, you see, when it’s time to create an impl next for Repeat<i32>, the compiler just (figuratively) writes return Some(value_of_field). There, done.

Rust binaries don’t obey the principle of binary encapsulation. Whenever you find yourself asking “but how will object x know…”, be ready to blame the compiler. Maybe it just deals with the gap directly, like with repeat. Maybe it gives the object that knowledge by transmogrifying it, and to begin with you’re looking at a transmogrified object already. How can fold create a sum of mapped elements if given a sum of non-mapped elements? It doesn’t. map doesn’t map, fold maps in addition to folding. Any energy spent being upset about this is energy wasted

Equipped with this grim knowledge, we can finally look at something more like a real iterator expression:

let v3 : Vec<i32> =

iterate(0, |x| x+2)

.take_while(|&x| x<10)

.cycle()

.take(10)

.collect();

This says to start at 0, adding 2 every step; as long as the resulting value is smaller than 10; when the values are exhausted, go back to the beginning and start again; but do everything we just said only to obtain 10 elements, and no more; then finally put the elements that result in a Vec<i32>.

At the top level this translates to the following assembly:

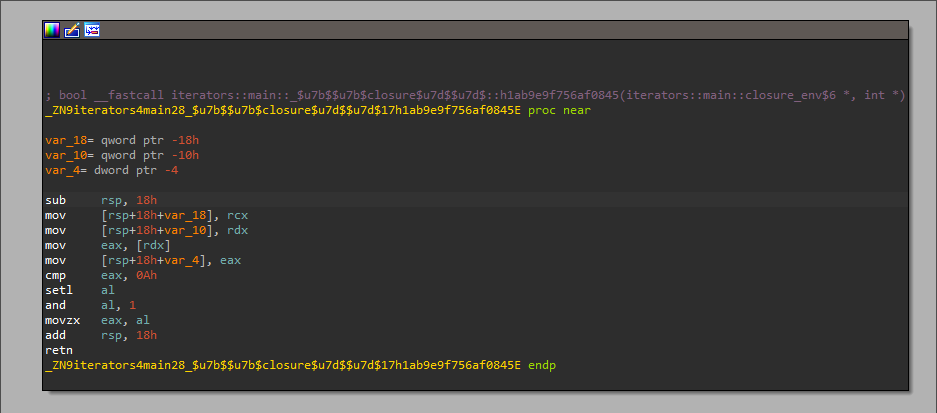

We doubt you’ll be well-served by 18 assembly screenshots of the entire call graph of each function invoked here, so we will shortly describe what happens. iterate and take_while are hollow shells with no actual functionality, akin to map in the example above. Finally, cycle is forced to perform an actual action: it must clone the iterator in order to create a functioning Cycle object (this object needs to produce the first element again once the iterator is exhausted; it does this by creating a backup copy of the pristine, untouched iterator on object creation, and each time its current iterator is exhausted; the rest of the magic happens in the call to the monomorphized impl next for Cycle<...>). Actual functionality can also be observed in the call to take where a proper Take object is constructed that has the input iterator as well as a field keeping track of the number of elements still left to exhaust before stopping iteration (initialized to 10, obviously). The actual logic for iterate and take_while is baked directly into the monomorphized, specialized, impl next for ThisVerySpecificIteratorExpression. The take_while condition can be seen below:

Found inside a next call, inside a next call, inside a next call, inside an internally generated closure, inside an auxiliary function, inside the call to collect. So, the “layers of objects that implement Iterator” vision does apply, but sometimes the compiler decides that it’s better to inline the logic somewhere.

v3 is the same type of creature as the “future ages, starting with 6 years in future” expression that we glimpsed earlier. One change of note is the cartesian_product which implements relatively complex logic; To explain it by example, the Cartesian product of the two iterators 0..10 and 20..30 is an iterator that yields every possible (x,y) pair where x is in 0..10 and y is in 20..30. Another difference is the sheer size of the lasagna: the call to far_enough_in_future lives down a rabbit hole fourteen (14) levels of call stack down from main. Truly, one of the most underrated skills of reverse engineering is the ability to first take a deep breath.

For those who want to dive into the resulting call tree (and see where the compiler moved in-line functionality), now is probably the time to revisit those impenetrable auto-generated function name symbols. For example, this:

enum2$<core::option::Option<ref$<advanced_type_system::person::_Person> > > __fastcall _$LT$std..collections..hash..map..Values$LT$K$C$V$GT$$u20$as$u20$core..iter..traits..iterator..Iterator$GT$::next::h846a4f1508fbb15b(std::collections::hash::map::Values<alloc::string::String,advanced_type_system::person::_Person> *)

Now we’re better-equipped to understand how the “official” function name grew to this size and complexity. There’s a lot of blame to go around — the use of full scope paths for everything surely doesn’t help, and the trait system means that at the top level the function is often of the form impl SomeTrait::somefunc for SomeType so that’s two opportunities for complexity already. But the true catalyst for the chain reaction is generics: SomeTrait is SomeTrait<S,T> so now S and T need to be named, and maybe in this case S is a Result<W,Z>, and possibly SomeType is an Fn(A) **->** R where A and R , in turn… etc, etc, etc…

Focus on the core questions: impl what? for what argument types? (and, ergo,) returning what? The answers to all three questions are buried in that blob above. By looking at the above example and below answers you should get a pretty good idea of where to look for the answers in other functions. In this case, the function is:

- impl

next - for

Values<String,_Person> - returning

Option<Person>

Another useful trove of knowledge when untangling these call trees is the official Rust documentation, which contains direct links to how Rust standard library functions are implemented in source. For example looking at the implementation of collect we can click the “source” link to go look at its source, where it turns out to be a wrapper for FromIterator::from_iter. The documentation of that method is here, and we find that one of its implementors is the HashMap type. We can then in turn go look at that implementation and see that it calls HashMap::with_hasher, Default::default and so on. If you can get your bearings and correctly identify a single standard library function, even just at the “impl what” level, the above process should theoretically net you the corresponding “impl what”s for every single function down the call tree from it. Of course, plenty of things in RE are possible in theory and insane to attempt in practice — this kind of reasoning should be applied in small doses when done manually. You probably don’t want to go down a call tree and manually label 19 functions just because you can.

Box and Dyn Trait

Now, having dealt with this unsettling sea of unfamiliar features, let’s cleanse our palate with something comforting and familiar. Like we mentioned before, Rust has many container types (such as Vec and HashMap). One of the more fundamental ones is Box. Usually introductory Rust material struggles to simply explain Box to people who spend most their lives repressing the separate existence of the Stack and the Heap, but happily for our current target audience we can just say that a Box<T> is a heap pointer to a T, and be done. In sample program 2, the entire song module exists to showcase the behavior of Box. For example, inside impl Song for _Song, one can find the following function:

fn title(&self) -> Box<String> {

Box::new(String::from(self.title))

}

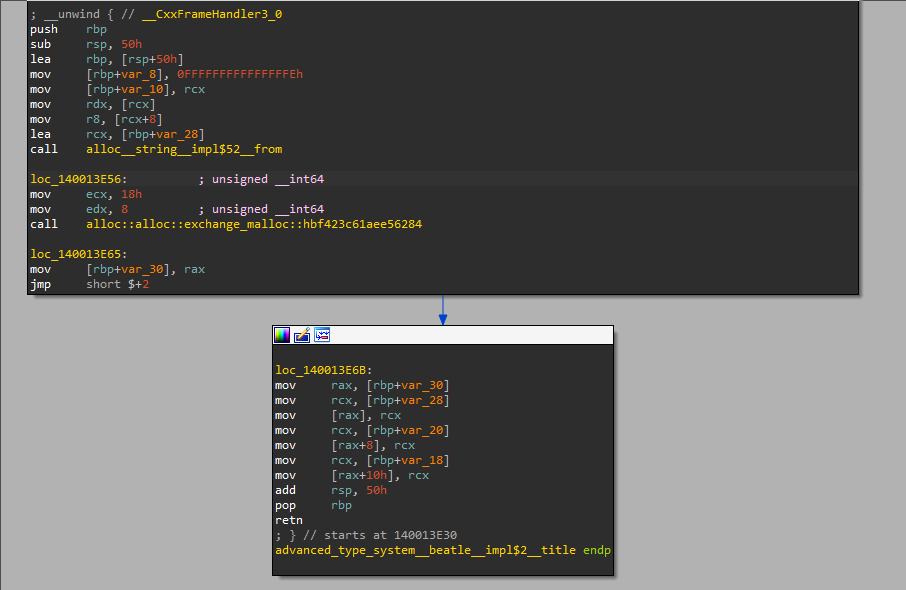

Which compiles to the following assembly:

Memory is allocated on the heap for the String object. The data of the object is moved to that memory. The pointer to the memory is then returned. This wouldn’t look out of place in some run-of-the-mill C malware.

A more complex, but also very familiar, construct is Box<dyn Trait>. This signifies what Rust calls a ‘trait object’ and is the closest thing you’re going to see in a Rust program to a C++ class doing its usual C++ class thing. This can be seen in the function implied_favorite_song, which has the following signature:

pub fn implied_favorite_song(beatle: Option<Beatle>) -> Result<Box<dyn Song>,Error>

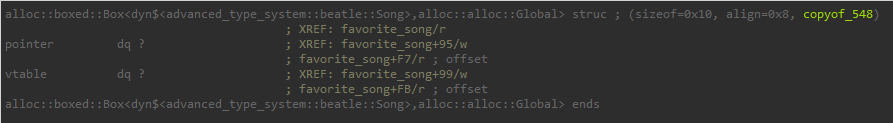

The function body doesn’t contain anything new or exciting; we could have written impl Song instead of Box<dyn Song> in the signature and get the same behavior we’ve discussed earlier with impl Trait. In-memory the Box<dyn Song> has the following structure:

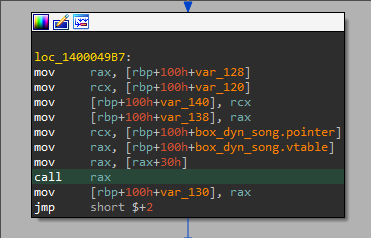

Where pointer points to the underlying _Song returned by implied_favorite_song. Here’s what happens when one of the object’s methods is invoked inside the function favorite_song:

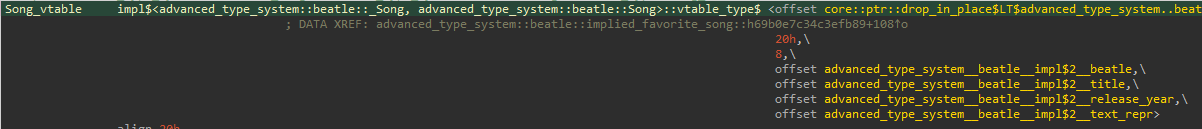

And the vtable:

What a sight for sore eyes!

Rayon and Parallelism

This is more of a footnote than anything else. One of Rust’s selling points is its support for threaded programming with safety guarantees, and one of the most intuitive ways to make use of this feature is via the rayon library. This library allows taking any iterator expression and changing iter to par_iter, or into_iter to into_par_iter, etc, transforming the serial computation into a parallel computation. To make this work, Rayon implements its own shadow ecosystem of iterator adapters: you might call

some_vector .into_par_iter() .map(...)

and not think much of it, but now the map you’re calling is a separate implementation of map maintained by Rayon devs. To showcase this, sample program 2 invokes Rayon when construction the HashMap<String,_Person>:

let people =

[

(&"Alice", &_const::age::ALICE, Some(&Beatle::George)),

(&"Bob", &_const::age::BOB, Some(&Beatle::Ringo)),

(&"Carol", &_const::age::CAROL, Some(&Beatle::Paul)),

(&"David", &_const::age::DAVID, None::<&Beatle>)

]

.into_par_iter()

.map(|t|

person::new(t.0, t.1, t.2)

.map(|p| (p.name(), p))

)

.collect::<Result<HashMap<_,_>,Error>>()?;

The actual parallelized implementation appears inside the function from_par_iter (documentation here, specifically of HashMap implementation here, source here). While Rayon is not a part of the standard library, it is a fairly popular library for using one of Rust’s core features, and is worth it to at least recognize if you come across it. There’s a tell-tale call creating a mutex at the start of from_par_iter:

A very slight taste of Release Builds

As emphasized during the introduction, this document focuses on the way rustc behaves when generating Debug builds, which is what happens by default when one simply invokes cargo build on a project. In this section we dip the soles of our feet into some of the implications for invoking cargo build --release.

First of all, the location of the main function inside the basic block that calls the language runtime seems to have moved slightly.

Other than that, the good news is that the lasagna is mostly squashed together into one discernible layer again. For instance, the iterator expression showing everyone’s favorite song is converted into the following assembly: