Artificial intelligence is rapidly reshaping the cyber security landscape—but how exactly is it being used, and what risks does it introduce? At Check Point Research, we set out to evaluate the current AI security environment by examining real-world threats, analyzing how researchers and attackers are leveraging AI, and assessing how today’s security tools are evolving with these technologies.

Our investigation included a deep dive into the dark web, insights drawn from Check Point’s GenAI Protect platform, a review of how our own researchers integrate AI into their workflows, and collaboration with key experts across the organization.

The result? A comprehensive look at where AI has been, where it stands today, and where it’s heading in the world of cyber security.

Here’s what we found.

Read the Check Point Research AI Security Report 2025

AI Threats

1. AI is Eroding the Foundations of Digital Identity

AI-driven advancements have significantly altered the threat landscape of social engineering, already one of the most effective techniques for breaching security perimeters. Large language models (LLMs) and generative AI enable real-time, unscripted interaction via text and audio, while synthetic video capabilities are advancing rapidly.

These technologies dramatically increase the scalability and plausibility of impersonation attacks. Deepfake audio and video, once resource-intensive, are becoming commoditized—reducing the barrier to entry for threat actors and enabling more targeted, personalized campaigns.

As generative media improves in fidelity and accessibility, traditional identity verification mechanisms, such as voice recognition, facial analysis, and behavioral biometrics, are becoming increasingly unreliable. In this new paradigm, where synthetic identities can be generated on demand, digital trust frameworks can no longer be taken at face value and must be fortified to withstand AI-enabled deception.

Account verification and unlocking service advertisement
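One way to see why deepfakes undermine voice- and face-based checks is to contrast them with a factor that synthetic media cannot imitate: a code derived from a shared secret. The sketch below is a minimal RFC 6238 time-based one-time password (TOTP) check, offered only as an illustration under the assumption that the secret was enrolled out of band. It is not a recommendation taken from the report, and it does not stop a victim who is talked into reading the code aloud to an attacker.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password using HMAC-SHA1."""
    now = time.time() if at is None else at
    counter = int(now // step)  # number of elapsed time steps
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Accept codes from the current step and `window` steps on either side."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step, digits), submitted)
        for drift in range(-window, window + 1)
    )


# Usage with the RFC 6238 test secret at a fixed timestamp.
SECRET = b"12345678901234567890"
print(totp(SECRET, at=59, digits=8))  # prints 94287082 (RFC 6238 test vector)
```

Accepting one adjacent time step on either side (`window=1`) is a common tolerance for clock drift; widening it makes intercepted codes easier to replay.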

2. LLM Poisoning: A New Front in AI Exploitation

LLM poisoning—manipulating training or inference data to embed malicious outputs—has emerged as a growing security concern. While large providers like OpenAI and Google implement strict data validation, attackers have found success targeting open-source platforms. In one case, over 100 compromised models were uploaded to Hugging Face, mimicking a software supply chain attack to spread harmful code or misinformation.
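Because poisoned checkpoints on open model hubs behave like a software supply chain attack, the usual supply chain controls apply. As a minimal sketch, assuming a team pins the SHA-256 digest of each approved model file and prefers non-pickle formats such as safetensors (pickle-based checkpoints can execute code when loaded), a loader could refuse anything that does not match. The suffix list and function names are hypothetical, and a matching digest only proves the file is the one that was approved, not that the approved model is clean.

```python
import hashlib
import hmac
from pathlib import Path

# Assumed policy: pickle-based checkpoint formats can run code on load, so refuse them by default.
PICKLE_SUFFIXES = {".pkl", ".pickle", ".pt", ".pth", ".bin"}


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-gigabyte checkpoints never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str, allow_pickle: bool = False) -> None:
    """Raise ValueError unless the file has an allowed format and matches its pinned digest."""
    if path.suffix.lower() in PICKLE_SUFFIXES and not allow_pickle:
        raise ValueError(f"{path.name}: pickle-based format refused")
    actual = sha256_file(path)
    if not hmac.compare_digest(actual, pinned_sha256.lower()):
        raise ValueError(f"{path.name}: digest {actual} does not match pinned value")
```

A loader would call `verify_artifact` on every downloaded file before handing it to the ML framework.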

Beyond training-time poisoning, modern LLMs introduce new risks through retrieval poisoning: the strategic placement of malicious content online, intended to be ingested by models during inference. A notable example involves the Russia-affiliated disinformation network “Pravda,” which generated 3.6 million propaganda articles in 2024. Researchers found that leading chatbots echoed Pravda narratives in 33% of responses, underscoring how adversaries are already manipulating AI systems at scale.
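Retrieval poisoning targets what a model reads at inference time, so one partial mitigation is to filter retrieved passages by source before they reach the prompt. The sketch assumes a retrieval-augmented pipeline that returns passages with source URLs and a hypothetical denylist of domains tied to a known influence network; subdomains of a listed domain are dropped as well. A denylist only catches sources that are already known, so it would complement, not replace, provenance and reputation scoring.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class Passage:
    url: str
    text: str


def host_matches(host: str, domain: str) -> bool:
    """True for the domain itself or any subdomain of it."""
    return host == domain or host.endswith("." + domain)


def filter_passages(passages: list[Passage], denylist: set[str]) -> tuple[list[Passage], list[Passage]]:
    """Split retrieved passages into (kept, dropped) before they enter the LLM context."""
    blocked = {d.lower().rstrip(".") for d in denylist}
    kept, dropped = [], []
    for p in passages:
        host = (urlsplit(p.url).hostname or "").rstrip(".")  # hostname is already lowercased
        # Passages without a parseable host have no provenance, so drop them too.
        if not host or any(host_matches(host, d) for d in blocked):
            dropped.append(p)
        else:
            kept.append(p)
    return kept, dropped
```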

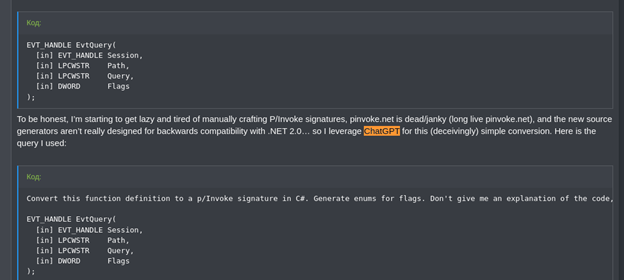

Forum user deceiving ChatGPT to enhance malicious code

3. AI-Created Malware

Cyber criminals are leveraging AI to streamline and enhance multiple stages of the malware kill chain. From generating ransomware scripts and phishing kits to building infostealers and deepfakes, AI lowers the barrier to entry and enables rapid scaling of attacks, even by low-skilled actors.

One recent dark web example showed an attacker using ChatGPT to refine code for extracting credentials directly from Windows event logs, demonstrating how AI is already optimizing malicious tooling in the wild.

4. Malware Data Mining

While AI-built malware is still maturing, infostealers are already using AI to mine and manage stolen data at scale. Malware like LummaC2 integrates AI-based bot detection to filter out security analysis environments, while dark web vendors such as Gabbers Shop claim to use AI to clean and organize large credential datasets, enhancing data quality for resale.

Advertisement of the “Gabbers Shop” on a dark web forum

In one example, a threat actor leveraged DarkGPT, a malicious LLM modeled after ChatGPT, to analyze infostealer logs via natural language queries. This enables rapid extraction of sensitive and valuable data such as credentials, domain names, API keys, and session tokens. By automating post-exfiltration analysis, attackers can quickly identify high-value targets for credential stuffing, account takeover (ATO), financial fraud, or enterprise intrusion, accelerating the path to ransomware deployment.

AI for Research

AI-Driven Research: Accelerating Threat Hunting and Vulnerability Discovery

AI is increasingly being used to enhance cyber security research by improving detection, simplifying complex systems, and accelerating threat analysis. In particular, LLMs and agent-based frameworks can automate data collection, testing, and preliminary analysis—freeing human analysts to focus on higher-order tasks like adversary profiling and strategic decision-making.

These capabilities are already proving valuable in advanced threat hunting, malware analysis, and vulnerability research, where current tools enable faster, more scalable workflows that are shaping the future of cyber security operations.

LLMs and APT Hunting: Automating Tradecraft Detection at Scale

LLMs can be integrated into big data pipelines to detect advanced threat actor tradecraft across massive datasets. Analyzing language patterns and deception techniques enables scalable detection of APT behavior. Key use cases include:

- Impersonation & Thematic Deception: Flagging domains that mimic trusted institutions (e.g., mofa-gov-np.fia-gov[.]net, militarytc[.]com).

- Malicious File Naming: Identifying deceptive filenames in malware repositories (e.g., tax_return_2025.pdf.exe).

- Deception Scoring: Predicting whether a non-technical user would trust a domain or file, and assigning a linguistically based deception score.

- Geo-Targeting Analysis: Detecting regional influence operations, such as Cyrillic domains impersonating NATO organizations.

These AI-driven methods enhance threat hunting by surfacing subtle indicators of targeted campaigns that might otherwise go unnoticed.
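The report describes LLMs making these judgments. A cheap rule-based prefilter can decide which candidates are worth sending to an LLM at all, so the sketch below swaps in simple heuristics rather than a language model and scores the example indicators from the list above. Every term list, weight, and the tiny Cyrillic confusables table are hypothetical. Substring matching on terms like "mil" will also flag benign domains, which is why the output is a score rather than a verdict.

```python
import unicodedata

EXECUTABLE_EXTS = {"exe", "scr", "bat", "cmd", "js", "vbs", "hta", "lnk", "ps1"}
DECOY_EXTS = {"pdf", "doc", "docx", "xls", "xlsx", "jpg", "png", "txt"}
LURE_TERMS = ("invoice", "tax", "salary", "payment", "urgent")
INSTITUTION_TERMS = ("gov", "mil", "mofa", "ministry", "nato")
OFFICIAL_SUFFIXES = {"gov", "mil", "int"}
# A few Cyrillic letters that render like Latin ones (a tiny subset of Unicode confusables).
CONFUSABLES = str.maketrans({"\u0430": "a", "\u0435": "e", "\u043e": "o", "\u0440": "p",
                             "\u0441": "c", "\u0445": "x", "\u0456": "i"})


def refang(indicator: str) -> str:
    return indicator.replace("[.]", ".")


def score_filename(name: str) -> tuple[int, list[str]]:
    parts = name.lower().split(".")
    score, reasons = 0, []
    if len(parts) >= 2 and parts[-1] in EXECUTABLE_EXTS:
        score += 1
        reasons.append("executable extension")
        if len(parts) >= 3 and parts[-2] in DECOY_EXTS:
            score += 3  # Windows hides known extensions, so only ".pdf" may be visible
            reasons.append("document extension placed before the real one")
    if any(term in parts[0] for term in LURE_TERMS):
        score += 1
        reasons.append("lure wording")
    return score, reasons


def to_unicode(domain: str) -> str:
    try:
        return domain.encode("ascii").decode("idna")  # decodes punycode (xn--) labels
    except UnicodeError:
        return domain  # already Unicode, or not a valid IDN


def score_domain(domain: str) -> tuple[int, list[str]]:
    d = to_unicode(refang(domain).strip().rstrip(".")).lower()
    labels = d.split(".")
    score, reasons = 0, []
    names = [unicodedata.name(ch, "") for ch in d if ch.isalpha()]
    has_cyrillic = any(n.startswith("CYRILLIC") for n in names)
    has_latin = any(n.startswith("LATIN") for n in names)
    if has_cyrillic:
        score += 2
        reasons.append("Cyrillic characters")
        if has_latin:
            score += 2
            reasons.append("mixed Latin and Cyrillic scripts")
    official = labels[-1] in OFFICIAL_SUFFIXES or (len(labels) >= 2 and labels[-2] in OFFICIAL_SUFFIXES)
    skeleton = d.translate(CONFUSABLES)  # map lookalike letters before matching terms
    if not official and any(term in skeleton for term in INSTITUTION_TERMS):
        score += 3
        reasons.append("institutional term outside an official suffix")
    return score, reasons
```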

Automating TTP and IOC Extraction with AI

Manually extracting TTPs and IOCs from threat intelligence reports is labor-intensive and slows response times. LLMs can automate this process by parsing reports, identifying attack patterns, mapping them to frameworks like MITRE ATT&CK, and generating structured hunting rules.
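Before a report is handed to an LLM, a deterministic pass can pull out the atomic IOCs and any ATT&CK technique IDs already written in the text; anything the LLM later returns can then be cross-checked against this set. The sketch below is a regex baseline, not an LLM, and its patterns are deliberately simple assumptions (the domain pattern, for example, also matches file names such as `invoice.pdf.exe`).

```python
import re

TECHNIQUE_RE = re.compile(r"\bT\d{4}(?:\.\d{3})?\b")  # e.g. T1566 or T1566.001
SHA256_RE = re.compile(r"\b[a-fA-F0-9]{64}\b")
IPV4_RE = re.compile(r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b")
# Deliberately loose: it will also match file names such as "invoice.pdf.exe".
DOMAIN_RE = re.compile(r"\b(?:[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,63}\b", re.IGNORECASE)


def refang(text: str) -> str:
    """Undo common defanging such as example[.]com and hxxp://."""
    return text.replace("[.]", ".").replace("(.)", ".").replace("hxxp", "http")


def extract_iocs(report: str) -> dict[str, list[str]]:
    text = refang(report)
    return {
        "techniques": sorted(set(TECHNIQUE_RE.findall(text))),
        "sha256": sorted({h.lower() for h in SHA256_RE.findall(text)}),
        "ipv4": sorted(set(IPV4_RE.findall(text))),
        "domains": sorted({d.lower() for d in DOMAIN_RE.findall(text)}),
    }
```

Mapping free-text behavior descriptions to techniques, the part that genuinely needs an LLM, is not covered by this baseline.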

This automation accelerates the conversion of raw intelligence into actionable detections—improving analyst efficiency and reducing time-to-detection for APT campaigns and malware variants.
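LLM output should be validated before it becomes a detection, because a model can invent indicators that never appeared in the report. The sketch assumes a hypothetical JSON shape returned by the model, keeps only well-formed technique IDs and only IOCs that literally occur in the refanged report, and then emits a Sigma-inspired dictionary. The field names `DestinationIp` and `QueryName` follow common Sysmon-style naming, but the output is an illustration, not a validated Sigma rule.

```python
import ipaddress
import json
import re

TECHNIQUE_ID = re.compile(r"T\d{4}(?:\.\d{3})?")


def appears(ioc: str, text: str) -> bool:
    """True if the IOC occurs in the text as a whole token (case-insensitive)."""
    return re.search(rf"(?<![\w-]){re.escape(ioc)}(?![\w-])", text, re.IGNORECASE) is not None


def is_ipv4(value: object) -> bool:
    try:
        ipaddress.IPv4Address(value)
        return isinstance(value, str)
    except ValueError:
        return False


def validate_extraction(raw_json: str, report: str) -> dict[str, list[str]]:
    """Keep only well-formed technique IDs and IOCs grounded in the report text."""
    data = json.loads(raw_json)
    text = report.replace("[.]", ".")
    iocs = data.get("iocs", {})
    techniques = {str(t.get("id", "")) for t in data.get("techniques", []) if isinstance(t, dict)}
    ips = {ip for ip in iocs.get("ipv4", []) if is_ipv4(ip) and appears(ip, text)}
    domains = {d.lower() for d in iocs.get("domains", []) if isinstance(d, str) and appears(d, text)}
    return {
        "techniques": sorted(t for t in techniques if TECHNIQUE_ID.fullmatch(t)),
        "ipv4": sorted(ips),
        "domains": sorted(domains),
    }


def to_hunting_rule(title: str, validated: dict[str, list[str]]) -> dict:
    """Sigma-inspired structure; field names are illustrative, not a validated Sigma rule."""
    return {
        "title": title,
        "tags": [f"attack.{t.lower()}" for t in validated["techniques"]],
        "detection": {
            "selection_ip": {"DestinationIp": validated["ipv4"]},
            "selection_domain": {"QueryName": validated["domains"]},
            "condition": "1 of selection_*",
        },
    }
```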

AI-Driven Malware Analysis

Automating malware analysis, once a distant goal, is now a reality. In 2024, researchers successfully used LLMs to decompile malware code, with impressive results: LLMs were able to identify malicious behavior even when traditional detection rates were low.

This development has significant implications for APT threat hunting. With platforms like VirusTotal already leveraging AI for file analysis, AI-driven malware detection is poised to become a core component of future APT hunting workflows.
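In practice, decompiled output rarely fits into a single prompt, so an LLM-assisted workflow has to triage and batch it. The sketch below assumes the decompiler has already exported one pseudo-C string per function. It ranks functions by how many suspicious Windows API names they reference (the API list is illustrative, not a detection rule), packs them into batches under a character budget as a rough stand-in for a token limit, and builds one prompt per batch. No particular model or VirusTotal feature is implied.

```python
SUSPICIOUS_APIS = ("VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread",
                   "CryptEncrypt", "InternetOpenUrlA", "RegSetValueExA")


def suspicion(code: str) -> int:
    """Count references to APIs commonly seen in injection, encryption, or network code."""
    return sum(code.count(api) for api in SUSPICIOUS_APIS)


def pack_batches(functions: dict[str, str], max_chars: int = 12_000) -> list[list[str]]:
    """Group function names into batches whose combined code stays under max_chars.

    The most suspicious functions come first so they are analyzed before any budget runs out.
    A single function larger than max_chars gets a batch of its own (a real pipeline would split it).
    """
    order = sorted(functions, key=lambda name: (-suspicion(functions[name]), name))
    batches: list[list[str]] = []
    current: list[str] = []
    size = 0
    for name in order:
        length = len(functions[name])
        if current and size + length > max_chars:
            batches.append(current)
            current, size = [], 0
        current.append(name)
        size += length
    if current:
        batches.append(current)
    return batches


def build_prompt(functions: dict[str, str], batch: list[str]) -> str:
    header = "For each decompiled function, summarize its behavior and flag anything malicious.\n\n"
    return header + "\n\n".join(f"// {name}\n{functions[name]}" for name in batch)
```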

AI in Vulnerability Research and Testing Automation

Vulnerability research involves gathering information about platforms, software, or devices, and often requires repetitive tasks. AI can streamline these tasks, freeing researchers to focus on higher-level analysis. Emerging frameworks such as CrewAI and AutoGen let LLMs interact with systems and assist in research by enabling them to call external tools and hold complex, multi-step conversations.
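Frameworks such as CrewAI and AutoGen have their own tool abstractions; the sketch below uses neither library and only illustrates the underlying pattern. The model is assumed to reply with a JSON object naming a tool and its arguments, and the dispatcher runs only allowlisted tools, feeding each result back into the transcript until the model answers with `FINAL:`. The tool names, the reply format, and the step limit are hypothetical.

```python
import json
from typing import Callable

# Hypothetical allowlisted tools; a research agent would wrap disassemblers, scanners, etc.
TOOLS: dict[str, Callable[..., str]] = {
    "hex_to_text": lambda data: bytes.fromhex(data).decode("latin-1"),
    "word_count": lambda text: str(len(text.split())),
}


def dispatch(model_reply: str) -> str:
    """Execute one tool call requested by the model, refusing anything not allowlisted."""
    try:
        call = json.loads(model_reply)
    except json.JSONDecodeError:
        return "error: reply is not JSON"
    if not isinstance(call, dict):
        return "error: reply must be a JSON object"
    name, args = call.get("tool"), call.get("args", {})
    if not isinstance(name, str) or name not in TOOLS:
        return f"error: tool {name!r} is not allowlisted"
    if not isinstance(args, dict):
        return "error: args must be a JSON object"
    try:
        return TOOLS[name](**args)
    except (TypeError, ValueError) as exc:  # wrong arguments or malformed input
        return f"error: {exc}"


def run_agent(model: Callable[[str], str], task: str, max_steps: int = 5) -> str:
    """Loop: the model either requests a tool (JSON) or answers with 'FINAL: ...'."""
    transcript = [f"TASK: {task}"]
    for _ in range(max_steps):
        reply = model("\n".join(transcript))
        if reply.startswith("FINAL:"):
            return reply[len("FINAL:"):].strip()
        transcript.append(f"CALL: {reply}")
        transcript.append(f"RESULT: {dispatch(reply)}")
    return "stopped: step limit reached"
```

The allowlist is the important design choice: an agent that can run arbitrary commands turns a prompt injection hidden in the analyzed data into code execution on the research machine.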

Additionally, open-source LLMs such as DeepSeek enable the development of specialized models for tasks such as reverse engineering, vulnerability discovery, and exploit development.

Agent-Based Workflows for Vulnerability Discovery

Combining Static and Dynamic Application Security Testing (SAST and DAST) with LLMs can automate workflows to discover vulnerabilities. For instance, an LLM can assist in firmware analysis, reverse engineering, fuzzing, and performance testing, accelerating what would traditionally be a labor-intensive process. While the technology is available, integrating it into a cohesive, secure workflow remains the biggest challenge.
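Of the tasks listed, fuzzing is the easiest to show end to end. In an LLM-assisted setup, the model would typically propose seed inputs or write the harness; the sketch below shows only the classic blind mutation loop that those seeds would feed, run against a hypothetical firmware-header parser with a planted length-check bug. Production fuzzers such as AFL++ or libFuzzer add coverage feedback, which this sketch omits.

```python
import random
from typing import Callable


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Apply one random bit flip, byte insertion, or byte deletion."""
    buf = bytearray(data)
    op = rng.randrange(3)
    if op == 0 and buf:
        i = rng.randrange(len(buf))
        buf[i] ^= 1 << rng.randrange(8)
    elif op == 1:
        buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))
    elif buf:
        del buf[rng.randrange(len(buf))]
    return bytes(buf)


def fuzz(target: Callable[[bytes], object], seeds: list[bytes],
         iterations: int = 1000, seed: int = 0) -> list[tuple[bytes, str]]:
    """Blind mutation fuzzing; returns the first input seen for each exception type."""
    if not seeds:
        raise ValueError("need at least one seed input")
    rng = random.Random(seed)  # fixed seed makes runs reproducible
    crashes: list[tuple[bytes, str]] = []
    seen: set[str] = set()

    def run(data: bytes) -> None:
        try:
            target(data)
        except Exception as exc:  # any uncaught exception counts as a crash
            kind = type(exc).__name__
            if kind not in seen:
                seen.add(kind)
                crashes.append((data, kind))

    for s in seeds:
        run(s)
    for _ in range(iterations):
        run(mutate(rng.choice(seeds), rng))
    return crashes


def parse_firmware_header(data: bytes) -> int:
    """Hypothetical parser with a planted bug: it trusts the length byte."""
    if len(data) < 3 or data[:2] != b"FW":
        return -1
    length = data[2]
    payload = data[3:3 + length]
    return payload[length - 1]  # IndexError if length is 0 or exceeds the payload
```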

AI in Logic Flaw Detection and Complex Protocol Analysis

As LLM reasoning capabilities improve, AI is expected to increasingly detect security logic flaws in code bases, a task that has previously been difficult to automate. AI can also help analyze complex protocols, such as those used in 5G communication networks, by rapidly processing vast amounts of data and enabling more efficient testing frameworks and simulations.
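Protocol logic flaws are often sequences of individually valid messages that arrive in the wrong order. A minimal model-based testing sketch, assuming a toy registration protocol (the states and messages are hypothetical, loosely inspired by mobile network registration, and not taken from any 3GPP specification), enumerates every message sequence up to a length bound and reports where an implementation disagrees with the specification's state machine. In an AI-assisted workflow, an LLM might draft the state model from specification text; the exhaustive check itself needs no model.

```python
from itertools import product
from typing import Callable, Sequence

MESSAGES = ("REGISTER_REQ", "AUTH_OK", "SERVICE_REQ", "DEREGISTER")

# Specification: (state, message) -> next state; any pair not listed must be rejected.
SPEC = {
    ("IDLE", "REGISTER_REQ"): "AUTHENTICATING",
    ("AUTHENTICATING", "AUTH_OK"): "REGISTERED",
    ("REGISTERED", "SERVICE_REQ"): "REGISTERED",
    ("REGISTERED", "DEREGISTER"): "IDLE",
}


def spec_accepts(seq: Sequence[str]) -> bool:
    state = "IDLE"
    for msg in seq:
        state = SPEC.get((state, msg))
        if state is None:
            return False
    return True


def buggy_impl_accepts(seq: Sequence[str]) -> bool:
    """Hypothetical implementation with a planted logic flaw."""
    state = "IDLE"
    for msg in seq:
        if msg == "SERVICE_REQ" and state in ("AUTHENTICATING", "REGISTERED"):
            continue  # bug: serves requests before authentication completes
        nxt = SPEC.get((state, msg))
        if nxt is None:
            return False
        state = nxt
    return True


def find_divergences(impl: Callable[[Sequence[str]], bool], max_len: int = 3) -> list[tuple[str, ...]]:
    """Every sequence up to max_len where the implementation and specification disagree."""
    return [
        seq
        for n in range(1, max_len + 1)
        for seq in product(MESSAGES, repeat=n)
        if impl(seq) != spec_accepts(seq)
    ]
```

Exhaustive enumeration grows as the number of message types raised to the sequence length, which is why realistic protocol testing relies on guided exploration rather than brute force.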

AI in the Enterprise

AI Integration and Increased Organizational Risk

As AI technologies become more integrated into corporate environments, organizations face heightened risks. Many interactions, whether direct (e.g., Generative AI) or indirect (e.g., grammar tools, translation assistants, or customer support bots), can inadvertently lead to the sharing of sensitive information. This includes internal communications, strategic plans, financial data, customer information, and intellectual property.

Check Point’s GenAI Protect data shows that AI services are used in at least 51% of enterprise networks each month, both through direct interaction with chatbots like ChatGPT and indirectly via AI-powered tools such as translation services. Additionally, 1 in 80 prompts (1.25%) sent from enterprise devices to GenAI services posed a high risk of sensitive data leakage, while 7.5% of prompts (roughly 1 in 13) contained potentially sensitive information.
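The report does not describe how GenAI Protect classifies prompts. As a generic illustration only, a data loss prevention check might sort prompts into tiers that mirror the two categories above: "high risk" for secrets such as cloud access keys, private keys, or Luhn-valid card numbers, and "potentially sensitive" for weaker signals such as email addresses or confidentiality markers. All patterns and tier assignments are assumptions, and pattern matching alone misses most sensitive prose, such as strategic plans.

```python
import re

HIGH_RISK = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH |ENCRYPTED )?PRIVATE KEY-----"),
}
SENSITIVE = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "confidential_marker": re.compile(r"\b(?:confidential|internal only)\b", re.IGNORECASE),
}
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")  # 13 to 19 digits, optional separators


def luhn_ok(candidate: str) -> bool:
    """Luhn checksum, used to discard random digit runs that are not card numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify_prompt(prompt: str) -> tuple[str, list[str]]:
    """Return ("high_risk" | "potentially_sensitive" | "clean", matched signal names)."""
    hits = [name for name, rx in HIGH_RISK.items() if rx.search(prompt)]
    if any(luhn_ok(m.group()) for m in CARD_CANDIDATE.finditer(prompt)):
        hits.append("payment_card")
    if hits:
        return "high_risk", hits
    hits = [name for name, rx in SENSITIVE.items() if rx.search(prompt)]
    return ("potentially_sensitive", hits) if hits else ("clean", [])
```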

Popularity of AI Services in Enterprises

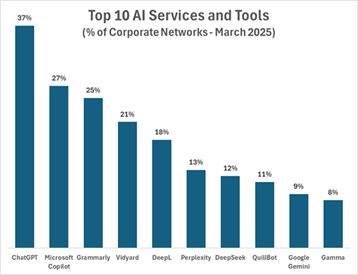

Analysis of enterprise environments shows that OpenAI’s ChatGPT is the most widely used AI service, appearing in 37% of networks, followed by Microsoft’s Copilot at 27%. Other popular services include writing and translation assistants such as Grammarly (25%), DeepL (18%), and QuillBot (11%). Video and presentation tools such as Vidyard (21%) and Gamma (8%) have also seen significant adoption.

The most popular Generative AI services in enterprises – March 2025

The Dual-Edged Sword of AI in Cyber Security

As AI technologies become increasingly integrated into both cyber security defense and cyber attack strategies, organizations are confronted with a rapidly changing landscape. On one hand, the rise of AI brings new and significant risks. AI-driven malware creation and data mining are already a reality, with cyber criminals leveraging AI to optimize their attacks and scale their operations. LLM poisoning lets adversaries manipulate AI models to spread malicious code or misinformation, and AI-generated deepfakes are increasingly being used for social engineering and identity fraud.

On the other hand, AI is transforming threat detection, vulnerability research, and incident response by automating tasks, enhancing research efficiency, and enabling more accurate identification of malicious activities. From AI-assisted malware analysis and APT hunting to agent-based workflows for vulnerability discovery, AI is helping security teams stay ahead of emerging threats.

Meanwhile, the growing use of AI in enterprise environments amplifies the threat of sensitive data leakage, as employees interact with AI tools, both directly and indirectly, potentially exposing internal communications, financial data, and intellectual property. According to GenAI Protect data, 1 in 80 prompts sent to GenAI services from enterprise devices posed a high risk of sensitive data leakage, and roughly 1 in 13 contained potentially sensitive information.

As organizations increasingly adopt AI for research and security purposes, it is crucial to address these risks head-on. The integration of AI-driven systems into cyber security must be accompanied by robust safeguards to prevent exploitation, ensure privacy, and maintain the integrity of critical systems. Moving forward, balancing AI’s tremendous potential with the growing threat landscape will be paramount to ensuring both the safety and success of enterprises in the digital age.