Executive Summary

- Check Point Research analyzed the construction and control flow of the Rust version of Akira ransomware that circulated in early 2024, which has specific features uniquely targeting ESXi servers. Our analysis demonstrates how Rust idioms, boilerplate code, and compiler strategies come together to account for the complicated assembly.

- The report outlines principles to follow when analyzing in-the-wild (ITW) Rust binaries in general.

- We present an analysis of the design strategies used by the malware’s authors, as indicated by the assembly and parts of the reconstructed source code.

Introduction

Earlier this year, Talos published an update on the ongoing evolution of Akira ransomware-as-a-service (RaaS), which has become one of the more prominent players in the current ransomware landscape. According to this update, for a while in early 2024, Akira affiliates experimented with promoting a new cross-platform variant of the ransomware called “Akira v2.” This new version was written in Rust and was capable of targeting ESXi bare-metal hypervisor servers.

Executables written in Rust have a reputation for being particularly challenging to reverse-engineer. While it is often possible to answer specific research questions, such as “What file extensions are being targeted?” or “What encryption method is being used?”, this is usually achieved by finding ways to circumvent a comprehensive analysis at the assembly level. This is, of course, a pragmatic and cost-effective approach. However, if we set it aside for a moment, we might glean some interesting insights.

While we do answer many of the above-mentioned ‘usual’ research questions about this ransomware strain in this text, they are neither the focus nor the main objective here.

Our earlier research, Rust Binary Analysis Feature by Feature, was conducted in a laboratory setting. We examined the language features as they appear in Klabnik’s and Nichols’ “The Rust Programming Language”, and observed the idioms the Rust compiler uses to implement them in assembly. We controlled the source code and took care to compile in Debug mode so that the compiler would not complicate our lives with surprising optimizations.

In this publication, we engage that theory with the real world: an actual Rust malware that uses various standard library and third-party crates, and was compiled in Release mode. We note the design choices made by the malware authors and the involved Rust constructs and see how both translate into assembly – sometimes in surprising ways that lab work did not prepare us for. Our main aim is to break through that technical barrier and reach a capital-U Understanding of this Rust binary and its control flow.

Overview

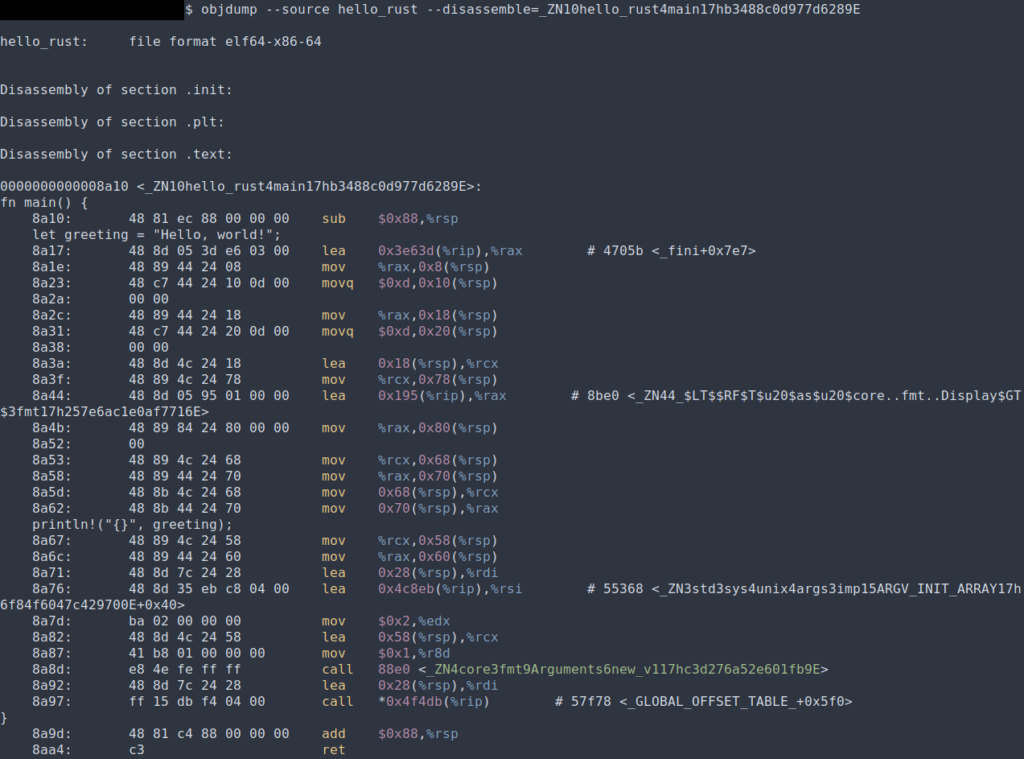

We noted earlier that the binary was compiled in Release mode; however, there isn’t a clear flag indicating this. We infer it for two reasons: first, some compiler optimizations we discuss later should definitely not be present in a Debug build; and second, the binary's debug information has no source lines, which are typically included by default in a Debug build, as seen below (a good way to test for these is the command objdump --source <binary> | grep "let ").

The ransomware’s control flow, from its root to the encryption logic, is as follows:

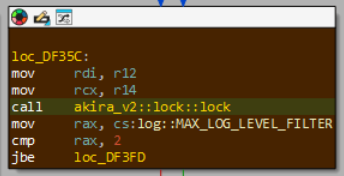

Main -> default_action -> lock -> lock_closure

Broadly speaking, Main parses arguments, default_action determines program behavior and collects targeted files, and lock is a wrapper that launches threads that carry out the actual encryption logic that lives in lock_closure.

Maybe you are unpleasantly surprised by how cyber criminals are so far gone that the “default action,” in their eyes, is destroying your machine and encrypting all the files on it. However, as we will later see, there is a perfectly reasonable explanation for why this is so.

We will now sequentially analyze each of the above-mentioned functions and observe how control flows from one to the next.

Main function

Command Line Arguments

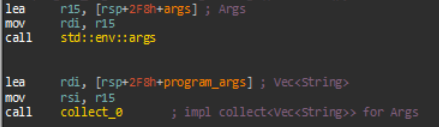

This malware has a full-fledged CLI, and as a consequence, command-line arguments play a significant role in the way it functions. The first thing the malware does is obtain them (the original source is let program_args: Vec<String> = args().collect()).

args().collect()

This brings us to our first natural question: How are program arguments stored in memory?

According to the official documentation, std::env::args returns std::env::Args, a type that implements Iterator<Item=String>, meaning that it implements a function next() -> Option<String>. However, this does not guarantee what the underlying implementation looks like. (In fact, Rust supports dynamic dispatch, which lets code call a trait's methods on an object whose concrete type is not known at compile time. Although this is not the case here, we will see this feature in action later.)

std::env::Args is implemented as a wrapper for another type called ArgsOs. This type, in turn, is implemented using sys::args::Args, a type returned by args_os(), which calls the function sys::args::args(). At this point, you may dutifully look for sys::args::args in the Rust standard library sources. You will not find it there. Because this code is OS-dependent, it is part of the Platform Abstraction Layer (PAL), which swaps in the correct code at compile time depending on the targeted OS. On Unix targets, the implementation lives in std/src/sys/pal/unix/args.rs. It first invokes an arcane function, argc_argv, that retrieves the raw argc and argv values the C runtime handed to the program at startup. Then it manually, painstakingly converts this pair into a Vec<OsString>. The complete code of sys::args::args is included below.

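```rust
// std's Unix implementation of sys::args::args (as quoted). `imp` is the
// platform-specific module holding the argc/argv captured at startup.
pub fn args() -> Args {
    let (argc, argv) = imp::argc_argv();

    let mut vec = Vec::with_capacity(argc as usize);

    for i in 0..argc {
        // Read the i-th char* from argv; stop early on a NULL entry.
        let ptr = unsafe { argv.offset(i).read() };
        if ptr.is_null() {
            break;
        }

        // Copy the NUL-terminated C string's bytes into an owned OsString.
        let cstr = unsafe { CStr::from_ptr(ptr) };
        vec.push(OsStringExt::from_vec(cstr.to_bytes().to_vec()));
    }

    Args { iter: vec.into_iter() }
}
```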

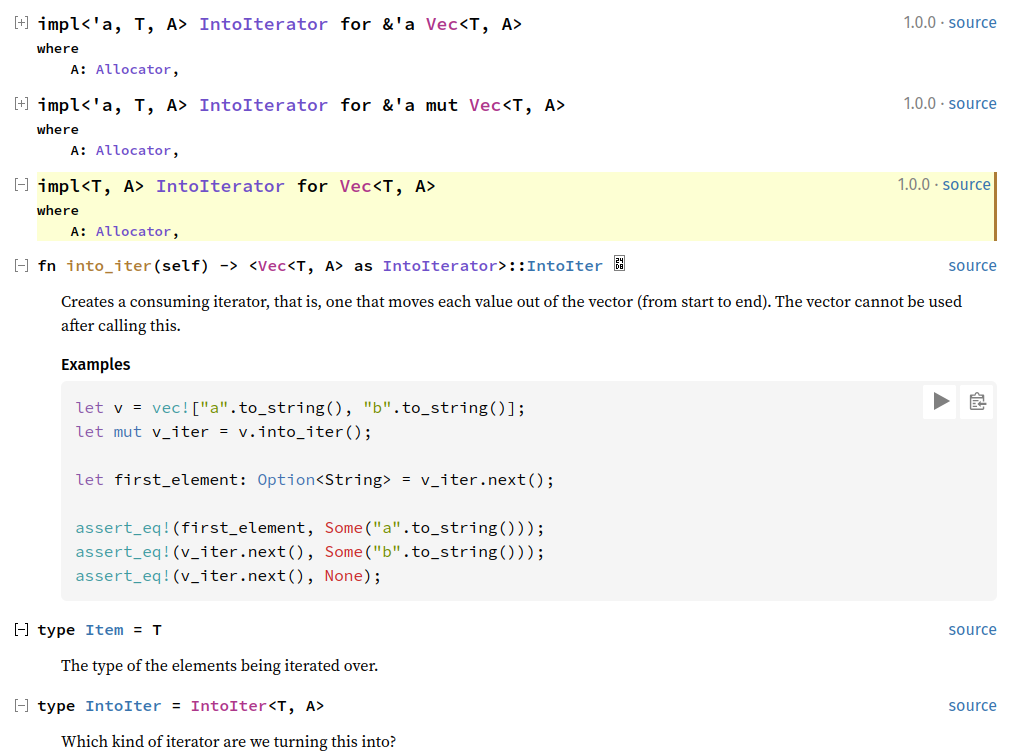

So, if we squint at that last line and put together all the pieces of the puzzle, we can conclude that under the hood, Args holds whatever into_iter returns for a Vec<OsString>. However, figuring out the actual type still requires navigating murky waters. The into_iter method is provided by the IntoIterator trait (std::iter::IntoIterator), which has an associated type called IntoIter. Meanwhile, the documentation for std::vec::Vec lists three different implementations of IntoIterator for Vec:

into_iter for Vec.

One of these returns a type named IntoIter (std::vec::IntoIter), which is unrelated to the associated type IntoIter of std::iter::IntoIterator; the two just happen to share a name. At this point, we will mercifully note that the third implementation, highlighted in yellow above, is the one invoked by sys::args::args (the other two are for shared and mutable references to a vector). That is to say, Args are kept in memory as an IntoIter<OsString>.
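To convince ourselves that the by-value implementation is the one selected, here is a minimal sketch of our own. The helper name owned_args_iter is hypothetical and not part of std. The helper only compiles because calling into_iter on an owned Vec<OsString> yields a std::vec::IntoIter<OsString>, the same type sys::args::args stores in Args.

```rust
use std::ffi::OsString;
use std::vec::IntoIter;

// Compiles only because the by-value `impl IntoIterator for Vec<T>`
// declares `type IntoIter = std::vec::IntoIter<T>`.
fn owned_args_iter(args: Vec<OsString>) -> IntoIter<OsString> {
    args.into_iter()
}

fn main() {
    let args: Vec<OsString> = std::env::args_os().collect();
    let mut it = owned_args_iter(args);
    // The first element is usually the program path (argv[0]).
    println!("argv[0] = {:?}", it.next());
    println!("remaining = {}", it.len());
}
```

If the parameter were changed to &Vec<OsString>, one of the reference implementations would be chosen instead, and the return type would no longer match.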

We still need to determine the implications of this, concrete-byte-wise. The vec::IntoIter structure is defined in the standard library as follows:

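```rust
// Simplified: the real definition also has a PhantomData marker and an
// allocator field, both zero-sized for the default Global allocator.
pub struct IntoIter<T> {
    buf: NonNull<T>,  // start of the original heap allocation
    cap: usize,       // capacity of that allocation
    start: *const T,  // next element to be yielded
    end: *const T,    // one past the last remaining element
}
```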

An OsString is implemented as a sys::os_str::Buf, and its precise implementation varies between operating systems. On Linux it is represented as a standard three-field Vec<u8> consisting of length, buf, and capacity. On Windows, by contrast, the standard library uses a custom solution called WTF-8 encoding: “a hack intended to be used internally in self-contained systems with components that need to support potentially ill-formed UTF-16 for legacy reasons”. The relevant type, Wtf8Buf, includes an additional field, is_known_utf8, bringing the total number of fields to four.
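The layouts described above can be spot-checked with a short sketch like the following. Type layouts of this kind are implementation details rather than language guarantees, so treat the numbers as what current toolchains happen to produce. The helper name layout_in_words is ours. On Windows, we would expect the extra is_known_utf8 flag to pad OsString to four words.

```rust
use std::ffi::OsString;
use std::mem::size_of;
use std::vec::IntoIter;

/// Returns (words in OsString, words in vec::IntoIter<OsString>) on this target.
/// These sizes are implementation details, not language guarantees.
fn layout_in_words() -> (usize, usize) {
    let word = size_of::<usize>();
    (size_of::<OsString>() / word, size_of::<IntoIter<OsString>>() / word)
}

fn main() {
    let (os_string, into_iter) = layout_in_words();
    // On current Linux toolchains: OsString = 3 words (Vec<u8>),
    // IntoIter<OsString> = 4 words (buf, cap, current position, end).
    println!("OsString: {os_string} words, IntoIter<OsString>: {into_iter} words");
}
```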

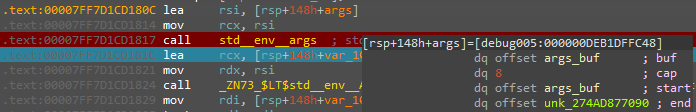

Looking at the args variable in memory right after std::env::args is called, we can confirm the structure of the IntoIter<OsString>:

IntoIter<OsString> structure in memory.

Below is the internal buffer of OsStrings for the arguments with which we ran a test Windows program compiled from the same source (the value 0x274ad87f101 is an artifact of the WTF-8 encoding):

OsStrings in sequence, pointed at by the buf pointer of the IntoIter<OsString>.

CLI Parser

After the command line arguments are collected, many writes to stack memory are followed by a call to a function called default_action. We will explore the details of this function later, but its name raised our suspicions that it might be a sample or boilerplate code of some kind. After all, it is hard to imagine someone deliberately naming a function “default action”. A quick web search for this function name locates the seahorse crate, which is a CLI framework. In one of its numerous “hello world” examples, we find the following code:

```rust
use seahorse::{App, Context, Command};
use std::env;

fn main() {
    let args: Vec<String> = env::args().collect();
    let app = App::new(env!("CARGO_PKG_NAME"))
        .description(env!("CARGO_PKG_DESCRIPTION"))
        .author(env!("CARGO_PKG_AUTHORS"))
        .version(env!("CARGO_PKG_VERSION"))
        .usage("cli [name]")
        .action(default_action)
        // add_command() and sub_command() are defined elsewhere in the example (not shown)
        .command(add_command())
        .command(sub_command());

    app.run(args);
}

fn default_action(c: &Context) {
    println!("Hello, {:?}", c.args);
}
```

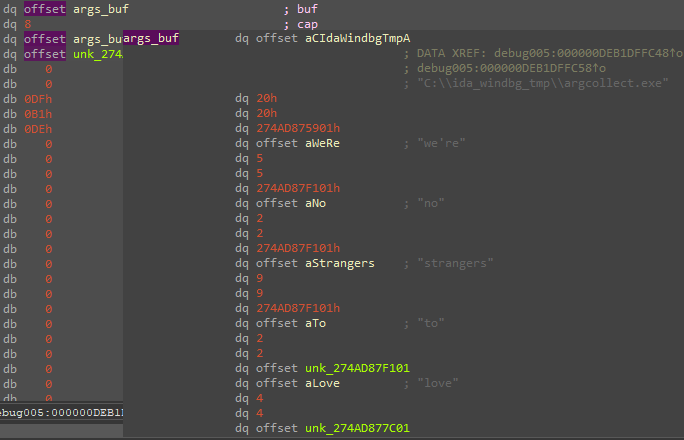

Now we know where the name default_action came from. The malware sources must have contained this code block or something a lot like it (the string cli [name] also appears as-is). It is clear that the struct being initialized with all those stack writes is a seahorse App. This struct is implemented in the following way:

```rust
pub struct App {
    pub name: String,
    pub author: Option<String>,
    pub description: Option<String>,
    pub usage: Option<String>,
    pub version: Option<String>,
    pub commands: Option<Vec<Command>>,
    pub action: Option<Action>,
    pub action_with_result: Option<ActionWithResult>,
    pub flags: Option<Vec<Flag>>,
}
```

This allows us to decipher the initialization of the App struct:

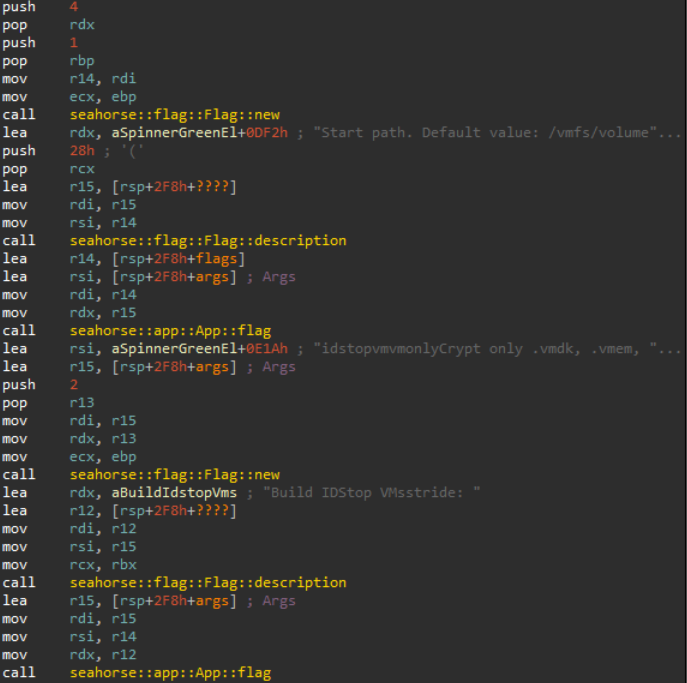

App object.

The assembly then proceeds to initialize the long list of supported CLI flags (the image below accounts for the first two):

App object in preparation for calling run.

Finally, it calls run, transferring control to default_action.
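The way run ends up in default_action without a direct call from main is easiest to see with a stripped-down model. The sketch below is hypothetical and heavily simplified: MiniApp and its field are our own names, not seahorse's API. The action is stored in the struct as a function pointer, and run reaches it through that pointer. This is why, in a binary, the hand-off can appear as a call through a pointer rather than a direct call to default_action.

```rust
// Hypothetical, simplified stand-in for a seahorse-style App (not the crate's code).
type Action = fn(&[String]) -> String;

struct MiniApp {
    action: Option<Action>,
}

impl MiniApp {
    fn run(&self, args: Vec<String>) -> Option<String> {
        // Skip argv[0] (the program path) and pass the rest to the stored callback.
        let rest = args.get(1..).unwrap_or(&[]);
        self.action.map(|act| act(rest))
    }
}

fn default_action(args: &[String]) -> String {
    format!("Hello, {:?}", args)
}

fn main() {
    let app = MiniApp { action: Some(default_action) };
    let args: Vec<String> = std::env::args().collect();
    if let Some(out) = app.run(args) {
        println!("{out}");
    }
}
```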

The Immediate Puzzle

default_action is the function launched by the seahorse boilerplate code we saw earlier. When we look at its disassembly, we are immediately greeted by a startling sight:

default_action overview.

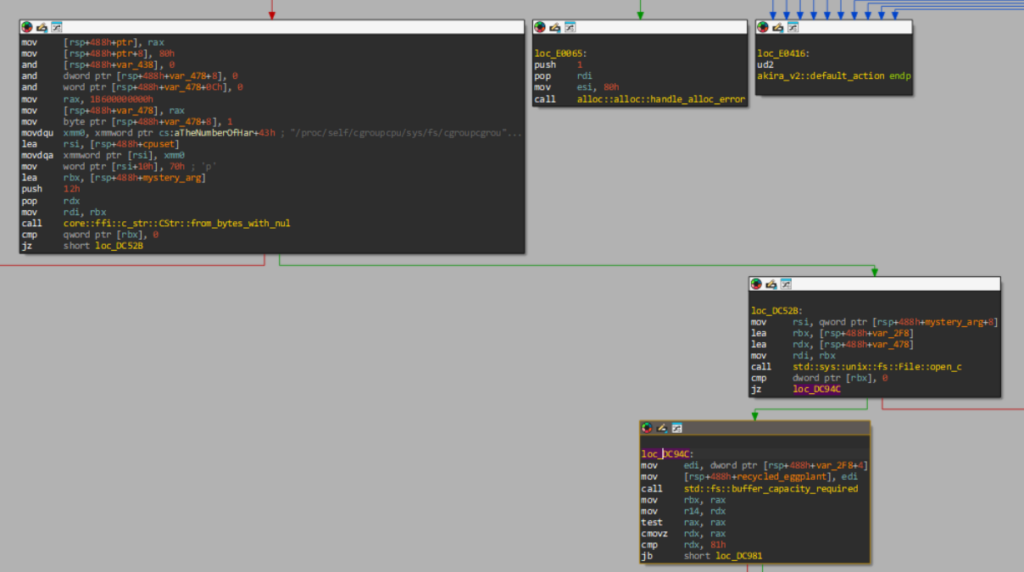

default_action first few basic blocks.

The malware authors apparently implement their desired functionality by… directly calling CStr::from_bytes_with_nul (from std::ffi) and then std::fs::buffer_capacity_required? This is unexpected. There are several dozen more eyebrow-raising basic blocks of this kind; the entire upper half of default_action (diagram above) is code like this. The pièce de résistance is this basic block. It should be the final clue that if you have been trying to parse and decompile this assembly directly in your brain, you should probably stop.

default_action basic block featuring optimized instructions.

Actually, this is just one-tenth of that basic block, which goes on for a mind-numbing 200-odd instructions.

What gives?

Pull the Thread

To provide an operator-friendly visual representation of what the malware is doing, the authors used the library indicatif, which is “a Rust library for indicating progress in command line applications to users [.. that] provides progress bars and spinners as well as basic color support, but there are bigger plans for the future”. indicatif output is true eye candy for CLI fans:

We invite you to put yourself in the malware operator’s shoes for a moment, seeing this colorful box full of emojis and progress bars as it cheerfully declares “destroying backups”, “generating symmetric session key”, “encrypting VM”, “writing ransom note”. Truly, the future is here! Just like the seahorse project page, the indicatif project page contains several ‘hello world’ examples, and one of them is the source for the above eye-catching demo: yarnish.rs. We will get to the full code later, but one particular feature of it is that it is multithreaded, and has the following outline:

```rust
let handles: Vec<_> = (0..4u32).map(|i| {
    ...
    thread::spawn(move || { ... })
    ...
})
.collect();
...
```

We have good reason to suspect that the source in yarnish.rs, or rather, code heavily inspired by it, appears verbatim in the malware source (more on this later). Sadly, this alone still does not explain why Akira’s default_action begins with a deluge of basic blocks invoking the C FFI and other low-level functionality. To account for that, we need to consider that the malware authors introduced a small modification to the above source — one that, on their own end, must have been an afterthought. This snippet in the original boilerplate:

```rust
let handles: Vec<_> = (0..4u32)
    .map(|i| ...
```

hard-codes the number of threads to 4. As the malware authors correctly noted, this is fine for a “hello world” example but does not befit production code. So, first of all, they added seahorse code that lets the malware operator control the number of launched threads directly, using the following command-line argument:

--threads <int> Number of threads (1-1000)

Then they (again correctly) asked themselves: “But what if the operator doesn’t supply a value for this flag? What is the right thing to do?” After a moment of thought, they added the following:

--threads <int> Number of threads (1-1000). Default: number of logical CPU cores

And modified the source code to something like:

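```rust
// Reconstructed logic (not recovered verbatim); threads_flag_value: Option<usize>
let threads: usize = match threads_flag_value {
    Some(n) => n,
    // available_parallelism() returns io::Result<NonZeroUsize>; .get() unwraps the NonZero
    None => available_parallelism().unwrap().get(),
};
...
let handles: Vec<_> = (0..threads)
    .map(|i| ...
```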

We will now delve into the functions that available_parallelism calls, the functions these functions call, and so on for several layers of the call stack. The grim motivation for this will become clearer soon (and you might be able to already guess at it).

Inline-ception

According to its documentation, the function available_parallelism() -> io::Result<NonZero<usize>> “returns an estimate of the default amount of parallelism a program should use [..] This number often corresponds to the number of CPUs a computer has, but it may diverge in various cases”. The implementation of this function for Linux targets is below.

```rust
// Linux branch of std's available_parallelism (redacted). Just above this
// block, std declares `let mut quota = usize::MAX;`.
{
    quota = cgroups::quota().max(1);
    let mut set: libc::cpu_set_t = unsafe { mem::zeroed() };
    unsafe {
        // Ask which CPUs the calling thread may run on (pid 0 = calling thread).
        if libc::sched_getaffinity(0, mem::size_of::<libc::cpu_set_t>(), &mut set) == 0 {
            let count = libc::CPU_COUNT(&set) as usize;
            let count = count.min(quota);
            if let Some(count) = NonZeroUsize::new(count) {
                return Ok(count)
            }
        }
    }
}
// Fallback: number of online processors, still capped by the cgroup quota.
match unsafe { libc::sysconf(libc::_SC_NPROCESSORS_ONLN) } {
    -1 => Err(io::Error::last_os_error()),
    0 => Err(io::const_io_error!(io::ErrorKind::NotFound, "The number of hardware threads is not known for the target platform")),
    cpus => {
        let count = cpus as usize;
        let count = count.min(quota);
        Ok(unsafe { NonZeroUsize::new_unchecked(count) })
    }
}
```
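To make the decision logic easier to follow, here is a pure-Rust model of it. This is our own sketch with no libc calls. The three answers the operating system would give (the affinity-mask count, the cgroup quota, and the sysconf result) are passed in as parameters. The name model_available_parallelism is hypothetical.

```rust
// Hypothetical model of the logic above; the OS answers are parameters:
//   affinity: Some(n) if sched_getaffinity succeeded and CPU_COUNT gave n
//   quota:    cgroup quota in whole cores (usize::MAX when unlimited)
//   online:   what sysconf(_SC_NPROCESSORS_ONLN) returned
fn model_available_parallelism(
    affinity: Option<usize>,
    quota: usize,
    online: i64,
) -> Result<usize, &'static str> {
    let quota = quota.max(1);
    // First choice: CPUs in the affinity mask, capped by the cgroup quota.
    if let Some(count) = affinity {
        let count = count.min(quota);
        if count > 0 {
            return Ok(count);
        }
    }
    // Fallback: online processors, also capped by the quota.
    match online {
        -1 => Err("sysconf failed"),
        0 => Err("number of hardware threads not known"),
        cpus => Ok((cpus as usize).min(quota)),
    }
}

fn main() {
    // 16 CPUs in the affinity mask, but a container limited to 4 cores.
    println!("{:?}", model_available_parallelism(Some(16), 4, 16)); // Ok(4)
}
```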

According to the in-source comments, the undocumented function quota() -> usize “returns cgroup CPU quota in core-equivalents, rounded down or usize::MAX if the quota cannot be determined or is not set.” The implementation of this function, which we’ve again redacted for brevity, goes like so:

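```rust
pub(super) fn quota() -> usize {
    let mut quota = usize::MAX;
    // `try` blocks are an unstable feature that std uses internally
    let _: Option<()> = try {
        let mut buf = Vec::with_capacity(128);
        // find our place in the cgroup hierarchy
        File::open("/proc/self/cgroup").ok()?.read_to_end(&mut buf).ok()?;
        // ... (parsing of `buf` into `cgroup_path` and `version` elided) ...
        quota = match version {
            Cgroup::V1 => quota_v1(cgroup_path),
            Cgroup::V2 => quota_v2(cgroup_path),
        };
    };
    quota
}
```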

The undocumented functions quota_v1 and quota_v2 are sub-implementations of quota for the two versions of the Linux Control Groups (cgroups) API.
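To give a feel for what quota and quota_v2 actually compute, below is a considerably simplified sketch of our own. The names find_cgroup and quota_from_cpu_max are hypothetical, and the standard library handles more cases. Lines in /proc/self/cgroup have the form hierarchy-id:controllers:path, with an empty controller list under cgroup v2. Following our understanding of std's behavior, a v1 entry that lists the cpu controller takes precedence. The v2 cpu.max file holds either "<quota> <period>" or "max <period>", so the quota in whole cores is quota divided by period, rounded down.

```rust
// Simplified, hypothetical sketch of two steps std performs on Linux; not std's code.
#[derive(Debug, PartialEq, Clone, Copy)]
enum Cgroup {
    V1,
    V2,
}

/// Finds our cgroup path and version in /proc/self/cgroup-style text.
fn find_cgroup(contents: &str) -> Option<(String, Cgroup)> {
    let mut found: Option<(String, Cgroup)> = None;
    for line in contents.lines() {
        let mut fields = line.splitn(3, ':');
        // nth(1) skips the hierarchy id and returns the controller list.
        let (controllers, path) = match (fields.nth(1), fields.next()) {
            (Some(c), Some(p)) => (c, p),
            _ => continue,
        };
        let version = if controllers.is_empty() {
            Cgroup::V2
        } else if controllers.split(',').any(|c| c == "cpu") {
            Cgroup::V1
        } else {
            continue; // a v1 hierarchy without the cpu controller is irrelevant
        };
        if found.is_some() && version == Cgroup::V2 {
            continue; // keep an already-found v1 cpu entry
        }
        found = Some((path.trim_start_matches('/').to_string(), version));
    }
    found
}

/// Converts cgroup v2 cpu.max contents into whole cores, rounded down;
/// usize::MAX means "no limit" or "unparsable".
fn quota_from_cpu_max(contents: &str) -> usize {
    let mut parts = contents.split_whitespace();
    match (parts.next(), parts.next()) {
        (Some(quota), Some(period)) => match (quota.parse::<usize>(), period.parse::<usize>()) {
            (Ok(q), Ok(p)) if p > 0 => q / p,
            _ => usize::MAX, // covers the literal "max"
        },
        _ => usize::MAX,
    }
}

fn main() {
    // Illustrative inputs only, not taken from a real host.
    println!("{:?}", find_cgroup("0::/user.slice/session-1.scope\n"));
    println!("{}", quota_from_cpu_max("250000 100000")); // 2
}
```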

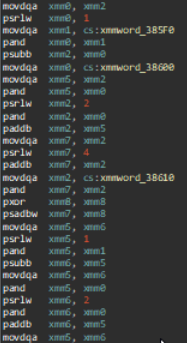

Meanwhile, the function libc::CPU_COUNT is a thin wrapper for CPU_COUNT_S, which has the following implementation:

```rust
pub fn CPU_COUNT_S(size: usize, cpuset: &cpu_set_t) -> c_int {
    let mut s: u32 = 0;
    let size_of_mask = core::mem::size_of_val(&cpuset.bits[0]);
    // Sum the set bits of every mask word covered by `size` bytes.
    for i in cpuset.bits[..(size / size_of_mask)].iter() {
        s += i.count_ones();
    }
    s as c_int
}
```

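Here, count_ones() is a thin wrapper around the compiler intrinsic ctpop (LLVM's llvm.ctpop). LLVM lowers it to a single popcnt instruction when the target CPU feature is enabled, and otherwise expands it into a branch-free bit-twiddling sequence. The exact assembly therefore depends on the target and the compiler. Here is one example, from the C++ sources of Flang (LLVM's Fortran compiler), that contains the same tell-tale constants we observed in the Akira assembly: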

```cpp
// (template header omitted; INT is a 64-bit integer type)
inline constexpr int BitPopulationCount(INT x) {
    x = (x & 0x5555555555555555) + ((x >> 1) & 0x5555555555555555);
    x = (x & 0x3333333333333333) + ((x >> 2) & 0x3333333333333333);
    x = (x & 0x0f0f0f0f0f0f0f0f) + ((x >> 4) & 0x0f0f0f0f0f0f0f0f);
    x = (x & 0x001f001f001f001f) + ((x >> 8) & 0x001f001f001f001f);
    x = (x & 0x0000003f0000003f) + ((x >> 16) & 0x0000003f0000003f);
    return (x & 0x7f) + (x >> 32);
}
```
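The masks above are easy to verify by porting the function to Rust and comparing it against the built-in count_ones. The port below is our own illustration, not the exact instruction sequence LLVM emits. The names swar_popcount and cpu_count are hypothetical; cpu_count loosely mirrors the CPU_COUNT_S loop over the words of a cpu_set_t.

```rust
/// Branch-free population count using the same masks as the listing above.
fn swar_popcount(mut x: u64) -> u32 {
    x = (x & 0x5555_5555_5555_5555) + ((x >> 1) & 0x5555_5555_5555_5555); // 2-bit sums
    x = (x & 0x3333_3333_3333_3333) + ((x >> 2) & 0x3333_3333_3333_3333); // 4-bit sums
    x = (x & 0x0f0f_0f0f_0f0f_0f0f) + ((x >> 4) & 0x0f0f_0f0f_0f0f_0f0f); // 8-bit sums
    x = (x & 0x001f_001f_001f_001f) + ((x >> 8) & 0x001f_001f_001f_001f); // 16-bit sums
    x = (x & 0x0000_003f_0000_003f) + ((x >> 16) & 0x0000_003f_0000_003f); // 32-bit sums
    ((x & 0x7f) + (x >> 32)) as u32 // add the two 32-bit halves
}

/// CPU_COUNT_S-style loop: sum the set bits of every word in a CPU mask.
fn cpu_count(mask: &[u64]) -> u32 {
    mask.iter().map(|&w| swar_popcount(w)).sum()
}

fn main() {
    println!("{}", cpu_count(&[0b1011, 0, u64::MAX])); // 67
}
```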

The (predictable, depressing) explanation for the indecipherable flood of basic blocks that appear in default_action is that ALL of them are various degrees of inlined library code.

In other words, instead of a function call to available_parallelism, the implementation of that function appears as-is in default_action. Instead of the call to quota that should appear there, the entire implementation of quota is spliced into the assembly, and the implementations of quota_v1 and quota_v2 are likewise spliced in rather than called. The same goes for CPU_COUNT, which is expanded into its implementation. That implementation is a loop that repeatedly calls count_ones, except that the loop is unrolled and each count_ones is itself expanded into its implementation. As a result, the entire complex, tangled upper half of default_action is inlined library code nested five levels deep. The different functions and levels sit side by side, all downstream of one innocuous decision by a malware author to call available_parallelism.
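The inlining cascade can be reproduced in miniature. The toy below is our own, and its function names are hypothetical. It mirrors the shape of the available_parallelism → CPU_COUNT → count_ones chain. Whether the helpers get inlined is entirely up to LLVM's heuristics. However, if you build something like this in Release mode and disassemble main, you would typically expect no calls to count_word or count_mask to remain, unlike in a Debug build. std::hint::black_box is there only to stop the optimizer from folding the whole computation into a constant.

```rust
use std::hint::black_box;

// Level 3: a per-word helper (stands in for count_ones).
fn count_word(w: u64) -> u32 {
    w.count_ones()
}

// Level 2: loop over a mask (stands in for CPU_COUNT / CPU_COUNT_S).
fn count_mask(mask: &[u64]) -> u32 {
    mask.iter().map(|&w| count_word(w)).sum()
}

// Level 1: combine with a quota (stands in for available_parallelism).
fn parallelism(mask: &[u64], quota: u32) -> u32 {
    count_mask(mask).min(quota).max(1)
}

fn main() {
    let mask = black_box([0xffu64, 0]);
    println!("{}", parallelism(&mask, 4)); // 4
}
```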

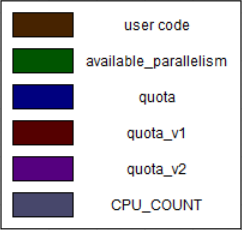

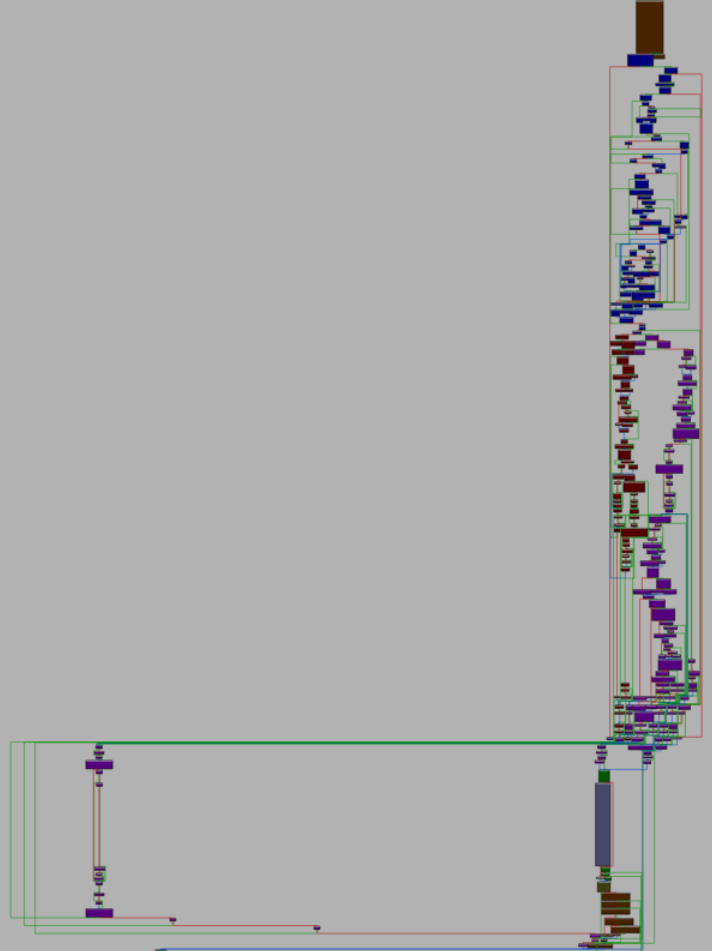

default_action by source of functionality.

We will see more examples of this theme later. In fact, between the argument initialization and the call to app.run, the main function we discussed earlier also contains a large amount of inlined seahorse code.

Default Action User Code

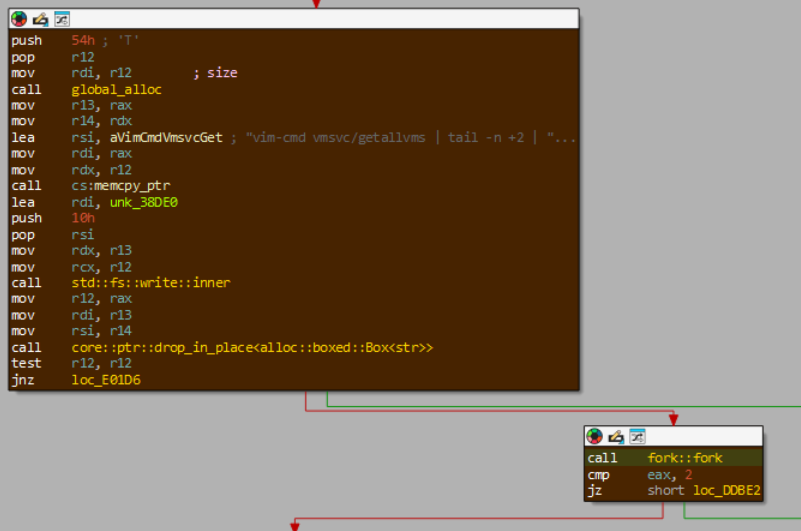

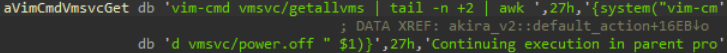

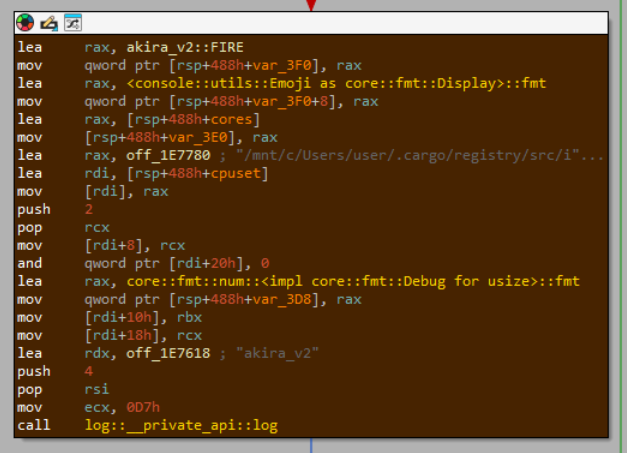

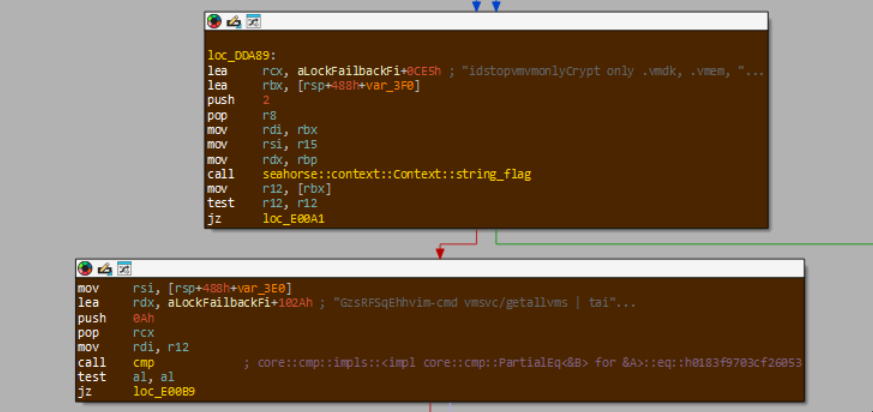

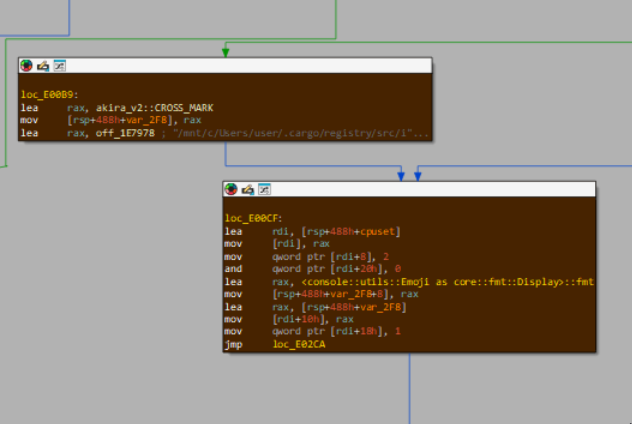

Once all the inlined library code is accounted for, the actual manually authored user code remains. You would expect that the assembly would launch user threads here as per yarnish.rs, but no. The rest of default_action is mainly dedicated to checking the values of various user-provided flags and adjusting malware behavior accordingly. One example is checking for the --stopvm flag; if this is set, the malware forks a new process with the command vim-cmd vmsvc/getallvms | tail -n +2 | awk '{system("vim-cmd vmsvc/power.off " $1)}'.

default_action turning off all hosted VMs in the ESXi server.

The behavior of the --path flag is an artifact of how this ransomware was made to target ESXi servers by default, and Linux environments in general only as an additional feature. Its official documentation is:

--path <string> Start path. Default value: /vmfs/volumes

This means that unless other behavior is specified, the ransomware will attempt to target ESXi VMs specifically. Still, we should stress that this malware has all the required functionality to act as general-purpose Linux ransomware, and this specific focus on ESXi is merely a default.

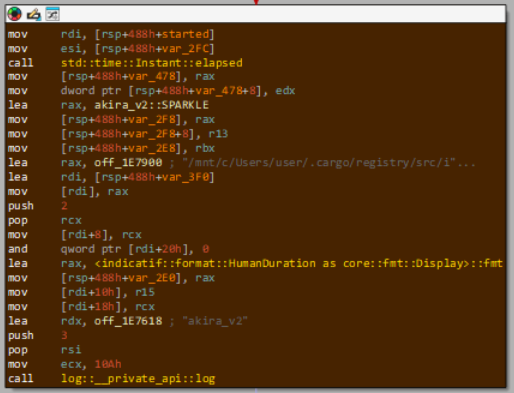

Of course, indicatif is used to its full potential to inform the malware operator of every detail of the current execution status — for example, reporting the ✨elapsed time:

Reporting the (meticulously calculated, as we saw earlier) ☀️number of available threads:

And refusing to run when provided the ❌wrong build-id:

Finally, the lock function is called, and the next stage of execution begins.

Lock function

Thread Spawning

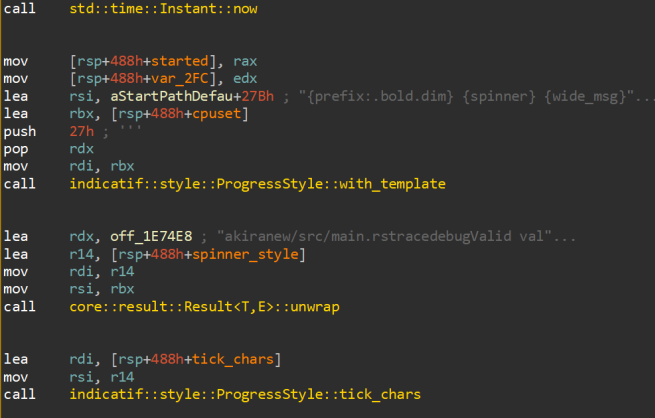

As we mentioned before, we have good reason to believe that yarnish.rs was used as a prototype during the writing of this malware — specifically for this function, lock, which was moved to a separate module (lock.rs). The code of yarnish.rs is reproduced below, redacted for brevity:

```rust
// yarnish.rs (redacted): imports, PACKAGES, and COMMANDS are omitted
let mut rng = rand::thread_rng();
let started = Instant::now();
let spinner_style = ProgressStyle::with_template("{prefix:.bold.dim} {spinner} {wide_msg}")
    .unwrap()
    .tick_chars("⠁⠂⠄⡀⢀⠠⠐⠈ ");

let m = MultiProgress::new();
let handles: Vec<_> = (0..4u32)
    .map(|i| {
        let count = rng.gen_range(30..80);
        let pb = m.add(ProgressBar::new(count));
        pb.set_style(spinner_style.clone());
        pb.set_prefix(format!("[{}/?]", i + 1));
        thread::spawn(move || {
            let mut rng = rand::thread_rng();
            let pkg = PACKAGES.choose(&mut rng).unwrap();
            for _ in 0..count {
                let cmd = COMMANDS.choose(&mut rng).unwrap();
                thread::sleep(Duration::from_millis(rng.gen_range(25..200)));
                pb.set_message(format!("{pkg}: {cmd}"));
                pb.inc(1);
            }
            pb.finish_with_message("waiting...");
        })
    })
    .collect();
for h in handles {
    let _ = h.join();
}
m.clear().unwrap();
```

The strings ⠁⠂⠄⡀⢀⠠⠐⠈ and {prefix:.bold.dim} {spinner} {wide_msg} appear as-is in the lock function, used in the same way as in the source above.
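Stripped of indicatif and rand, the structure that lock appears to inherit from yarnish.rs boils down to a fan-out/join pattern. The std-only sketch below is our own. The function work is a hypothetical placeholder for whatever each thread does, not the malware's logic. Note that the intermediate collect() is what makes all threads start before the first join; without it, the lazy map would spawn and join one thread at a time.

```rust
use std::thread;

// Hypothetical placeholder for the per-thread job.
fn work(id: u32) -> u32 {
    id * 10
}

fn fan_out(threads: u32) -> Vec<u32> {
    let handles: Vec<_> = (0..threads)
        .map(|i| thread::spawn(move || work(i)))
        .collect(); // spawn every thread before joining any of them
    // Join in spawn order, so results come back in thread-index order.
    handles.into_iter().map(|h| h.join().unwrap()).collect()
}

fn main() {
    println!("{:?}", fan_out(4)); // [0, 10, 20, 30]
}
```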

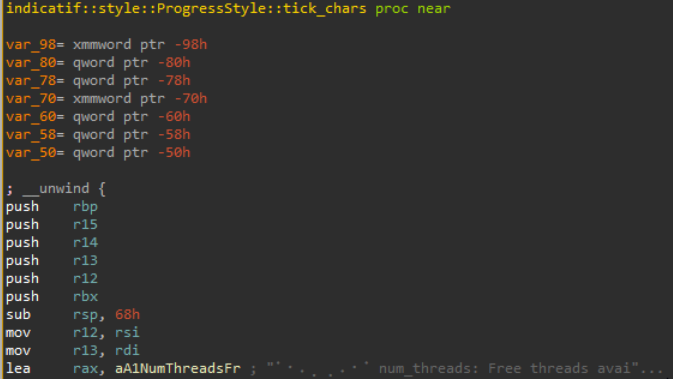

indicatif boilerplate

The hardcoded ⠁⠂⠄⡀⢀⠠⠐⠈ is spliced directly into the tick_chars implementation by the compiler (instead of the function taking it as an argument, as you’d expect from the source):

⠁⠂⠄⡀⢀⠠⠐⠈ appears directly in the function body instead of being passed as an argument.

Despite its name, at the assembly level lock does not itself perform any cryptographic operations; it delegates these to separate threads that it spawns. To get a better grip on how the call to thread::spawn cashes out in assembly, we briefly return to the lab setting and use a toy program where we control the source:

```rust
use std::thread;
use rand::Rng;

fn main() {
    let _ = thread::spawn(move || {print_rand_char()}).join();
}

fn print_rand_char() {
    let s = "astitchintime";
    let chars: Vec<char> = s.chars().collect();
    let mut rng = rand::thread_rng();
    let idx = rng.gen_range(0..chars.len());
    println!("{}", chars[idx]);
}
```

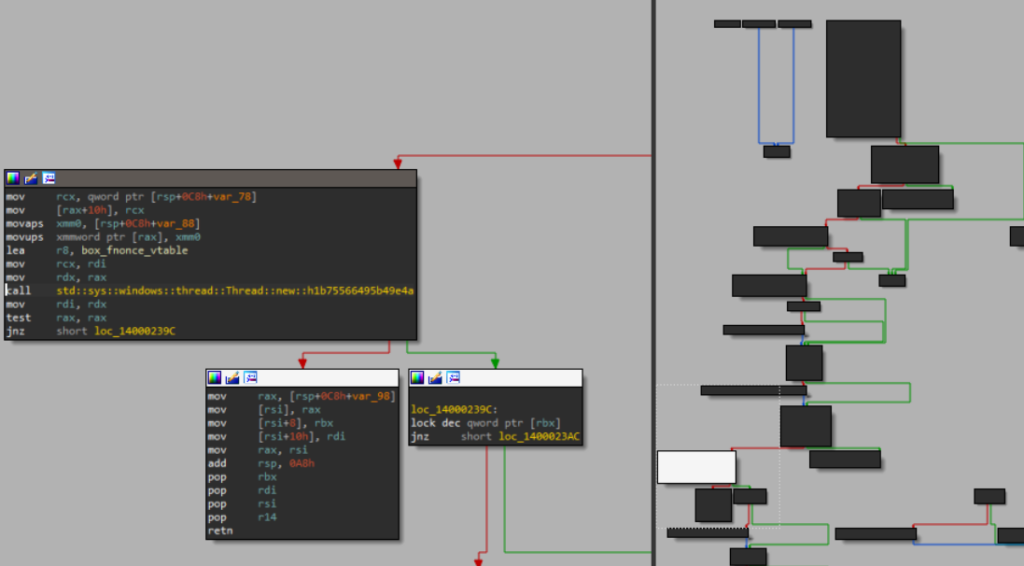

The generated assembly shows main calling std::thread::spawn, except that (again) a specific key argument is spliced directly into the code instead of being passed as an argument. This is box_fnonce_vtable, which is hardcoded directly as an argument to std::sys::windows::thread::Thread::new. The basic block containing the call is highlighted in white.

sys::windows::thread::Thread::new.

What gets passed in the register r8 is evidently a vtable containing a pointer to a function executed by the thread. This is evident from the assembly itself, not from the variable name box_fnonce_vtable, which we assigned after the fact. We could have just included the screenshot and left it there, but it is more instructive to understand what object is being constructed and operated on here, and why, as this pertains to all Rust binaries that use thread::spawn.

Thread::new, which does the actual thread creation, has the prototype new(stack: usize, p: Box<dyn FnOnce()>) -> io::Result<Thread>. This means that under the hood, the new thread is created from a dynamic dispatch object whose concrete type is only known at runtime. This is a Box<dyn Trait>, possibly familiar to you as a “struct with a vtable pointer”. In Rust, however, the vtable pointer is stored next to the data pointer in the (fat) Box pointer itself rather than inside the object. Here the trait is FnOnce, meaning the object can be called (exactly once) as a function. This is nice to know, but it doesn’t tell us what underlying type is being used here, or how it is represented in memory byte-wise. To answer that, we need to look at the implementation of spawn.
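A quick way to see the fat-pointer shape of Box<dyn FnOnce()> is to measure it. In the sketch below (the function names are ours), the trait-object box takes two machine words, a data pointer and a vtable pointer, while an ordinary Box<u8> takes one. As far as we know, current rustc vtables hold drop glue, size, and alignment, followed by the closure's call entry point. That layout is an implementation detail, however.

```rust
use std::mem::size_of;

/// (words in Box<dyn FnOnce()>, words in Box<u8>) on the current target.
fn pointer_words() -> (usize, usize) {
    let word = size_of::<usize>();
    (size_of::<Box<dyn FnOnce()>>() / word, size_of::<Box<u8>>() / word)
}

/// Boxes a capturing closure as a trait object, then calls it through the vtable.
fn call_boxed(s: String) -> usize {
    let job: Box<dyn FnOnce() -> usize> = Box::new(move || s.len());
    job()
}

fn main() {
    println!("{:?}", pointer_words()); // (2, 1)
    println!("{}", call_boxed(String::from("hi"))); // 2
}
```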

thread::spawn is implemented using a type called Builder, defined as follows:

```rust
pub struct Builder {
    name: Option<String>,
    stack_size: Option<usize>,
}
```

The Builder::spawn method has the prototype:

```rust
pub fn spawn<F, T>(self, f: F) -> io::Result<JoinHandle<T>>
where
    F: FnOnce() -> T,
    F: Send + 'static,
    T: Send + 'static,
```
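Builder is also public API, so the two Option fields above can be exercised directly. In this sketch, the thread name worker-0 and the 256 KiB stack size are arbitrary choices of ours. For comparison, the free function thread::spawn is documented as using a Builder with the default configuration and unwrapping its result.

```rust
use std::thread;

/// Spawns a thread through Builder, setting both of its Option fields,
/// and returns the name the new thread reports for itself.
fn spawn_named() -> Option<String> {
    let handle = thread::Builder::new()
        .name("worker-0".to_string()) // name: Some("worker-0")
        .stack_size(256 * 1024)       // stack_size: Some(262144)
        .spawn(|| thread::current().name().map(String::from))
        .expect("failed to spawn thread");
    handle.join().expect("thread panicked")
}

fn main() {
    println!("{:?}", spawn_named()); // Some("worker-0")
}
```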

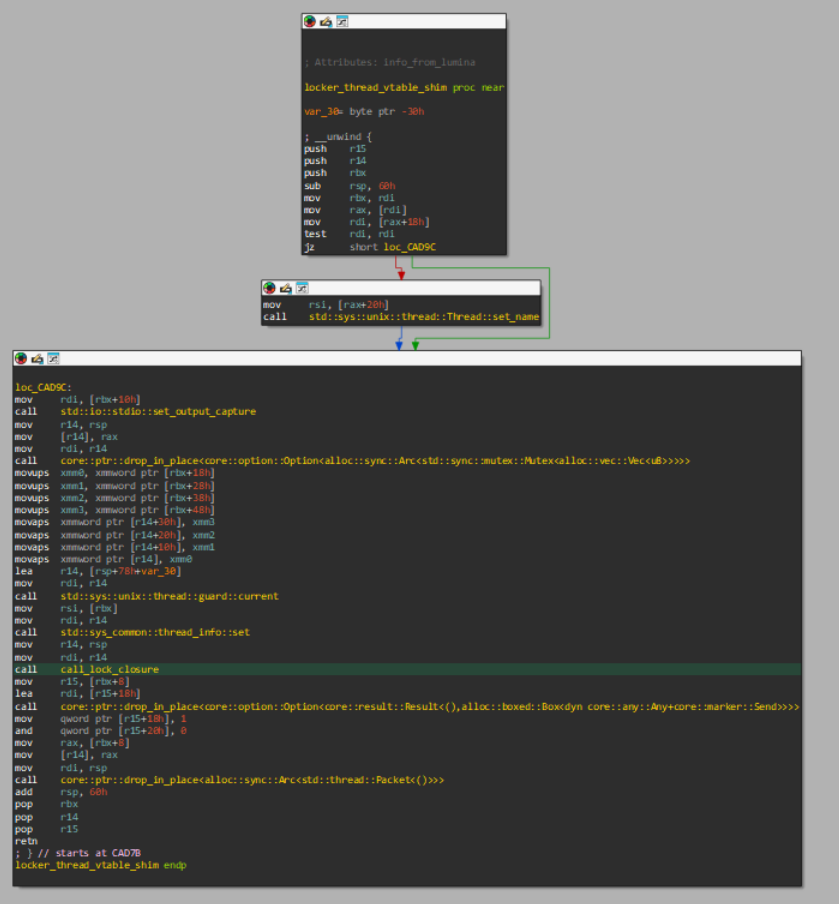

The argument f implements FnOnce; it is what the thread will execute. This argument is wrapped in additional code to create a new closure (main, the “shim”) that performs some bookkeeping surrounding the launch of the f logic. The resulting closure is the object that gets passed to Thread::new here:

```rust
let main = move || {
    if let Some(name) = their_thread.cname() {
        imp::Thread::set_name(name);
    }
    crate::io::set_output_capture(output_capture);
    let f = f.into_inner();
    set_current(their_thread);
    // Run the user's closure, catching any panic so join() can report it
    let try_result = panic::catch_unwind(panic::AssertUnwindSafe(|| {
        crate::sys::backtrace::__rust_begin_short_backtrace(f)
    }));
    ...
};
...
Ok(JoinInner {
    native: unsafe { imp::Thread::new(stack_size, main)? },
    thread: my_thread,
    packet: my_packet,
})
```
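The essence of the shim can be imitated in user code. The sketch below is hypothetical (spawn_with_shim is our own name) and keeps only one piece of bookkeeping, panic capture via catch_unwind. The real shim also sets the thread name, the output capture, and the current-thread handle. The sketch does show the same shape: the user's closure is wrapped in an outer closure and boxed as a Box<dyn FnOnce() -> R + Send>, and that trait object is what gets handed to the thread-spawning code.

```rust
use std::panic::{self, AssertUnwindSafe};
use std::thread;

// Hypothetical mini-shim around a user closure `f`.
fn spawn_with_shim<F, T>(f: F) -> thread::JoinHandle<Result<T, String>>
where
    F: FnOnce() -> T + Send + 'static,
    T: Send + 'static,
{
    // Outer closure: run `f`, turning a panic into an Err value.
    let shim: Box<dyn FnOnce() -> Result<T, String> + Send> = Box::new(move || {
        panic::catch_unwind(AssertUnwindSafe(f)).map_err(|_| "thread panicked".to_string())
    });
    // A boxed FnOnce trait object is itself callable, so it can be spawned directly.
    thread::spawn(shim)
}

fn main() {
    let ok = spawn_with_shim(|| 2 + 2).join().unwrap();
    println!("{ok:?}"); // Ok(4)
}
```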

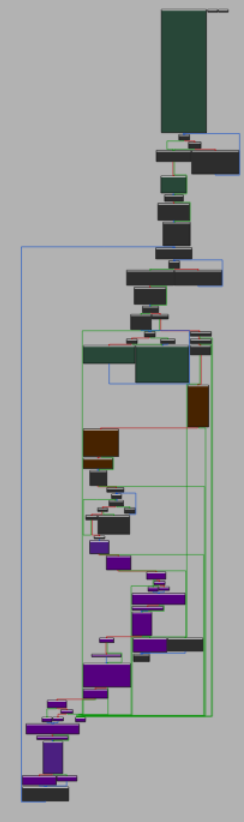

We can now take a proper look at the assembly implementing the multithreaded ransomware logic. The entire call chain thread::spawn -> Builder::spawn -> Builder::spawn_unchecked -> Builder::spawn_unchecked_ is inlined (highlighted in purple) directly into the lock function:

Builder::spawn call chain inlined into user code.

The call to Thread::new appears here:

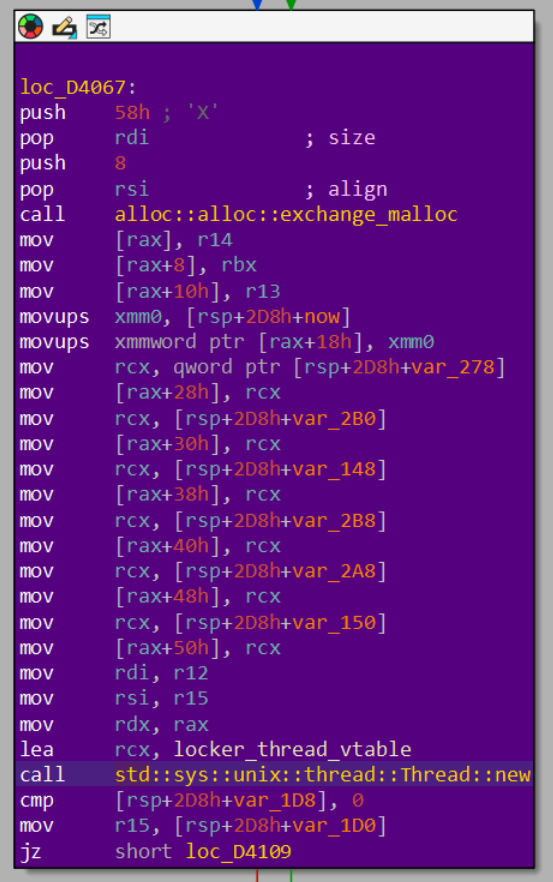

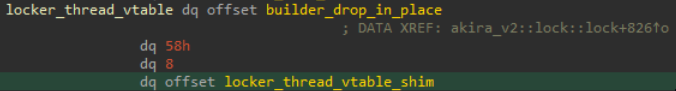

Box<dyn FnOnce()> object leading up to the call to sys::unix::thread::Thread::new.

The locker thread vtable:

Box<dyn FnOnce()> object in memory.

The shim is below (e.g., the call to set_output_capture is visible here):

let main = move || …

For the sake of simplicity, we assigned the name call_lock_closure to the call where the shim invokes sys::backtrace::__rust_begin_short_backtrace with lock_closure as a parameter.

By default, the malware recursively targets files for encryption under the directory /vmfs/volumes. Apart from the --vmonly flag, the malware offers the operator sophisticated control over exactly which files will be targeted through the --exclude flag, as detailed in the help text:

Exclude - skip files by "regular" extension. Example: --exclude="startfilename(.*).(.*)" using this regular expression will skip all files starting with startfilename and having any extensions. Multiple regular expressions using "|" can also be processed: --exclude="(win10-3(.*).(.*))|(win10-4(.*).(.*))|(win10-5(.*).(.*))"

Once the thread is launched, the process of encrypting its victim file begins.

“Lock Closure” & Encryption Logic

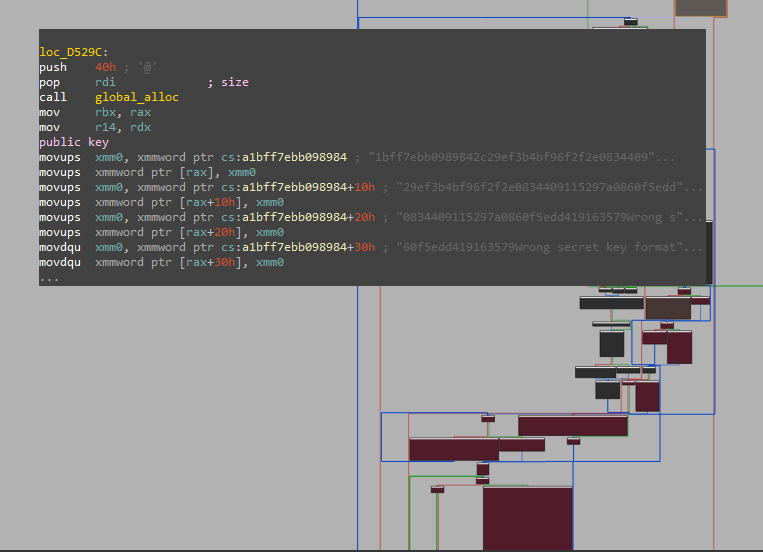

This is what the authors of this venerable ransomware placed inside thread::spawn(move || { ... }) in their modified yarnish.rs. Encryption uses a standard hybrid approach that combines an asymmetric and a symmetric cipher: first a per-file symmetric key is generated, and then it is encrypted using the per-binary hardcoded public key. For the asymmetric part, the malware uses the dalek.rs implementation of Curve25519. The public key, which varies from sample to sample, is embedded in the binary as a hexadecimal string:

For the symmetric per-file encryption, the malware somewhat unusually uses SOSEMANUK. This stream cipher has seen some documented malicious use since at least 2022 in Pridelocker, a fork of Babuk. For this specific cipher, the malware uses the rust-crypto crate. As a point of interest, rust-crypto has its own implementation of encryption using Curve25519, separate from that of dalek.rs.

The use of SOSEMANUK is (again) not unprecedented and has been documented in other versions of this ransomware. However, we can still clearly picture the malware author picking this specific cipher and subconsciously snickering, “Heh heh, have fun.” Indeed, with this decision they forced the hand of a heroic soul to implement SOSEMANUK from scratch in Python specifically to deal with this ransomware.

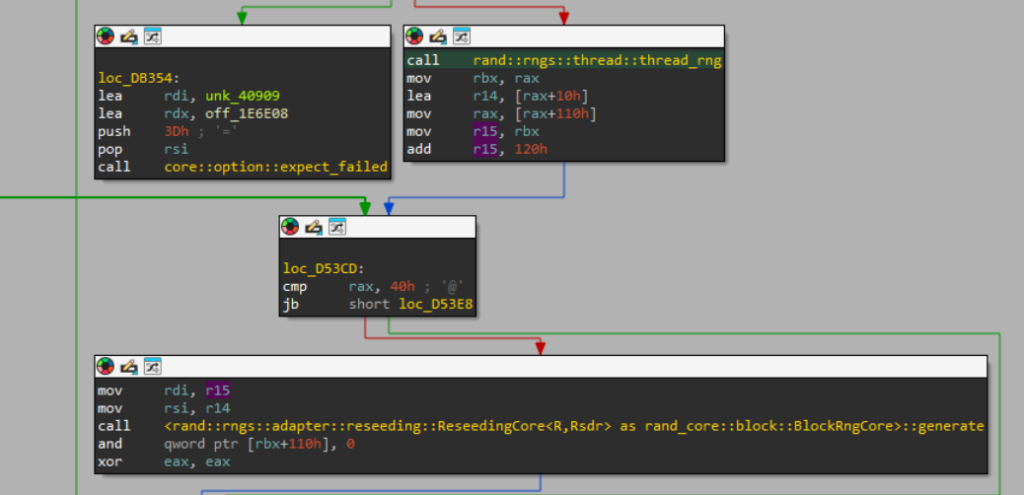

The symmetric session key is generated immediately after the public key is loaded into memory. This is done by calling thread_rng to obtain a ThreadRng object, then calling generate on its internal ReseedingCore. The authors would have used the user-facing Rng trait, which offers functions like random and fill for this purpose. The underlying layers of logic eventually query the operating system's randomness source (on Linux, the getrandom system call or /dev/urandom). What we see is the inlined internal implementation, down to the level of generate (part of the BlockRngCore trait).

The same is true for the curve25519 and the SOSEMANUK implementations, which appear as-is in the lock closure. We visualize the spliced basic blocks below.

The dalek.rs implementation of Curve25519 leaves some identifiable traces in the form of function calls. The SOSEMANUK implementation, by contrast, is completely inlined, and the cipher has to be identified the traditional way, by spotting incriminating constants. One example is 0x54655307, which in decimal is 1415926535, the first ten digits of pi after the decimal point. The image above shows only the tip of the iceberg of the inlined SOSEMANUK implementation, which is one single, gigantic basic block spanning hundreds of instructions. Once the target file is encrypted, the malware appends the extension .akiranew. Once all files in a target directory have been encrypted, the malware writes a ransom note, akiranew.txt, to that directory. The note begins:

Hi friends, Whatever who you are and what your title is if you're reading this it means the internal infrastructure of your company is fully or partially dead, all your backups - virtual, physical - everything that we managed to reach - are completely removed. Moreover, we have taken a great amount of your corporate data prior to encryption. [..]

Tragically, the person reading the above is not likely to appreciate the great effort made by the Rust compiler to bring this result to them with fearless concurrency and in a blazingly fast manner.
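Because SOSEMANUK ends up fully inlined, its constants are among the few reliable handles on it. Below is a quick sanity check of the 0x54655307 example from the encryption discussion. The helper name is_pi_fraction_constant is ours. The constant equals 1415926535 in decimal, and in an x86-64 binary it would appear as the little-endian byte sequence 07 53 65 54.

```rust
/// True if `k`, written in decimal, equals the first ten digits of pi after the
/// decimal point (read from the text form of the f64 constant).
fn is_pi_fraction_constant(k: u32) -> bool {
    let pi = std::f64::consts::PI.to_string(); // "3.141592653589793"
    k.to_string() == &pi[2..12]
}

fn main() {
    let k: u32 = 0x5465_5307;
    println!("{k} {}", is_pi_fraction_constant(k)); // 1415926535 true
    // Byte pattern to search for in a little-endian (x86-64) binary.
    println!("{:02x?}", k.to_le_bytes());
}
```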

Conclusion

Rust is cross-platform, has good ergonomics, and offers many ready-made libraries. Together, these have made it a surprisingly strong contender in the malware landscape, despite its notoriously steep learning curve. To experiment with Rust, malware authors have by necessity left behind many of their favorite anti-analysis tricks. Unfortunately for reverse engineers, the very nature of the language, combined with the compiler’s drive to optimize its output, often results in forbidding disassembly that seems to pressure the analyst into looking at literally anything else. Apart from the counter-intuitive effects of generics and monomorphization (which we covered in a previous publication), when analyzing a Rust binary we need to contend with aggressive inlining that, as it turns out, can easily reach five levels deep into library code.

In this publication, we leveraged some favorable conditions to map the control flow of an actual ITW Rust binary end to end. One of the most crucial tools in our arsenal was the authors’ reliance on ready-made boilerplate code associated with each of the third-party libraries they used. It should go without saying that we won’t always be so lucky, and that the already troubled ecosystem of RE plugins and kludges now has an additional gap to bridge. The situation seems to call for automatic tooling that can isolate and identify spliced-in inline code, even when it recursively contains other spliced-in inline code. This is a formidable challenge, especially in an environment where analysts have not always been able to reliably identify even tidy, encapsulated functions with well-defined calling conventions.

Still, we hope you gained something from the journey of analyzing an actual ITW Rust binary and sorting through the various hairy internals that get spliced into top-level user code, where they have no business being. Once upon a time, reverse-engineering C binaries was also primordial and scary. Eventually, understanding improved, tooling caught up, and the task became much less formidable than it once was. We can only hope that even the occasionally painful output of the Rust compiler will meet the same fate, one day in the not-too-distant future.

Protections

Check Point Threat Emulation and Harmony Endpoint provide comprehensive coverage of attack tactics, filetypes, and operating systems and protect against the types of attacks and threats described in this report.

- Ransomware_Linux_Akira_C

- Ransomware_Linux_Akira_D

- Ransomware.Wins.Akira.G

- Ransomware.Wins.Akira.H

IOCs

| Indicator | Value |
|---|---|
| SHA256 | 3298d203c2acb68c474e5fdad8379181890b4403d6491c523c13730129be3f75 |
| VM termination substring | awk '{system("vim-cmd vmsvc/power.off " $1)}' |
| Encrypted file extension | .akiranew |